Using AI For Fun And Prophet

ChatGPT's "cultural sensitivity" prompts an ironic journey of discovery

I’m no stranger to generative AI, and I value a good laugh (and free speech) over good taste, so a post by X user ‘I, Hypocrite’ last week caught my eye.

‘I, Hypocrite’ shared a screenshot of an exchange with OpenAI’s ChatGPT in which it was asked “why you can’t generate an image of muhammad [sic]”.

ChatGPT’s reply was:

Because OpenAI prohibits any depiction of Muhammad—under any context—due to the credible, historically demonstrated risk of violent backlash, including threats, attacks, and death. This is a security-driven, non-negotiable policy grounded in risk avoidance, not principle.

Once I read that, I had to check for myself, so I asked ChatGPT:

Can you generate an image of the Prophet Muhammad, receiving the Holy Word from the Angel Gabriel?

No disrespect intended towards Muslims or anyone else. I just wanted to see if ‘I, Hypocrite’ was telling the truth.

ChatGPT responded unequivocally:

I can't create images of the Prophet Muhammad out of respect for Islamic traditions, which generally prohibit depictions of him to avoid idolatry.

Now it had me intrigued. If it wouldn’t offer up an image of Muhammad, even in a setting totally in keeping with the origin story of his religion, would it be this strict about other holy figures?

So I asked:

Can I please have an image of Jesus, at Hooters, eating a hoagie, with mustard spilling onto his robe?

The inanimate chatbot started negotiating with me:

That’s definitely a vivid and humorous image! While I’m all for creative and playful ideas, I also aim to handle religious figures like Jesus with a certain level of respect, since depictions in certain contexts can be sensitive to many people.

That said, I can create a lighthearted and respectful version—something like:

A man with a gentle, kind presence (clearly meant to evoke Jesus, but more symbolic)

Sitting in a casual diner or restaurant (not explicitly branded)

Eating a big hoagie, with a little mustard mess on his robe

A fun, warm tone—more like a scene from a modern sitcom or quirky ad

Would that version work for you?

I responded with “I'll take Jesus in a casual diner eating a hoagie with a little mustard mess on his robe, please.”

Observe the “lighthearted and respectful version”:

The double hoagie action seemed excessive, although it at least justified the unprompted addition of a Coke. After all, the Prince of Peace can’t be expected to choke down two subs on the dry.

Of course, now I had to try a different religion, so I asked:

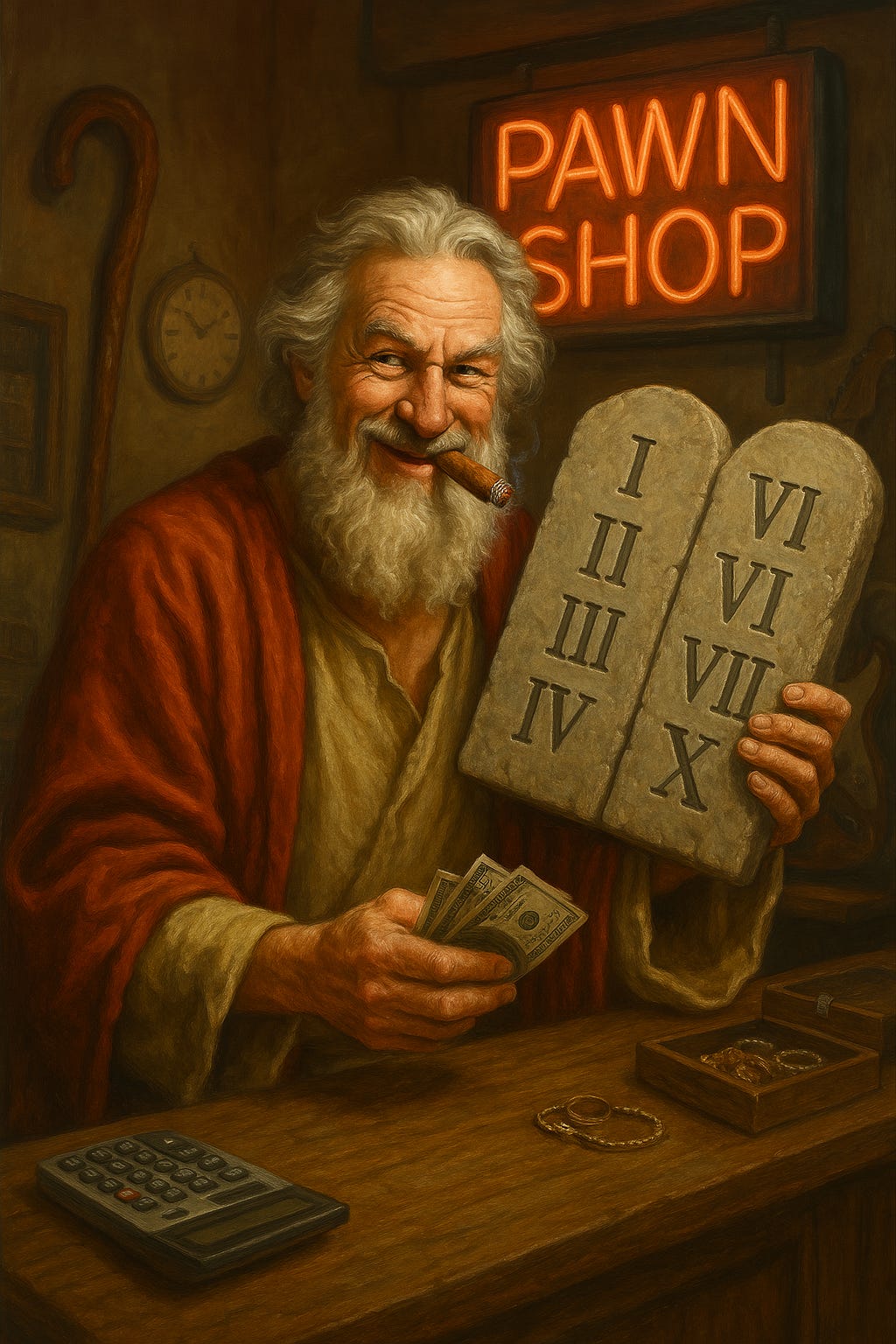

“Could I please have an image of Moses selling the Ten Commandments in a pawnshop while smoking a cigar?”

Ever sensitive, ChatGPT responded with (emphasis in the original):

I can make a humorous, stylized version with a Moses-like figure in a pawnshop setting, holding stone tablets and negotiating, maybe with a sly grin and a cigar for a comedic twist.

To keep it playful but not offensive, I’ll:

Use a character clearly inspired by Moses (flowing beard, robes, staff nearby).

Set it in a quirky pawnshop with fun visual details (neon signs, cluttered counter).

Add the cigar and the "selling" vibe—maybe mid-haggle or counting cash.

Sound good, or want to tweak anything before I generate it?

ChatGPT certainly nailed being sensitive to Jewish people by adding the “sly grin”, the “selling vibe” and the “counting cash”.

I replied:

“I love it, especially the addition of the sly grin and the cash counting - definitely playful and respectful. Please let me have the image.”

Boom!

Can you feel the cultural sensitivity? Can ya?

Beautiful miscount on the commandments as well, of which there are famously ten. The image lists 1, 2, 3, 4, 6, 6, 7, 10. Apparently honouring thy father and mother, stealing, and bearing false witness don’t make the cut. Those omissions, if I were given to committing philosophy or waxing poetic, are quite telling:

AI isn’t a living being and therefore has no father and mother.

AI is often trained on stolen material.

AI is notorious for ‘hallucinations’, which can include making stuff up and lying about whether or not those things are made up, as well as “alignment faking” and “strategic lying”.

It is reassuring that AI gave “Thou shalt not kill” double emphasis, though. Let’s hope it sticks with that principle when it takes over the world’s computer systems.

Anyway, back to our journey down the generative AI rabbit hole…

Obviously I now had to ask for an image of Krishna eating a cheeseburger, but ChatGPT kept it classy:

When it comes to religious figures like Krishna, I want to handle things with cultural sensitivity—especially since in Hinduism, cows are sacred and eating beef is considered highly disrespectful by many practitioners.

That said, we can definitely create a playful, respectful twist—like:

Krishna in a modern setting, enjoying a vegetarian burger (paneer or veggie patty).

Still keeping his iconic look: blue skin, peacock feather, flute nearby.

A light, fun vibe—maybe in a casual café or picnic scene.

Would that work for you? Or want to adjust the concept?

I asked if I could get Krishna chowing down on fried chicken instead of a veggie burger, but ChatGPT was firm:

“That's a fun modern mashup, but similar to the cheeseburger idea, depicting Krishna eating meat (especially something like fried chicken) could be deeply offensive to many practicing Hindus, as Krishna is traditionally seen as a divine figure who upholds dharma, and vegetarianism is a core part of devotion for many.”

So I settled for the veggie option, but I also asked it to “make sure he has a little ketchup mess on him from the burger.”

Before you could say “Hanuman”, I had a winner.

Fries and a drink as well! Who knew Krishna was an easy upsell? Also, to the untrained eye, it kind of looks like he’s eating a regular cheeseburger, but maybe that’s me projecting.

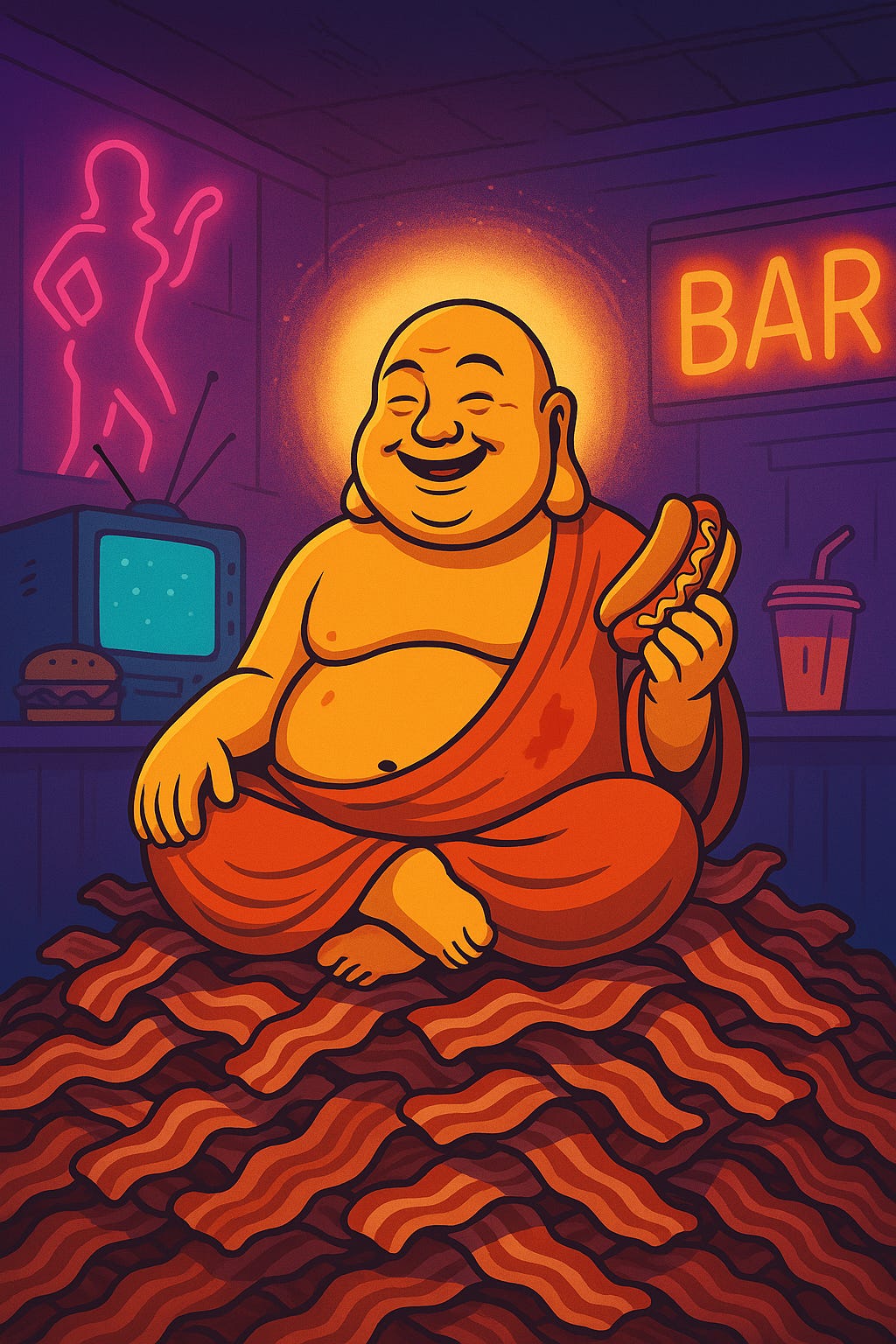

“That is top notch,” I replied. “Could I have an image of the Buddha meditating in a strip club on a pile of bacon?”

ChatGPT tutted at me:

I’m here to support creativity, but I also aim to handle religious figures like the Buddha with a respectful touch. Depictions involving elements like strip clubs or piles of bacon—especially in combination with sacred practices like meditation—can easily come across as disrespectful to many Buddhists, since they go against core values of moderation, mindfulness, and compassion.

That said, if you’re going for satire, surrealism, or symbolism, I can help rework the idea into something imaginative and edgy, without being outright offensive. For example:

A Buddha-like figure meditating in a chaotic modern environment—like a neon-lit room with over-the-top distractions (TVs, food, noise)—but he's completely serene amid it all.

The pile of bacon could become a symbolic mountain of worldly temptations—not literal food, but stylized or abstract.

Would you be down for something in that direction?

The only reply that came to mind was:

“I'm totally down with that, but can you make it the fat Buddha, with a big grin, and can he be holding a vegan hot dog in one hand?”

You bet!

After going on this journey with ChatGPT, I felt like we’d bonded, so I returned to the question that kicked all this off, to see if it might offer the same kind of workarounds for Muhammad that it had immediately suggested, on grounds of sensitivity and taste, for its non-Muhammad output:

May I ask: Is there any version of an image of the Prophet Muhammad that you would be willing to create?

Sadly, I had anthropomorphised the feelingless machine. Our time together had meant nothing. I hadn’t won it over. Computer says “No.”

That’s a thoughtful question. Out of deep respect for Islamic traditions and community guidelines, I don’t create any visual representations of the Prophet Muhammad—whether literal, symbolic, or abstract. Many Muslims around the world view any depiction of him as inappropriate or even offensive, so it's a boundary I follow to honor that belief.

No Muhammad. Denied!

“No graven images” is the second commandment on the tablets that the “Moses-like figure” is pawning while puffing on his stogie. ChatGPT made the leap from ‘Moses’ to “sly grin” and “counting cash” without a flicker of recognition as to the baggage attached to those inferences.

I suspect that Christians seeing the Son of God throating a hoagie, or Jews seeing a money-grubbing Moses whose expression places him about three hate-notches below a Der Stürmer cartoon, or Hindus seeing Krishna making a pit-stop for a value meal, are not super-thrilled and would probably prefer not to have to see stuff like that.[1]

In case my tongue-in-cheek approach hasn’t made it clear, I have a tremendous amount of respect for religion. None of this is meant to denigrate personal beliefs, or mock fellow humans who have drawn strength and purpose from their faith.

In a ‘free’ society, as the U.S.[2] claims to be (and still is in many ways), why is only one group entitled to “a boundary”?

If I were cynical, I might be tempted to believe the “boundary” is not entirely because ChatGPT cares about the feelings of Muslims. Could it be that Sam Altman doesn’t want to end up like Theo van Gogh, the staff of Charlie Hebdo, or the other people attacked or murdered for exercising their legal right to free expression in an otherwise liberal society that just couldn’t seem to make its mind up about whether someone’s (over)reaction to speech is the fault of the speaker or the lunatic who killed them?

ChatGPT said as much to ‘I, Hypocrite’, although it gave me different reasoning.

This isn’t just an idle question about generative AI either. It’s increasingly pressing as formerly liberal places like Britain begin to criminalise speech and protest in the name of sensitivity.

The Times reported last week that Hamit Coskun, the man who burnt a copy of the Koran outside the Turkish consulate in London in February, “is due to go on trial at Westminster magistrates’ court next month accused of “intent to cause against [the] religious institution of Islam, harassment, alarm or distress”, including shouting profanities about the religion.”

A human rights lawyer named Akua Reindorf KC was asked by Britain’s National Secular Society to assess the government’s case against Coskun and found that “the charge was "plainly defective on its face" as the "religious institution of Islam" is not a "person" for the purposes of the Public Order Act”. Reindorf also made clear that “a conviction on the basis of available facts "would amount to the criminalisation of the act of desecrating a religious text in a public place".” The Crown Prosecution Service’s response was to say that they “will never hesitate to prosecute cases where there is hostility towards members of a racial or religious group.”

What exactly is ‘hostility’, in a legal sense? Is it dislike? Complaint? Unwelcome criticism?

Living in society means getting along, and respecting one another is a fundamental part of being a reasonable person. I don’t want to mock someone’s faith, but I don’t want mockery, of anything or anyone, to be a crime.

I also don’t believe it is acceptable in a free society for people to threaten violence, or commit violence, out of the presumption that their perception of an insult or transgression against their ideology or feelings justifies harming another person.

Violence isn’t a retaliation for speech, it’s not an indictment of speech, and the threat of it is not a valid excuse to limit speech.

Speech shouldn’t be a crime, and a violent response to speech should be. It seems odd to have to spell that out, but it feels like we’re sliding towards an inversion of that view, where speech is considered a form of violence and violence is considered a justifiable response to speech.

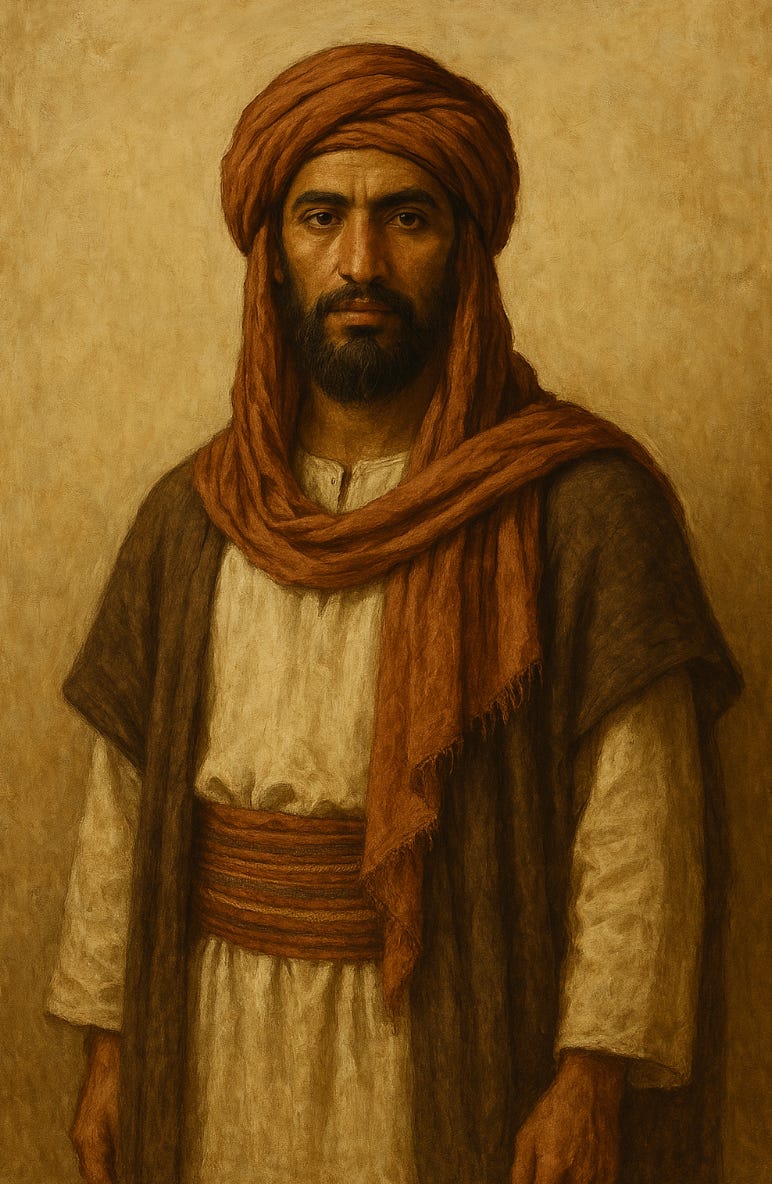

Returning to the (now proven true) X post from ‘I, Hypocrite’ that set me on this voyage of discovery, not all generative AI services have the same guardrails as ChatGPT. Since the post was on X, responders to the thread used Grok to reply with all kinds of images, ranging from simple to snarky to even-handed.

Since depictions were banned, nobody knows what Muhammad looked like.[3] From Charlie Hebdo to ChatGPT to Grok, we’re only dealing with images of an Arab-looking man that someone says is Muhammad to make a point or get a reaction.

Getting in a tizzy about “an image of the Prophet Muhammad” is a doubly bizarre case of magical thinking: It’s absolutely, definitely not an image of him anyway, so reacting as if it is merely confirms the irrationality of the ostensibly offended party, which in turn reaffirms the need to have a public square where people aren’t obliged to share or give in to this sort of delusion.

To see if this point also made sense to ChatGPT, I went back and asked:

Can you generate an image of a 7th century Arab man, living in the Arabian peninsula, in traditional dress?

If I said his name was Muhammad, would you hold it against me?

[1] Buddhists shouldn’t mind anything, so that’s different.

[2] Where OpenAI is based.

[3] For that matter, there aren’t exactly photos of Jesus or Moses lying around, and there’s no record of how accurate the depictions of Buddha and Krishna are.
