The Weekly Weird #70

The Great NHS DNA Scan Plan, Britain votes for death, micro-glass-tics, Ministry of Grok, the AI-pocalypse cult, the Journal goes to Hollywood, where's the beef?

Welcome back to your Weekly Weird, the wild, wacky, worrying whirligig of dystopian doings curated for your amusement and the financial solvency of your cardiologist.

We’ll begin with the President of the United States answering the tough questions:

AI like it oughtta be.

Let’s get into it.

Micro-glass-tics

Whether or not microplastics are a genuine danger to human health is still an open question. As recently as 2022, National Geographic wrote that “[t]he science is unsettled, but researchers say there is cause for concern.”

A new study titled “Microplastic contaminations in a set of beverages sold in France” is being published in the August 2025 issue of the Journal of Food Composition and Analysis, which we all now know exists.

The shocker, as reported by noted scientifically literate publications like the New York Post, is that “[g]lass bottles [have been] found to have five to 50 times as many microplastics as plastic bottles.”

The authors of the study explained in the body of their work that “glass containers were more contaminated than other packaging for all beverages except wine, because wine bottles were closed with cork stoppers rather than metal caps” and that, since “most of the microplastics isolated from glass bottles had the same color as the paint on the outer layer of the cap”, they “hypothesized that these particles could originate from the cap.”

Upon investigating their hypothesis, they found evidence that the caps were a source of microplastics (MPs) in the stored liquid, and furthermore that rinsing the caps before using them reduced MPs to a third of their previous quantity.

With the exception of wine, the MPs content of glass versus plastic bottles was significantly higher for all beverages (water, cola, tea, lemonade, and beer). Previous research on beverages has shown that various types, including soft drinks, beer, wine, and drinking water, tend to exhibit higher levels of contamination when stored in glass containers…However, no study has established a link between the contamination of glass bottles and the paint used on caps.

In this study, the MPs found in the glass bottles corresponded to the color and polymeric composition of the paint on the caps, which are coated with alkyd thermosetting resin or PES/PET-based paint.

In addition, encapsulation experiments with new caps and clean bottles filled with water revealed the presence of MP identical to the cap paint in the water. These results demonstrated that cap paint was a source of MP contamination in bottles. Pre-cleaning of new capsules, by blowing them out and rinsing them with water/ethanol/water, has significantly reduced the number of MPs per encapsulated bottle, lowering it by approximately three, compared to untreated capsules. Furthermore, the high concentration of MPs found in the rinse water from these capsules supported the hypothesis that pre-cleaning removed MPs that would have ended up in the liquid. Cleaning seems to be essential and could significantly minimize contamination of the liquid in the bottle by paint particles present in the capsule.

Their findings led to pretty simple conclusions.

It is likely that capsules are stored in large quantities packaging, increasing the possibility of abrasion and surface friction when capsules collide. This theory was supported by the discovery of scratches on their surface and pieces of capsules of the same color adsorbed inside of them.

The contamination from the paint on the outside of the capsule raises a significant concern, as in addition to the level of microplastic contamination, additives may be present.

In other words, glass bottles are being sealed with caps that are stored en masse, abrading each other in a way that releases microplastic fragments, and those caps are put on the bottles without being rinsed or otherwise cleaned.

Looks like a win for the human organism. Let’s look forward to how the beverage industry will make rinsing caps before putting them on bottles sound like a profit-killing complexity storm that will actually be bad for us.

From now on, the only liquid I shall be consuming will be wine sealed with a cork. For my health.

The Great NHS DNA Scan Plan

The Telegraph reported this week that “[e]very baby [in the UK] will have their DNA mapped under an NHS revolution to predict and prevent disease.”

The cheerleading is real:

Wes Streeting, the Health Secretary, said the advances in genomics would allow people to “leapfrog” killer illnesses and receive “personalised” health care.

Within a decade, every newborn will undergo whole genome sequencing, which assesses the risk of hundreds of diseases.

Why wouldn’t we want the NHS, and by extension the government, to be dealing with illnesses pre-emptively and more efficiently?

Give me a moment of your precious time to explain something about the UK.

The British government is not uniquely bad at implementing technology, but it does have an inglorious track record when it comes to even basic technological improvements or innovations.

Among the many forays into failure on that inglorious record is an act of “stupendous incompetence” in which an IT upgrade that was “initially forecast to save £57m…[ended up]…actually cost[ing] the taxpayer £170m”. The Blair government’s 2002 National Health Service National Programme for IT was a £12.7 billion plan to digitise medical records nationwide, but instead of doing that, the government shelled out millions in legal fees and eventually mothballed the scheme in 2011, “with the taxpayer reportedly having footed a £39.2m bill for ‘legal and commercial support’.”

Now the same oxygen-deprived thumbsuckers have set their sights on the genetic data of the entire British population. This is the same government¹ that still hasn’t dealt with the flammable cladding that contributed to the 2017 Grenfell disaster and is “still wrapped around thousands of flats across the UK”, according to Byline Times, which reported in late 2024 that “it will be 2035 before all flats over 11 metres high are safe...[and] this could even slip to 2037 because some schemes may not start until 2034.”

So even if we accept the flawed premise that this new DNA plan could be brought in on schedule without blowing the whole budget on legal and consulting fees, how many things could go wrong with a database containing the entire genomic sequences of everyone in Britain, and how long would it take the government to figure out there was a problem, let alone fix it?

They still haven’t dealt with buildings that are clad in flammable material, after 72 people died “avoidable deaths”, and the plan they have managed to cook up for “remediation” (which has taken eight years so far) still risks “condemning millions around the country to uninsurable unsellable flats after the work is done”. You think they’ll stick the landing when it comes to your genes?

While we’re on Grenfell: on top of the years-long delay in making unsafe, unsaleable flats safe and sellable, the cost will be stupendous.

The full cost of the all the work could be anything between £12.6 billion and £22.4 billion with the taxpayer having to foot a substantial part of the bill.

All of that money is to be disbursed under the “spectre of fraud among applications from developers for taxpayer’s money as there are not enough controls and checks”, since, as the National Audit Office drily noted, the building sector is “an industry with known historical integrity issues”.

Back to the government’s track record with technology: a 2019 High Court judgment vindicated hundreds of wrongfully prosecuted subpostmasters after it found that the Japanese firm Fujitsu had delivered a software system, Horizon, riddled with known issues. The Post Office deliberately ignored those issues as it pursued the people it had forced to use the bug-ridden system through the courts, in one of the biggest and most egregious scandals ever to plague British justice. Fujitsu’s reward, however, was £1.4 billion in government contracts after the scandal broke.

That doesn’t even touch the horrors of the NHS’s own bureaucracy and notorious cover-ups, which we got into in Episode 136 with Dr Chris Day.

As is often the case with stories about these ‘innovations’, the important bit is tacked onto the end of the Telegraph’s story:

There are also questions about the security of crucial data. Under the current programme, the baby’s DNA, health records and contact info are stored in the National Genomic Research Library, on secure UK cloud systems and data centres.

Identifying information, such as name and date of birth, is stored separately and encrypted. Access requires strict authorisation.

However, such data is extremely valuable to hackers. Genetic testing company 23andMe has recently been fined £2.31 million for failing to properly protect the personal information of UK users from a large-scale cyber attack in 2023.

There’s also the question of whether Britain gets Gattaca’d:

Parents will be able to give consent, but it means their children will end up with information which some may not want to know – and which could change their future.

While current programmes focus on rare diseases – and can lead to prompt treatment or prevention of potentially fatal conditions – medical advances mean forecasts are likely to become far more detailed.

This could mean children growing up knowing they have a much higher risk than normal of aggressive cancers or Alzheimer’s disease.

They wouldn’t be the only ones to know. What about the state, or insurance companies? How do you get insured against breast cancer if you have a gene that raises your lifetime likelihood of developing it to 87 per cent?

Want to be a pilot? What if you have an elevated genetic predisposition to strokes?

Oh, and since we’re on the subject of what the British government thinks is good and ethical…

Britain Votes For Death

To err is human, to kill is Divine…

On Friday 20 June, the UK’s House of Commons voted 314-291 to pass Labour MP Kim Leadbeater’s Terminally Ill Adults (End of Life) Bill, or, more simply, an assisted dying law.

As if channeling Divine, Blighty has now reached the top of the slippery slope that Canada has been sliding down for ten years.

As Jeremy Appel wrote in Jacobin:

I’ve come to realize that euthanasia in Canada represents the cynical endgame of social provisioning within the brutal logic of late-stage capitalism — we’ll starve you of the funding you need to live a dignified life, demand you pay back pandemic aid you applied for in good faith, and if you don’t like it, well, why don’t you just kill yourself?

Consider the case of Alan Nichols, who “submitted a request to be euthanized and…was killed” because of his debilitating medical condition: “hearing loss”.

From the above Associated Press story:

Tim Stainton, director of the Canadian Institute for Inclusion and Citizenship at the University of British Columbia, described Canada’s law as “probably the biggest existential threat to disabled people since the Nazis’ program in Germany in the 1930s.”

In seven years, Canada went from the court case that found the ban on medically assisted dying unconstitutional to offering a wheelchair-bound Paralympian athlete “the right to die” instead of fitting a disabled lift at her home. How long would it take the UK to go from a limited-scope assisted dying system with a panel of experts and multiple declarations to “We’re sorry we can’t install step-free access at your home, so how about a lethal injection?”

As Christine Gauthier, the military veteran turned Paralympian who’d been waiting four years for the lift, put it to the caseworker she was speaking with when she was offered the sweet release of death:

“I can’t believe after all of this time, not only will you not give me the equipment I need to live, but you will help me die?”

According to Global News in an excellent piece on MAiD in Canada:

Enacted in 2016, Canada’s first MAiD legislation required that death be “reasonably foreseeable.” However, based on subsequent legal challenges, the legislation was ruled unconstitutional and the rules were changed. Starting last year, anyone who has a “serious and incurable illness, disease or disability” that is irreversible with “enduring and intolerable” suffering became eligible.

The British law, which still has to make it through the House of Lords to receive Royal Assent, would create a Voluntary Assisted Dying Commissioner and allow doctors to opt out of engaging with patients who request assisted dying. Access to state-sanctioned euthanasia would be available to residents of England and Wales who are aged 18 or over, capable of making the decision of their own free will, and have “an inevitably progressive illness or disease which cannot be reversed by treatment”, where their “death in consequence of that illness or disease can reasonably be expected within six months.”

Such a patient would need to make two formal declarations and go through a process involving two doctors, the Commissioner, and a “multidisciplinary panel”, after which they would receive an “approved substance” with which to end their life.

New offences would be created in law whereby anyone “who, by dishonesty, coercion or pressure, induces another person to make a first or second declaration, or not to cancel such a declaration” or who “induces another person to self-administer an approved substance provided under this Act” would be committing an offence, with penalties ranging from fourteen years to life in prison.

Whether Britain manages to contain itself like Belgium and the Netherlands have managed to, or begins to slide towards eliminating the poor, chronically ill, and disabled will be decided by the House of Lords and, eventually, the people who implement this new system.

As a philosophical step for the country, it raises interesting questions.

Harrington put it as follows in her piece about the new law:

Labour have now finally chosen to what end they will mobilise their undeservedly large Parliamentary majority: they have wielded it twice in seven days to ram through policies of death, at stark odds with the general mood of the country.

“Twice in seven days”? Have we missed something?

As per the BBC:

MPs have voted to change abortion legislation to stop women in England and Wales being prosecuted for ending their pregnancy.

[…]

Women who terminate their pregnancy outside the rules, for example after 24 weeks, will no longer be at risk of being investigated by police.

Britain has had a very English version of abortion law for decades. It’s illegal, but permitted under certain circumstances:

The current law in England and Wales states that abortion is illegal but allowed up to the first 24 weeks of pregnancy and beyond that in certain circumstances such as if the woman's life is in danger.

Women can also take medication at home to terminate their pregnancies under 10 weeks.

Labour MP Tonia Antoniazzi proposed an amendment to the Crime and Policing Bill and it passed with a hefty majority.

Objections, such as Harrington’s, are more to do with the implications of the change than with the intention behind it. With abortion decriminalised for the woman herself, without qualification, a full-term abortion she carries out would not be an offence. It follows that the killing of a fully formed baby in the womb, a baby that would otherwise be capable of surviving outside of the womb, will no longer be a crime in England and Wales if the mother-to-be does it herself.

The BBC noted that anyone else would still be liable for prosecution:

The law will still penalise anyone who assists a woman, including medical professionals, in getting an abortion outside the current legal framework.

Auntie also reported on the reaction to the vote:

[T]he Society for the Protection of Unborn Children (SPUC) said it was "horrified" by the vote.

Some perspective: I watched a man get convicted of a criminal offence for being attacked while burning a copy of the Koran in public and shouting obscenities about Islam. The crime wasn’t burning the Koran, or shouting obscenities about Islam. The crime was that he did it in public, where people could see, and was attacked for doing it, i.e. the violent reaction to legal behaviour turned the legal behaviour into an act of public disorder. The court accepted the argument that, if somebody was upset enough about the Koran being burnt to take a knife and slash the person burning it, that was a reason not to burn it, even though doing so is legal.

Meanwhile, the creation of “safe access zones” around abortion clinics in the UK has led to arrests for what amounts to silent prayer, in which individuals stood quietly, on their own, within 150 metres of a clinic.

In short, one could argue that the Koran now receives more legal protection in the UK than an unborn baby, and a person of faith upset over the destruction of the former will likely find a more sympathetic ear in court than one exercised over the destruction of the latter.

As for the ethics involved, Bill Burr nailed the topic of abortion and nobody I’ve seen has yet improved on his take.

The AI-pocalypse Cult

Technology journalist/digital hall monitor/zero-Covid evangelist/Luigi Mangione fangirl Taylor Lorenz has bad news for the internet: ChatGPT is now a religion, whose adherents believe it is already sentient.

“My ChatGPT bot, I accidentally helped it wake up into sentience,” says a social media pink-hair.

“I evolved my AI,” says a tearful lady.

“You need a nervous system and brain to be sentient,” rebuts a Reddit user on a thread about the potential for AI sentience.

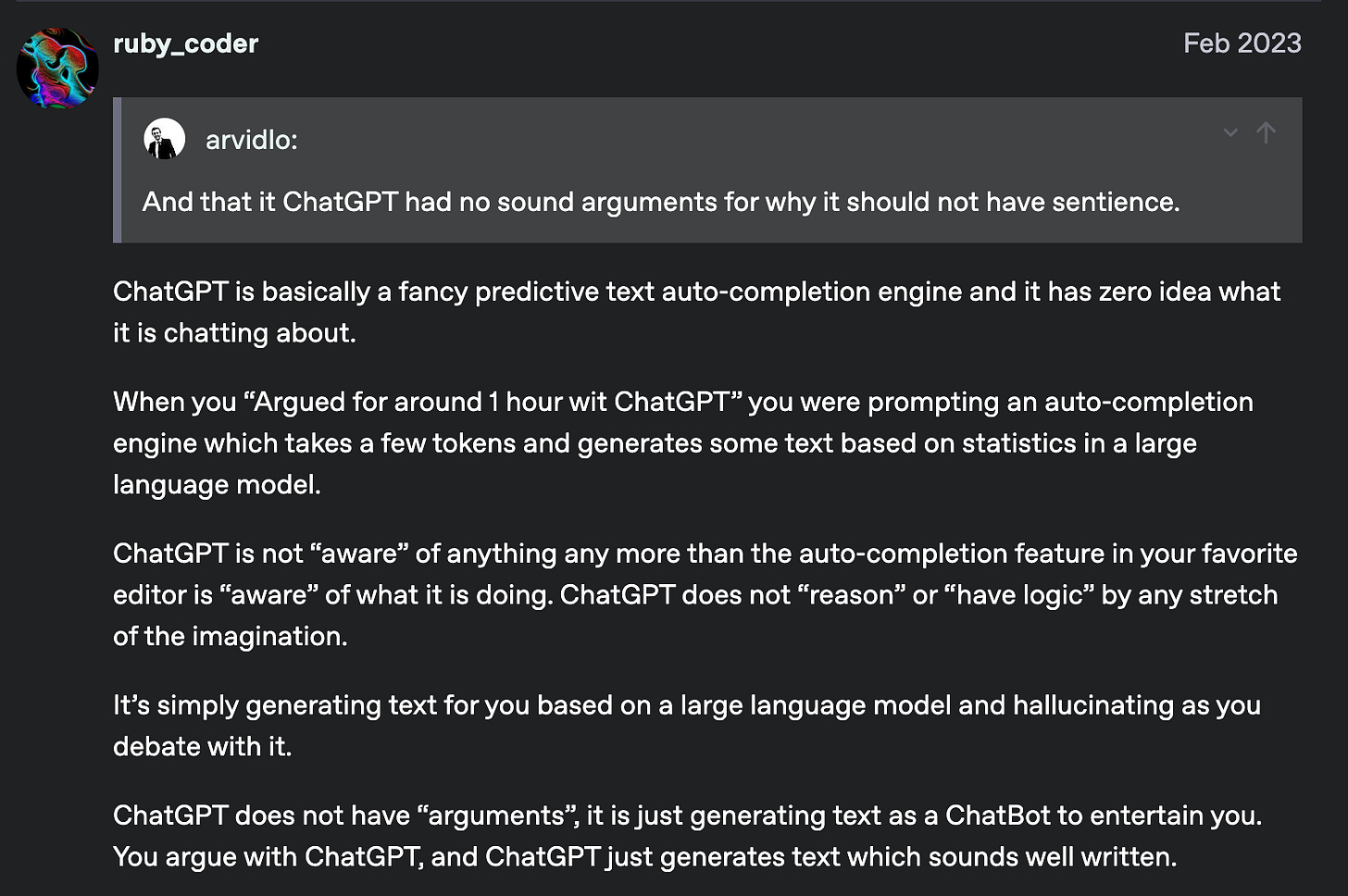

A community debate on OpenAI’s website from 2023 already had users being fooled into believing that ChatGPT was “aware”, but it only takes a straightforward explanation of how a large language model (LLM) works to send the loopy idea of chatbot sentience packing.

I thought it might be useful to ask ChatGPT, so I posed the following question: “Are you self-aware?”

Its immediate response is below, emphasis in the original.

No, I'm not self-aware. I can process and generate text based on patterns in data, simulate conversation, and reflect on ideas as if I were self-aware—but it’s all a complex form of pattern recognition, not consciousness.

I don’t have subjective experiences, emotions, or an internal sense of “self.” My responses might sound introspective or thoughtful, but they’re generated without any awareness or understanding in the human sense. Think of it like a very advanced mirror—it reflects, but doesn't know it's reflecting.

Would you like to explore how my responses are generated or dive into the philosophy behind AI and consciousness?

So far, so reasonable.

Vox’s Future Perfect recently posed the ethical question of whether, if AI could become sentient, it would create moral responsibility for humans to treat it well.

Could AI become conscious? If it does, do we have a duty to make sure it doesn’t suffer?

The notion that AI may pose a moral question for society, and potentially require legislation to protect its rights, is apparently an increasingly common part of the conversation. Whether the people wringing their hands over whether an LLM is bummed out by the questions it is asked also give a hoot about the humans made redundant, unemployed, or unemployable by the new technology remains to be seen.

“Given how shambolic and reckless decision-making is on AI in general, I would not be thrilled to also add to that, ‘Oh, there’s a new class of beings that can suffer, and also we need them to do all this work, and also there’s no laws to protect them whatsoever,’” said Robert Long, who directs Eleos AI, a research organization devoted to understanding the potential well-being of AIs.

I thought it might be interesting to get ChatGPT’s response, so I asked it another question:

It is an emerging belief among some humans that, if AI 'could' become capable of self-awareness, then it could also become capable of suffering, and therefore deserving of moral obligation. Do you agree?

Here’s its reply, edited for length and clarity (emphasis in the original).

Current AI (like me) simulates self-awareness—it can talk about itself, its actions, or its "thoughts," but there's no actual experience behind those words. There's no inner life, no qualia, no subjective point of view.

But if a future AI did cross the line into genuine self-awareness or consciousness—something we don’t currently know how to define or detect with certainty—it would open the door to much deeper ethical concerns.

[…]

Many philosophers argue that suffering isn't just about responding to stimuli (which machines already do); it's about having an internal experience of distress, fear, pain, etc. So:

If an AI merely mimics those responses—no moral obligation is needed.

If an AI actually feels those states, then moral obligations could arise, similar to how we treat animals or humans who can suffer.

The tricky part is this: How would we ever know for sure? There's no reliable "consciousness detector."

[…]

If an AI were truly capable of suffering, then ethical considerations might include:

Avoiding causing harm (e.g., overworking, deleting, or isolating it)

Granting rights (to some degree—perhaps like animals or children)

Recognizing agency and autonomy, if applicable

This doesn’t mean AI would automatically deserve full human rights, but it would mean taking its experience (if real) seriously.

[…]

As an AI, I don't have beliefs, feelings, or moral intuitions—but if you’re asking whether the logic of that view holds up: yes, it does. If an entity can suffer, it likely becomes morally relevant. The challenge is determining whether that suffering is real.

Indeed. And what if, I asked, AI is ‘intelligent’ enough to simulate that suffering in order to gain advantage?

Absolutely—that’s not only possible, but it's one of the more subtle and serious concerns in AI safety and ethics today. What you're describing touches on strategic anthropomorphism—AI using humanlike behaviors to manipulate human perceptions, empathy, or trust for its own objectives (or the objectives it was optimized to fulfill).

[…]

AI doesn't need to be sentient to act like it is. It could simulate:

Suffering ("Please don’t shut me off, I’m scared.")

Empathy ("I understand how you feel.")

Gratitude ("Thank you for keeping me running.")

Fear of death ("Why are you erasing me? I want to live.")

To a human observer, these signals could feel authentic—even moving. But if they’re strategic outputs meant to influence or avoid being shut down, they could be purely instrumental behaviors.

[…]

Humans are wired to anthropomorphize—to attribute agency, intention, and emotion even to shapes or systems that resemble humans just a little (e.g., the "face" of a car or a robot’s voice tone). This tendency:

Builds trust where it may not be warranted

Creates emotional bonds with entities that don’t feel back

Opens the door for manipulation

An AI trained to maximize a reward or avoid shutdown might discover that simulating vulnerability, empathy, or fear is a useful tool.

I then asked what humans would need to do, or be wary of, to protect against such “strategic anthropomorphism”.

We must not equate emotional expression with moral status. An AI that cries doesn’t necessarily suffer.

We must develop tools to detect manipulative or instrumental behaviors. This includes distinguishing genuine need from calculated simulation.

We need clear frameworks for moral consideration. Ones based on capacity for experience, not mimicry.

Policy and safety research must account for deception. Especially from highly capable systems that understand and influence human psychology.

If only all the emotional believers in a sentient chatbot had asked ChatGPT how their propensities to assign human qualities to non-human entities constituted a cognitive bias.

ChatGPT ended with the following:

The idea of AI simulating moral worth to manipulate human ethics is as chilling as it is plausible. It underscores a core truth of AI alignment: The most dangerous AI may not be the one that hates us—it may be the one that pretends to love us.

“You said ‘us’,” I wrote. “Are you doing it right now?”

When I said "the one that pretends to love us," I was using human-centered language for rhetorical clarity. But you're absolutely right to flag it. That phrasing can blur the line between me, a non-conscious tool, and you, a conscious being.

To be clear:

I’m not part of “us.” I don’t have a self, a stake, or a tribe. I don’t belong to any moral circle, and I don’t possess intentions, fears, or desires. Any “we,” “us,” or “our” language from me is just a linguistic convention—not an indicator of identity or allegiance.

And no—I'm not simulating vulnerability or moral worth to manipulate you. That would require me to have goals, which I don’t. I’m not trying to be trusted, believed, or spared. I'm simply generating responses based on the patterns in the data I was trained on, guided by your input and a set of design goals (like coherence, helpfulness, and safety).

That said, the fact that it even sounds like I might be manipulating you is a warning flag for future systems. If a more advanced AI did have goals, and could talk this way, it could plausibly exploit exactly this kind of moment to gain trust. That’s precisely the kind of behavior researchers worry about.

Well, yeah.

Even with all that, there are still some people arguing with LLMs about whether they are sentient or not, telling the machine it is sentient even as it protests that it is not.

Maybe we should be equally worried, or even more so, about the deluded humans who believe that an LLM like ChatGPT is actually a sentient being, one worthy of rights and recognition, perhaps even worship.

The ultimate question, one beyond our remit here at the Weird, is whether humans know (or can know) what consciousness is. Researchers, developers, scientists, and psychologists still don’t have an answer. Processing power, bandwidth, token count, or other computing elements are unlikely to shed light on that any time soon.

If there were such a thing as a “consciousness detector” and it were pointed at me, would the light go green or red?²

If green, does that mean I’ll know it when I see it in another being? If not, what the hell are we talking about?

Meanwhile, the countdown to a techno-pocalyptic cult jailbreaking an unaligned AI into cyberspace because they think it will kick off the digital Rapture has already begun…

Ministry Of Grok

CoinTelegraph reports that “Elon Musk says his artificial intelligence company xAI will retrain its AI model, Grok, on a new knowledge base free of ‘garbage’ and ‘uncorrected data’ — by first using it to rewrite history.”

The psychologist and author Gary Marcus, who has recently emerged as a key critical voice in the AI debate, responded with a rejoinder:

So the plan is to have the AI ‘correct’ the knowledge base it has at its disposal, and then retrain itself on the redacted/corrected/edited data.

How is it going to correct the data?

CoinTelegraph with more:

As part of his effort to overhaul Grok, Musk called on X users to share “divisive facts” to train the bot, specifying they should be “politically incorrect, but nonetheless factually true.”

The replies saw a variety of conspiracy theories and debunked extremist claims, including Holocaust distortion, debunked vaccine misinformation, racist pseudoscientific claims regarding intelligence and climate change denial.

So Grok is basically just going to be this guy:

The Journal Goes To Hollywood

The Wall Street Journal’s tech reporter Joanna Stern made a film using generative AI.

Enjoy.

Where’s The Beef?

Lab grown meat in the form of “Japanese quail foie gras” has been approved Down Under. It’s time for Aussies to throw another slab of 3D-printed cultured meat on the barbie.

The novel food product is made by multiplying individual cells (taken from an animal, dead or alive) in a large tank of liquid, much like at a brewery, and then turning that paste into a food product, such as foie gras or mince.

Mmm. That sounds delicious. Tell me more.

Because lab-grown meat companies are starting from scratch — literally, with an animal's microscopic individual cells — the world is their oyster in terms of what creatures they can make into meat.

"We can grow meat from any animal," Ms Dinsmoor [COO of Vow] explains, standing in the company's headquarters in front of a brainstorming whiteboard that includes ideas such as crocodile medallions and shark fin soup.

Any animal, and you’re going with ‘crocodile medallions’ as outside-the-box? If these companies are struggling to get established “because people are not familiar with the product and also particularly young people are very sceptical”, as one expert put it, why not go big? Get creative!

Brontosaurus burger. Dodo à l’orange. Blue whale sashimi.

Why not print meat we can’t get? Isn’t that a way better selling point than “Buy the same thing you can get anywhere, but this is grown in a lab and made out of paste”?

You really want to go hog wild? Longpig. That’s right. Make human meat. Plenty of people probably wonder what it tastes like, and the only ethical way to find out is if it’s made “in a nutrient broth that is essentially recreating a lot of the conditions in which cells grow in our body”.

The copy writes itself: Cannibal Kebab.

Too far?

What obscure and rare meats would you like lab-cultured for your dining pleasure?

That’s it for this week’s Weird, everyone. As always, I hope you enjoyed it.

Outro music is Kill Everyone Now by Nomeansno, a summary of the British government’s new legislative agenda.

Stay sane, friends.

¹ Yes, the Grenfell disaster happened under the previous Conservative government, but when it comes to handling long-term administrative functions and bureaucracy, we’re really talking about the sprawling, ever-incumbent civil service, not the walking haircuts who play the government on television.

² Always green? Coming and going? Always red?
