The Weekly Weird #6

1924 predicts 2024, American π, DARPA derp, the AI Resurrection, the birth of botshit, China will section you, and a punk chaser

Happy New Year!

Don’t worry, I’m not going to use the occasion of the New Year to speculate in list form about all the (bad) things that could happen this year. There’s plenty of that going around right now so you don’t need it from me. Anyway, with twelve whole months left for 2024 to surprise us to the up- or downside, the chances are slim that my imagination could capture the full spectrum of what reality might throw at us. Besides, my crystal ball is in the repair shop.

Speaking of crystal balls…

1924 Predicts 2024

Here’s a fun silent short film from 1924 in which ‘Q’ (yeah, seriously, but not that one) predicts what London will be like in 2024.

Charming in-camera effects and Monty Python-style overlays notwithstanding, the film, made by French director Gaston Quiribet, goes to show that we really don’t know what the future holds.

Speaking of predicting the future…

American π

The Forecaster, the definitely-not-impartial but very watchable documentary about Martin Armstrong, an economics analyst with a very modern tale of woe, is worth watching if you haven’t seen it yet. You’ll find the trailer below. It’s free with Amazon Prime in the UK (not sure about the US), and available elsewhere at a price. This YouTube playlist has a selection of clips from the film if you want to dip your toe.

TL;DW for the purposes of our time together: Armstrong claimed to have developed software that used historical price data to predict future geopolitical and economic events. His cycle analysis uses π (pi), the never-ending, never-repeating number immortalised by Darren Aronofsky in his excellent 1998 debut feature film (with a star turn from Mark Margolis before he made sitting in a wheelchair, flaring his nostrils, and hitting a service bell famous in Breaking Bad).

The way Armstrong tells his story, he was persecuted (and prosecuted, and jailed for 11 years) by the US government for refusing to surrender his algorithm. Sounds far-fetched, right? Come on, would Uncle Sam really try to ruin someone’s life because they had discovered a way to accurately predict geopolitical events and refused to hand it over to the CIA?

In one scene in the film, Armstrong’s former colleagues boot up a laptop, dormant since 1998, which has a presentation on it prepared before Armstrong’s arrest. The slide presentation on the laptop from back then includes a timeline of eerily accurate predictions going forward all the way to 2015 (including the dotcom bubble, sub-prime real estate crisis, and sovereign debt crisis).

For those open to the possibility of prophecy: According to Armstrong’s pi cycle, the next inflection point for a major crisis is approximately April 16th, 2024. Mark that on your calendar, and sleep tight.

DARPA Derp

DARPA (for the uninitiated, that’s the US Department of Defense’s Defense Advanced Research Projects Agency) has released its unclassified 2024 budget.

One of the planned programmes (also known as a ‘thrust’) therein is the Foundational Artificial Intelligence Science thrust. From their budget report:

…the Foundational AI Science thrust will focus on the development of new learning architectures that enhance AI systems' ability to handle uncertainty, reduce vulnerabilities, and improve robustness for DoD AI systems. One focus area of this thrust is the ability to detect and accommodate novelty - i.e., violations of implicit or explicit assumptions - in AI applications. Another focus area is the development of a model framework for quantifying performance expectations and limits of AI systems as trusted human partners and collaborators. A third focus area is the development of new tools and methodologies that enable AI approaches for accelerated scientific discovery. The technology advances achieved under the Foundational AI Science thrust will ultimately remove technical barriers to exploiting AI technologies for scientific discovery, human-AI collaboration, accommodating novelties, and other DoD relevant applications.

Guaranteeing AI Robustness against Deception (GARD) is also on the list of projects. In the quote below, ‘ML’ refers to ‘machine learning’. They sure do love their acronyms and initials.

GARD addresses the need to defend against deception attacks, whereby an adversary inputs engineered data into an ML system intending to cause the system to produce erroneous results. Deception attacks can enable adversaries to take control of autonomous systems, alter conclusions of ML-based decision support applications, and compromise tools and systems that rely on ML and AI technologies.

Autonomous weapons systems are not new in military tech, and there is a conversation to be had about the ethical arguments for and implications of lethal autonomous weapons systems (LAWS). However, with the advancement in AI and LLMs in 2023 and the likely ramping up of the rate of improvement/development going forward, GARD is a programme worth being haunted by.

An old saying in computer science is “garbage in, garbage out”. As military hardware is increasingly run not just by software, but by autonomous AI decision-making overseen or reviewed by humans, the prospect of fatally flawed choices and outcomes increases significantly. For AI to return a reasonable, measured, tactically valid response, the information it is using must be accurate. If polluted data gets into a LAWS, such as corrupted criteria for which personal characteristics or locations qualify a target for elimination, the results could be outstandingly awful.

Another ‘thrust’ is Machine Common Sense or, you guessed it, MCS.

The Machine Common Sense (MCS) program is exploring approaches to enable common-sense reasoning by machines.

…

AI systems that are capable of human-like reasoning will be able to behave more appropriately in unforeseen situations and to learn with reduced requirements for training data.

Common sense these days appears to be a superpower, so I’m not particularly optimistic about the level of common sense humans will be able to teach to AI systems. Garbage in, garbage out.

Here’s an X thread with further highlights from the DARPA budget.

Speaking of garbage in, garbage out…

The Birth of Botshit

One of the delightful things about living through the Exponential Age as a language enthusiast is the occasional neologism one discovers glittering amongst the lexical chaff like a rare jewel.

A new paper by Tim Hannigan, Ian P. McCarthy and Andre Spicer provides us with one such welcome addition to the English language: botshit.

From the paper’s abstract:

Advances in large language model (LLM) technology enable chatbots to generate and analyze content for our work. Generative chatbots do this work by ‘predicting’ responses rather than ‘knowing’ the meaning of their responses. This means chatbots can produce coherent sounding but inaccurate or fabricated content, referred to as ‘hallucinations’. When humans use this untruthful content for tasks, it becomes what we call ‘botshit’. This article focuses on how to use chatbots for content generation work while mitigating the epistemic (i.e., the process of producing knowledge) risks associated with botshit. Drawing on risk management research, we introduce a typology framework that orients how chatbots can be used based on two dimensions: response veracity verifiability, and response veracity importance. The framework identifies four modes of chatbot work (authenticated, autonomous, automated, and augmented) with a botshit related risk (ignorance, miscalibration, routinization, and black boxing). We describe and illustrate each mode and offer advice to help chatbot users guard against the botshit risks that come with each mode.

Humans really are extraordinary creatures. In a way, one of the things that permits distortions of our social fabric to form and endure is our incredible ability to adapt, to absorb change, and muddle through regardless. How quickly we’ve moved from being appalled by or worried about LLMs lying (or ‘hallucinating’, in the watered-down parlance) to producing guidelines on how to spot botshit and mitigate or work around it.

The next time someone goes batshit and calls you on your bullshit, blame it on the botshit.

The AI Resurrection

A long, long time ago in a society far, far away, the actor Yul Brynner filmed an anti-smoking spot that aired after his death from lung cancer.

Bill Hicks did a great bit about it in his 1991 special, Relentless.

A charming reminder that this sort of thing used to be considered genuinely outlandish rather than just ‘tech news’.

In 2014, Ad Age wrote that “the marketing, licensing and commercial use of dead celebrities is an estimated $3.0 billion business,” noting that “payments to the beneficiaries of departed icons utilized in commercials have grown by nearly 20% in the past two years.” The next paragraph in the article should hang in the Louvre:

So why, according to a recent survey conducted by our company of senior production executives at the top agencies, would 80% rather work with a live celebrity than a dead one? Aren't they, and their real-world demands, more problematic than working with a dead celeb, who can't insist you fly their trainer, vegan chef, hair and makeup people first-class to the set or banish brown M&M's from the craft table?

In 2015, Marketing Week reported that “MaxFactor has just named Marilyn Monroe as its new ‘global brand ambassador’, despite the fact that she died more than 50 years ago.”

As Rob Wright proclaims in the opening track (and musical chaser at the end of this week’s Weird) on Nomeansno’s album ‘Wrong’: “Have you heard the news? The dead walk.”

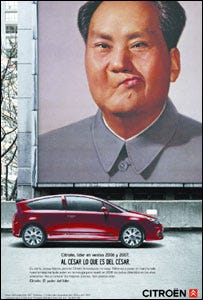

To date, it seems like only Mao is off-limits when it comes to endorsing your product in dead-face. In 2008, French car maker Citroen had to apologise for using a satirical image of the Chairman in an ad where the slogan was “At Citroen, the revolution never stops.” It stopped PDQ when China shocked them by not finding it funny. It was very difficult to find an image of the ad, which I’m sure has nothing to do with China wanting it gone, so apologies for the small size.[1]

I remember when I saw Audrey Hepburn digitally dug up and shoehorned into an advert - it creeped me out and made me wonder why anyone felt that they had the right to decide whether a dead celebrity would endorse a product, and to choose what that product might be. The ad was posted on YouTube when it came out, with the question “Creepy or Cool?” in the title.

Well, the votes are in, folks. Digital clones are officially cool, and they even fall into a grey area of the law that doesn’t guarantee you the right to prevent someone from creating and profiting from an AI clone of you.

It seems like Ari Folman nailed the future of stardom in his very trippy and under-appreciated 2013 film The Congress, except for the in-retrospect-optimistic view that production companies would actually pay the talent for the digitised versions of themselves. What a difference a decade makes.

Mohar Chatterjee at Politico got into this question in a recent article about the AI cloning of the famous psychologist Martin Seligman.

From the article:

The bot, cheerfully nicknamed “Ask Martin,” had been built by researchers based in Beijing and Wuhan — originally without Seligman’s permission, or even awareness.

The Chinese-built virtual Seligman is part of a broader wave of AI chatbots modeled on real humans, using the powerful new systems known as large language models to simulate their personalities online. Meta is experimenting with licensed AI celebrity avatars; you can already find internet chatbots trained on publicly available material about dead historical figures.

I checked out that last link and, hoo boy.

HelloHistory.ai offers chat-speriences with a selection of historical figures from Genghis Khan to Buddha to Marilyn Monroe to…Borat? How long before university students are quoting Che Guevara’s chat bot in their history essays instead of his actual writing? Defending a physics thesis because the AI ghost of Albert Einstein said the equations were “ze sheet”? Anyone? Bueller?

One touching use case for AI chat bots, especially ones cloned from the work of prominent psychologists, is therapy. What if anyone could just whip out their phone and text Freud to ask about their dreams, or get Jung to advise them on their relationship to their shadow? In its way, it does make sense.

However, we are already on the back foot regarding privacy with the technology that we have now. Our personal data is sloshing around all over, pooling in places we didn’t anticipate, being captured and consolidated and traded by companies we have never even heard of, in order to be leveraged for purposes ranging from the prosaic (‘People who bought this also bought this’) to the problematic (identity thieves on the dark web). Your lawyer, your priest, and your shrink are meant to be the bastions of privacy. What happens when your sessions with a therapist are as accessible and legible to interlopers as your unencrypted emails? Governments have already staked a claim to your digital fingerprints, your chat logs, your phone records…what if they had not just the desire to know what you said to a professional in confidence in a therapeutic setting, but the technological means?

Well, since you asked…

China Will Section You

On March 12, 1957, Mao gave a speech at the Chinese Communist Party’s National Conference on Propaganda Work, in which he said:

The new social system … has to be consolidated systematically. To achieve its ultimate consolidation, it is necessary not only to bring about the socialist industrialization of the country and persevere in the socialist revolution on the economic front, but also to carry on constant and arduous socialist revolutionary struggles and socialist education on the political and ideological fronts.

Perhaps it is unreasonable to respond cynically, but something in me reads “socialist education” as a euphemism. The following comment from a Chinese psychiatric ‘patient’ about their ‘treatment’, quoted in a 2002 report from Human Rights Watch, does not disabuse me of that notion.

“My stay in hospital this time was just like being in a political study school – you cured both my physical illness and also my ideological sickness. I want to thank the Party for all the warmth and concern it has shown me.”

That last line could be straight out of a book by Orwell or Koestler.

Richard J. Bonnie, the author of this 2002 article published in the Journal of the American Academy of Psychiatry and the Law, titled Political Abuse of Psychiatry in the Soviet Union and in China, lays it out pretty straight.

Psychiatric incarceration of mentally healthy people is uniformly understood to be a particularly pernicious form of repression, because it uses the powerful modalities of medicine as tools of punishment, and it compounds a deep affront to human rights with deception and fraud. Doctors who allow themselves to be used in this way (certainly as collaborators, but even as victims of intimidation) betray the trust of society and breach their most basic ethical obligations as professionals.

Bonnie draws on and refers to the 2001 research of Robin Munro, “a London-based expert on China human rights issues who served as principal China researcher and director of the Hong Kong office of Human Rights Watch during 1989-1998”, who was also the author of the 2002 Human Rights Watch report quoted above:

Munro’s research indicates, convincingly in my view, that the Soviet system of forensic psychiatry was transplanted to China during the 1950s and 1960s, thereby placing a small subset of psychiatrists at the intersection of criminal prosecution and psychiatric confinement, and importing a smoothly oiled process by which psychiatrists found that most offenders referred for assessment lacked criminal responsibility and committed them for treatment without any adjudication or judicial oversight. Eventually, in the 1980s, China also established a system of maximum-security forensic hospitals (Ankang), modeled after the Soviet “special hospitals,” for confining offenders who present a “social danger.”

In 2022, Safeguard Defenders released a report on China’s use of psychiatric intervention to suppress dissent. Previous news coverage includes The Guardian in 2008 and Voice of America in 2020.

All of which is to say, if you’re in China, you’re using an app or logged chat to tell your therapist about what’s on your mind, and what’s on your mind happens to be that you are not fully joy-joy with the Party, then the chances are high that, as my dear departed grandfather used to say, the men in the white coats will come and take you away.

Would you trust your government not to read a record of your deepest thoughts and emotions if it was accessible in a digital form?

Punk Chaser

Now that you’ve had the shot(s), here’s the chaser as promised.

The new episode of the podcast drops this Sunday, so look out for it.

Stay sane out there, friends.

[1] That’s what Mao said. Ba dum tss.