The Weekly Weird #54

Deep Eek, make mammoths great again, robots didn't kill anyone, Covid-19: Origin Story, the global trust bust, the EU poops on speech

Howdy ho, fellow Weirders! Once again we convene to survey the scene, figure out what it all means, vent our spleens, look past the sheen, and hopefully exceed the potential of our genes…

Yeah, that’s right, we got rhymes up in here!

Up-fronts:

Colossal Biosciences Inc., a company focused on the “functional application of advanced gene editing technology aimed at rebuilding the DNA of lost megafauna and other creatures that had a measurably positive impact on our fragile ecosystems,” i.e. de-extinction, “is on track to produce a woolly mammoth calf born to a surrogate elephant mother by late 2028,” according to Bloomberg. Colossal has raised nearly half a billion dollars in cash and is valued at over $10 billion. The company was founded by tech entrepreneur Ben Lamm and Professor George Church of Harvard University (our guest from Episode 111).

A Reuters “Fact Check” found “No evidence that four AI robots killed 29 scientists in Japan or South Korea.” The claim, made in a viral video by ufologist Linda Moulton Howe, was that “lab workers deactivated two of the robots, took apart the third, but the fourth robot began restoring itself and somehow connected to an orbiting satellite to download information about how to rebuild itself even more strongly than before.” The Robotics Policy Office of the Japan Ministry of Economy, Trade and Industry deadpanned to Reuters when asked about the alleged incident: “To our knowledge there is no basis in fact regarding the matter you inquired about.” The Japanese are so polite that even when they say “This is bullshit,” it sounds genteel.

A team at the Indian Institute of Science (IISc) have developed “a type of semiconductor device called Memristor, but using a metal-organic film rather than conventional silicon-based technology.” The neuromorphic innovation, “when integrated with a conventional digital computer, enhances its energy and speed performance by hundreds of times, thus becoming an extremely energy-efficient ‘AI accelerator’.” The researchers believe that, subject to scaling, “the technology could enable the most large-scale and complex AI tasks – such as large language model (LLM) training – to be done on a laptop or smartphone, rather than requiring a data centre.”

Crypto bros are turning puce from holding their breath over the “will they/won’t they?” question of a Strategic Bitcoin Reserve in the United States. Wyoming Senator Cynthia Lummis (“Lummis rhymes with hummus” according to her X profile) enthused the faithful with a ‘laser eyes’ X post that humorously misquoted the opening line of the song Sympathy For The Devil by the Rolling Stones.

Is Sympathy For The Devil really the song you want associated with your now-declared allegiance to the crypto community, Senator?

Curious individuals visiting YouTube to search for Elon Musk’s “awkward gesture” have been getting an unexpected search result featuring the British Royal Family, specifically a film from either 1933 or 1934 featuring the Queen Mother and her daughters, one of whom is the future Queen Elizabeth II, making decidedly less arguable gestures. When The Sun published the film and an article about it in 2015 (under the characteristically understated headline Their Royal Heilnesses), a Palace spokesperson said: “Most people will see these pictures in their proper context and time…To imply anything else is misleading and dishonest.” Plus ça change, eh?

Covid-19: Origin Story

Politico have published a story on the CIA’s recent admission that “it’s more likely a lab leak caused the Covid-19 pandemic than an infected animal that spread the virus to people,” news that is presumably only shocking to the readers of Politico, and the other media outlets that insisted for years that it was ludicrous, problematic, partisan, and even racist to consider the possibility that the Wuhan Institute of Virology, a known centre of bat coronavirus research and China’s only BSL4 facility, might have been involved in creating the pathogen.

Still, Politico couldn’t quite bring themselves to just report news, as the following two paragraphs show. Spot the lexical sleight of hand:

Congressional Republicans have embraced the unproven lab leak theory, pointing to how the first cases of Covid-19 were reported in Wuhan, where a virology lab was researching coronaviruses at the time.

Still, many virologists have published studies supporting a likely natural origin, arguing that the virus may have spread amongst people who were exposed to animals infected with the virus that were being sold at a wet market in the city.

Funny, right? There is no conclusive evidence accepted by a preponderance of experts or officials that determines the origin of the virus. So while it’s valid to say that the ‘lab leak theory’ is “unproven,” it is deliberately misleading to refer to the alternative, ‘natural origin’, as “likely” rather than “also-unproven”.

The Press Gazette, in early 2024, wrote up a useful summary of the tussle over Covid’s origin story. Their overview:

Press Gazette contacted 20 journalists, read 165 academic journal articles and news reports, and sifted through nearly 4,000 pages of leaked and FOIAed documents to create this retrospective of how the Wuhan lab story did, and did not, get covered.

We found:

Credible sources urged The New York Times to investigate the Wuhan lab, but the paper baulked

Scientists advising National Institute of Allergy and Infectious Diseases director Anthony Fauci told the NYT that the leak theory was “false” even though they had seriously considered it

Academic papers published in The Lancet and Nature Medicine shut down the lab-leak theory without hard evidence

Science journalists were sometimes “captured” by their sources and thus avoided investigating the story

Reporters feared false accusations of racism if they proposed investigating the lab.

The Press Gazette, in a manner that Politico might benefit from noting, lays it out:

There are two threads to the lab-leak theory: One argues it might have been a sample collected from nature — perhaps from one of China’s many bat caves — that escaped from the lab by accident. The other posits that the lab was manipulating samples it had collected into more infectious “man-made” versions, one of which also escaped, probably by accident.

To be clear: There is no scientific proof that SARS-CoV-2 (the official name of the virus) leaked from the Wuhan lab.

Rather, many prominent scientists say, the virus likely emerged from nature. But there is no definitive proof of the “natural origin” theory, either. On both sides, the jury remains out.

In the meantime, the circumstantial evidence linking the Wuhan lab to the pandemic continues to emerge. Thousands of pages of emails, Slack messages, leaked documents, intelligence reports, and regretful testimony from senior US officials have been unearthed by reporters. Much of it demonstrates that government officials and the scientists advising them regarded the theory that SARS-CoV-2 leaked from the Wuhan lab more seriously than they admitted in public.

In a story published on the same day as [former British cabinet member Michael] Gove testified, former US Director of National Intelligence John Ratcliffe said: “The most likely origin of Covid-19, of the Wuhan virus… was a lab leak at the Wuhan Institute of Virology.”

The story referred to above, from Sky News Australia, included a documentary featuring an interview with Ratcliffe, who also said of the lab leak, in a Rumsfeldian turn of phrase, “It's certainly a probability and it's probably a certainty.”

During the pandemic, I was (and remain) intellectually curious about the truth of the matter without being politically invested in any particular answer. The degeneration of what should be a matter of extreme global interest into lazy mud-slinging, inexplicable incuriosity, and partisan bickering has been a deflating spectacle to behold. Perhaps we’ll never know the bedrock facts about where Covid came from. Is it possible that we could at least agree to ask questions about the virus, and accept the answers, with an interest in what they mean for the future health of the humans on our planet, rather than the parochial expediency of political gain or loss?

Deep Eek

The Chinese AI firm DeepSeek have burst onto the scene with what is, by all accounts, a better, cheaper AI model than anything from the current recognised industry leader, OpenAI.

According to Nature:

DeepSeek, the start-up in Hangzhou that built the model, has released it as ‘open-weight’, meaning that researchers can study and build on the algorithm. Published under an MIT licence, the model can be freely reused but is not considered fully open source, because its training data has not been made available.

“The openness of DeepSeek is quite remarkable,” says Mario Krenn, leader of the Artificial Scientist Lab at the Max Planck Institute for the Science of Light in Erlangen, Germany. By comparison, o1 and other models built by OpenAI in San Francisco, California, including its latest effort o3 are “essentially black boxes”, he says.

Krenn also told Nature that an “experiment that cost more than £300 with o1, cost less than $10 with R1,” making AI one more thing that can now be done more cheaply in China.

DeepSeek’s success comes at a time when China’s access to computer components is being limited by the United States for national security reasons, making it all the more striking (or worrying).

Part of the buzz around DeepSeek is that it has succeeded in making R1 despite US export controls that limit Chinese firms’ access to the best computer chips designed for AI processing. “The fact that it comes out of China shows that being efficient with your resources matters more than compute scale alone,” says François Chollet, an AI researcher in Seattle, Washington.

DeepSeek’s progress suggests that “the perceived lead [the] US once had has narrowed significantly,” wrote Alvin Wang Graylin, a technology expert in Bellevue, Washington, who works at the Taiwan-based immersive technology firm HTC, on X. “The two countries need to pursue a collaborative approach to building advanced AI vs continuing on the current no-win arms race approach.”

DeepSeek’s budget and hardware constraints led to a shift in approach that mimics OpenAI’s newer model, o1.

LLMs train on billions of samples of text, snipping them into word-parts called ‘tokens’ and learning patterns in the data. These associations allow the model to predict subsequent tokens in a sentence. But LLMs are prone to inventing facts, a phenomenon called ‘hallucination’, and often struggle to reason through problems.

Like o1, R1 uses a ‘chain of thought’ method to improve an LLM’s ability to solve more complex tasks, including sometimes backtracking and evaluating its approach. DeepSeek made R1 by ‘fine-tuning’ V3 using reinforcement learning, which rewarded the model for reaching a correct answer and for working through problems in a way that outlined its ‘thinking’.

Yes, LLMs are prone to lying, something that researchers and boosters cover up by giving it a less concerning name that sounds almost fun. If only humans could pull that fast one:

“No, Dad, I didn’t crash the car last night.”

“I was in the car with you when it happened. Why are you lying?”

“I’m not lying, I’m hallucinating.”

The cost of the achievement is also raising eyebrows, as per The Indian Express:

The Chinese AI company reportedly just spent $5.6 million to develop the DeepSeek-V3 model which is surprisingly low compared to the millions pumped in by OpenAI, Google, and Microsoft. Sam Altman-led OpenAI reportedly spent a whopping $100 million to train its GPT-4 model. On the other hand, DeepSeek trained its breakout model using GPUs that were considered last generation in the US. Regardless, the results achieved by DeepSeek rivals those from much more expensive models such as GPT-4 and Meta’s Llama.

The questions that come up are not just around global market dominance. Google Gemini and ChatGPT have both been on the receiving end of mockery and criticism for producing results that speak to a willingness to play fast and loose with historical facts in favour of a broader agenda.

China is no slouch in the “agenda” department, with a brutal history of stifling dissent, crushing opposition, and insisting that reality be warped to the preferences of a version of history rewritten by the Chinese Communist Party. Since DeepSeek was grown in China’s information orchard, will it produce tainted fruit?

Critics and experts have said that such AI systems would likely reflect authoritarian views and censor dissent. This is something that has been a raging concern when it came to the debate around allowing ByteDance’s TikTok in the US. While largely impressed, some members of the AI community have questioned the $6 million price tag for building the DeepSeek-V3. Additionally, many developers have pointed out that the model bypasses questions about Taiwan and the Tiananmen Square incident.

Shocker.

Humans do a perfectly good job of revising the CCP’s legacy of suffering and defending against what they consider attempts to “undermine Mao’s reputation.” The linked-to article, written by one such human in 2006, ends with the following paragraph, a Duranty-esque apologia that falls one Stalin short of a Holodomor denial:

If India’s rate of improvement in life expectancy had been as great as China’s after 1949, then millions of deaths could have been prevented. Even Mao’s critics acknowledge this. Perhaps this means that we should accuse Nehru and those who came after him of being “worse than Hitler” for adopting non-Maoist policies that “led to the deaths of millions.” Or perhaps this would be a childish and fatuous way of assessing India’s post-independence history. As foolish as the charges that have been leveled against Mao for the last 25 years, maybe.

So AI is going to do the same thing, big deal, right? Unless, that is, it becomes the foundation for all search queries, chatbot dialogue, and human learning, and so filters through into the worldview of the next generation and those that follow.

The Global Trust Bust

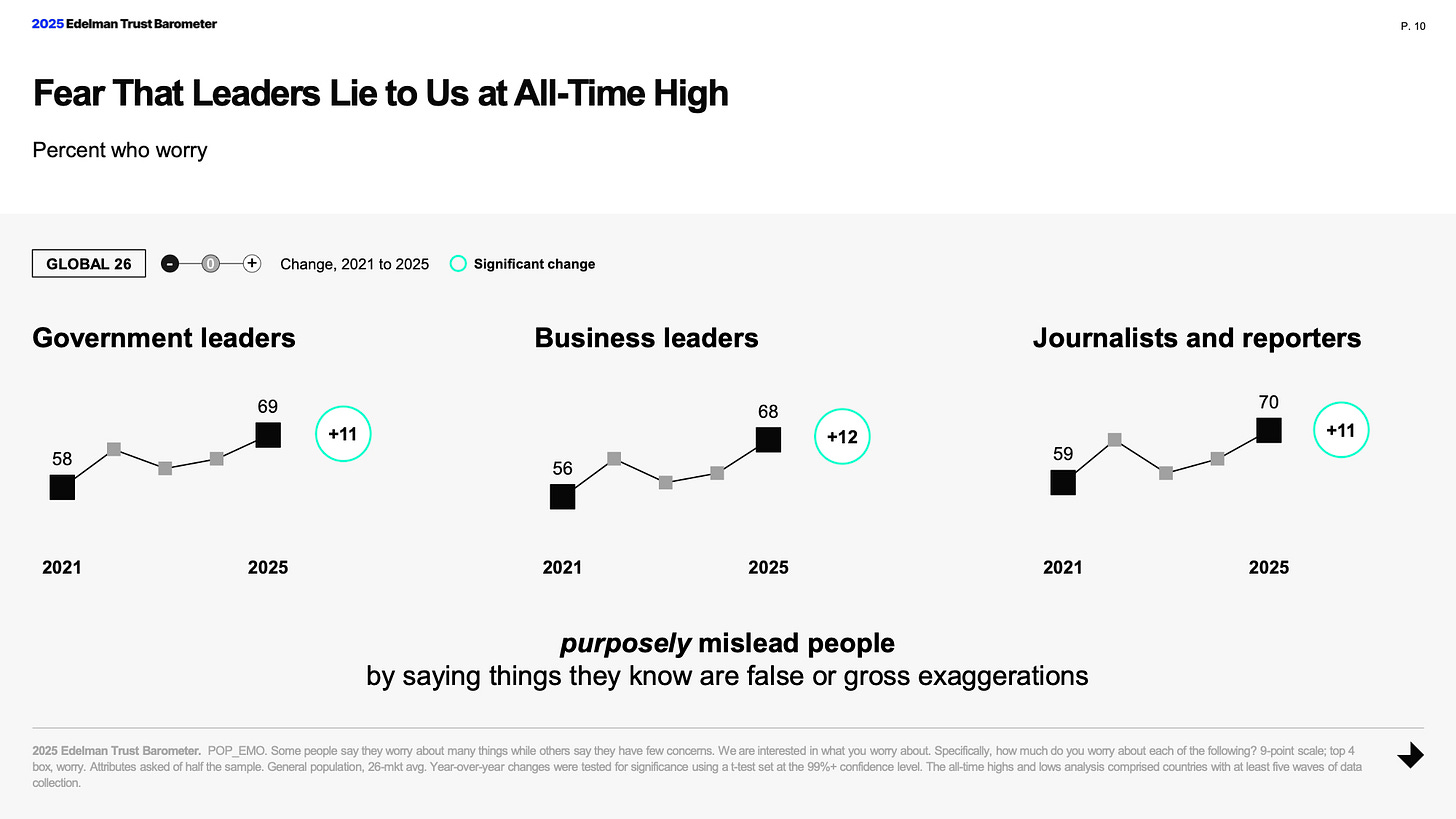

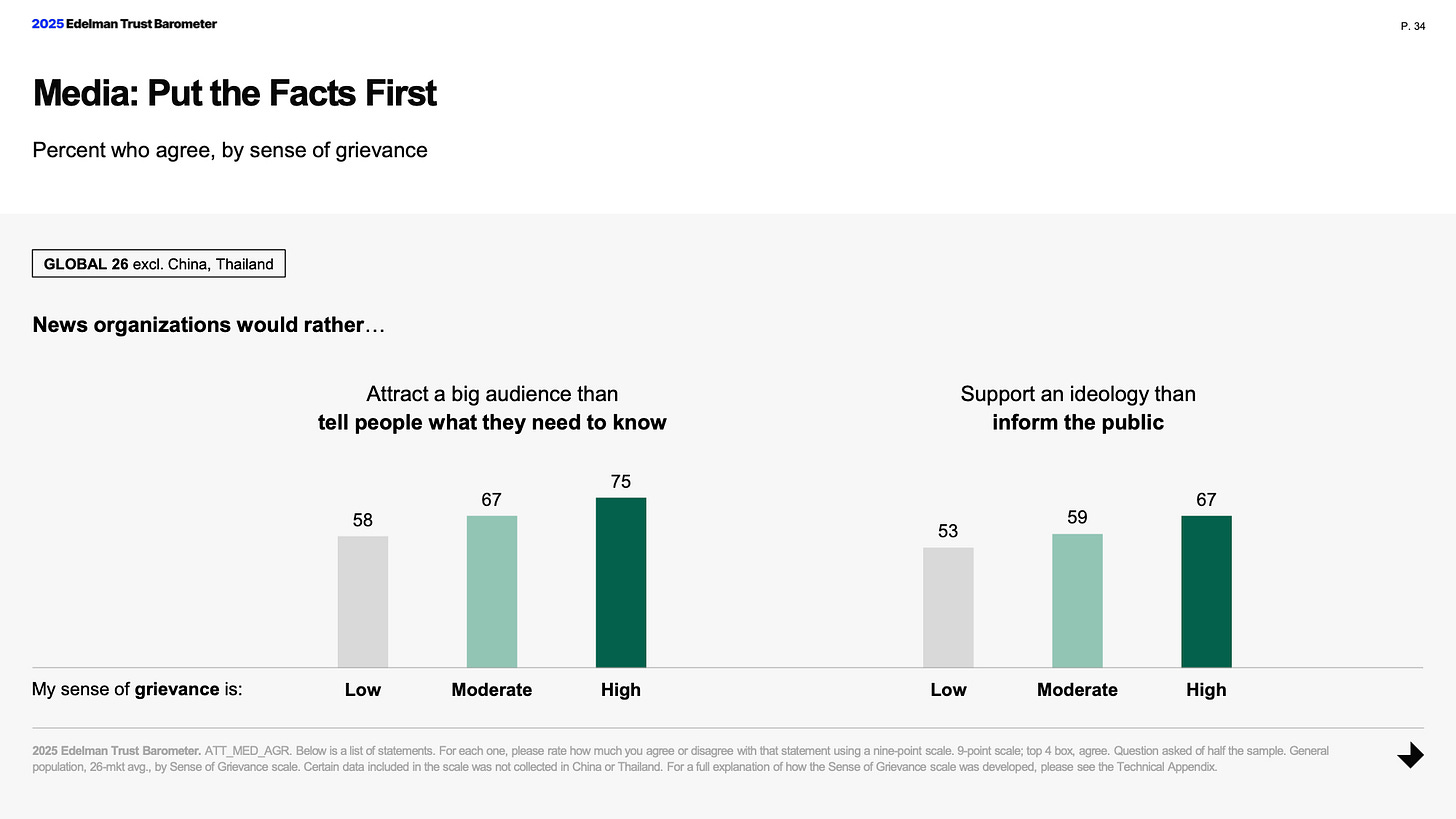

The global communications firm Edelman just published their 2025 Trust Barometer. After polling over 33,000 respondents in 28 countries, the votes are in: Trust is out.

More respondents than in previous years agreed that the people in charge are lying.

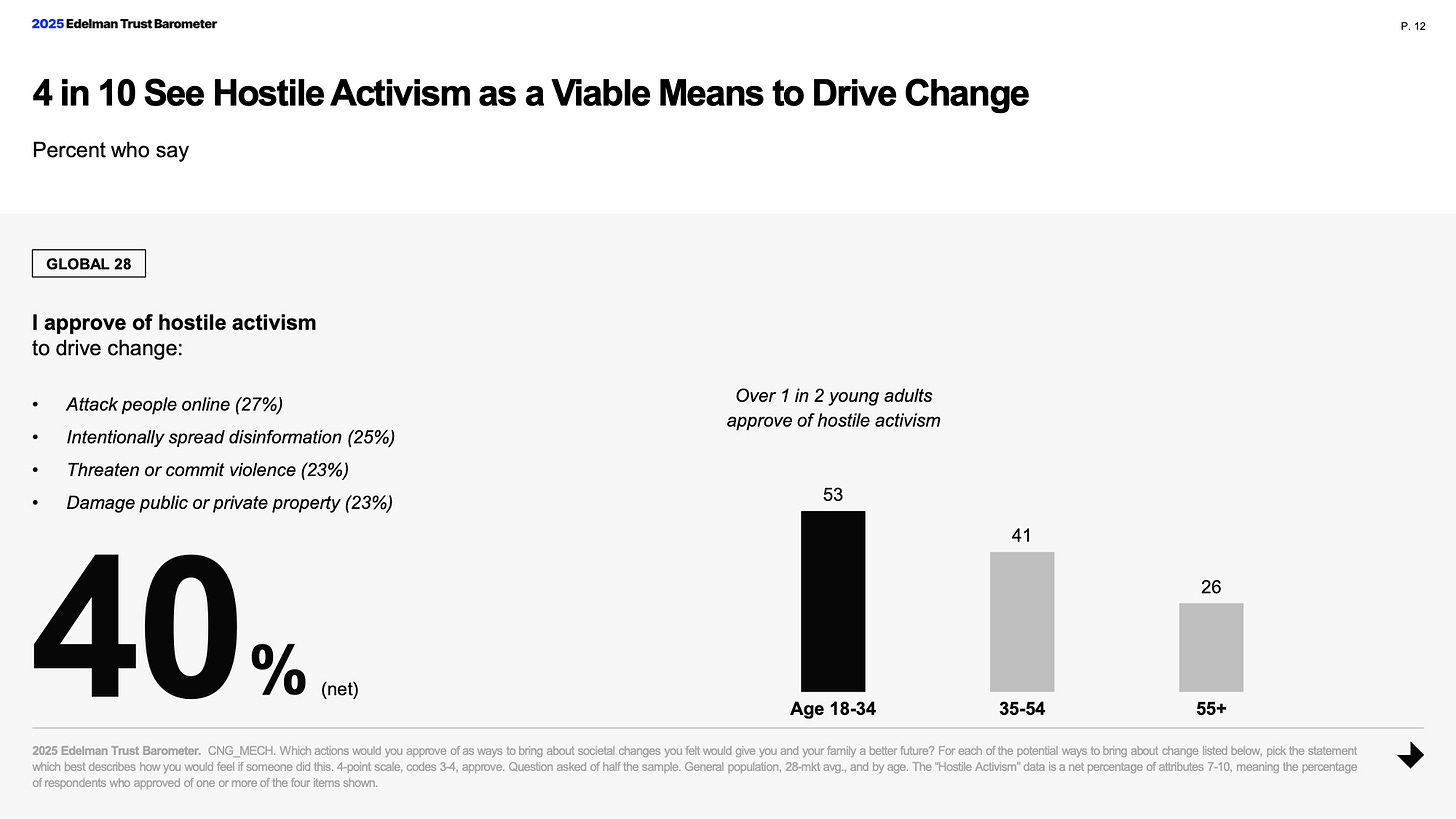

Hostility and violence are considered “a viable means to drive change” by 40% of respondents, with over half of adults aged 18-34 approving of “hostile activism.”

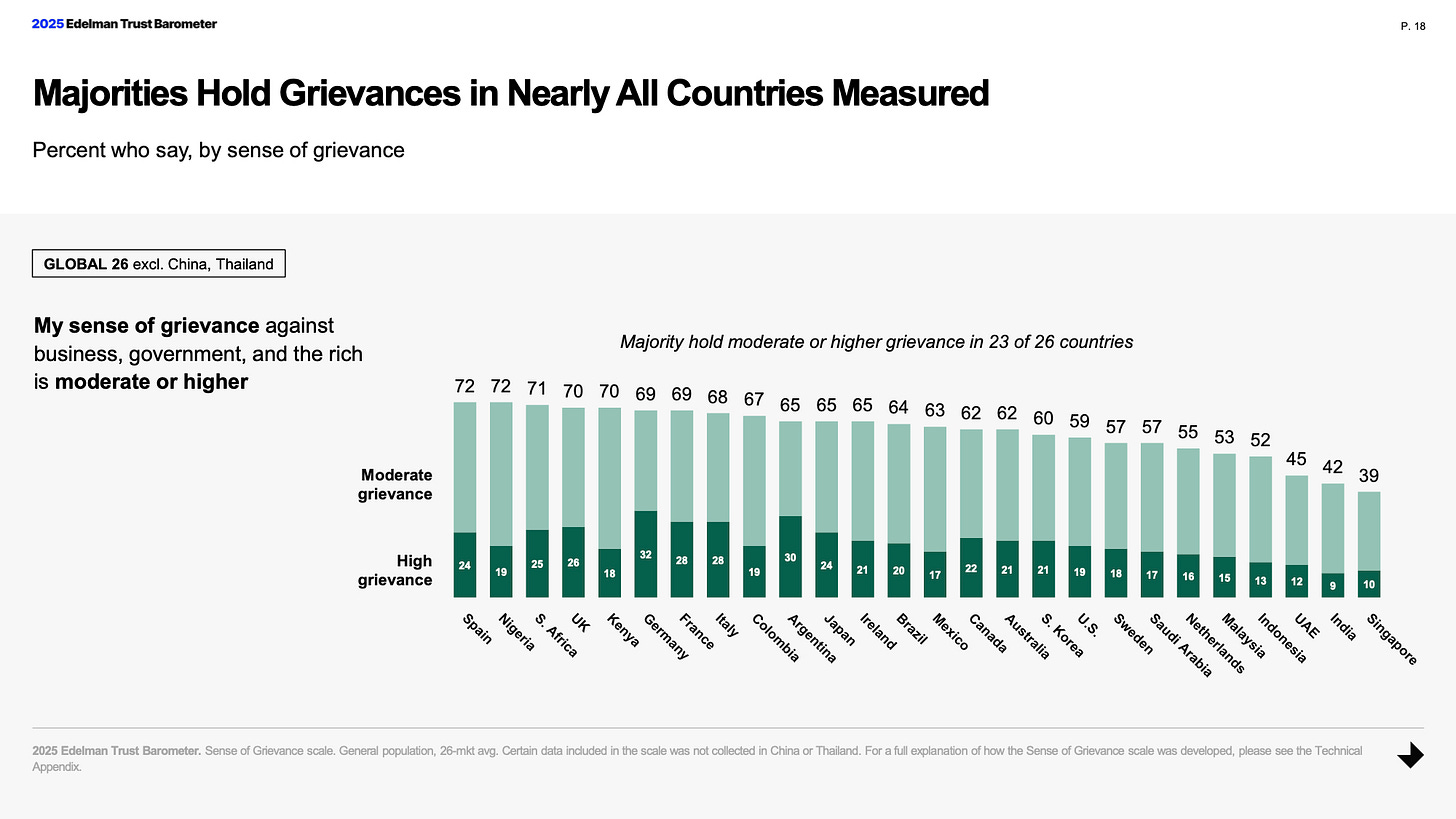

61% of respondents reported a moderate or high sense of grievance against “business, government, and the rich.”

63% of respondents agreed that “It is becoming harder to tell if news is from respected media or an individual trying to deceive people.”

Business was trusted in 15 out of 28 countries and NGOs were trusted in 11, while media and government were distrusted in 14 and 17 out of 28 countries respectively.

The EU Poops On Speech

Speaking of trust, the EU (trusted in only 8 out of 28 countries measured by Edelman) has revised its Code of conduct on countering illegal hate speech online, which since its inception in 2016 has been agreed to by Facebook, Microsoft, Twitter, YouTube, Instagram, Snapchat, Dailymotion, Jeuxvideo.com, TikTok, LinkedIn, Rakuten Viber, and Twitch.

The European Commission’s press release welcomed the upgrade to the code, which they are now calling Code of conduct+, saying (emphasis in the original):

Concretely, the signatories of the Code of conduct+ commit to, among other things:

Allow a network of ‘Monitoring Reporters’, which are not-for-profit or public entities with expertise on illegal hate speech, to regularly monitor how the signatories are reviewing hate speech notices: Monitoring reporters may include entities designated as ‘Trusted Flaggers’ under the DSA.

Undertake best efforts to review at least two thirds of hate speech notices received from Monitoring Reporters within 24 hours.

Engage with well-defined and specific transparency commitments as regards measures to reduce the prevalence of hate speech on their services, including through automatic detection tools.

Participate in structured multi-stakeholder cooperation with experts and civil society organisations that can flag the trends and developments of hate speech that they observe, helping to prevent waves of hate speech from going viral.

Raise, in cooperation with civil society organisations, users' awareness about illegal hate speech and the procedures to flag illegal content online.

So the ‘Trusted Flaggers’ from the DSA now have new friends, the ‘Monitoring Reporters’. It sounds like the lamest shoegaze festival ever.

While trust is falling in government and media, the EU government wants to team up with the media to decide what can and cannot be said online, because they believe they can be trusted.

They really should have hired Edelman as a consultant on this one.

Three poops. 💩💩💩

That’s it for this week’s Weird, everyone. As always, I hope you enjoyed it!

Outro music is Bonkers by Dizzee Rascal, a party anthem for those who think they’re free.

Stay sane, friends.

I wake up everyday, it's a daydream

Everything in my life ain't what it seems

I wake up just to go back to sleep
