The Weekly Weird #52

Charlie Hebdo 10 years on, Telegram bends over, Meta-of-fact, OpenAI's Operator, China's new humanoid robot, is our children dumber?

Well hello! It’s that time again, when we push the dystopian dinghy out onto the choppy waters of weirdness, the hull lapped by ludicrousness, as we bask in a brisk breeze of badinage. That’s right, your Weekly Weird is here!

Up-fronts:

A meta-analysis published this week “found significant inverse associations between fluoride exposure and children’s IQ scores,” prompting conspiracy theorists, concerned parents, and Robert F. Kennedy Jr. to cry “Back of the net!” in their best Alan Partridge voice. The good news is that “the association was null at less than 1.5 mg/L.” The bad news is that “the US Environmental Protection Agency’s (EPA’s) enforceable and nonenforceable standards for fluoride in drinking water are 4.0 mg/L and 2.0 mg/L, and the World Health Organization’s (WHO’s) drinking water quality guideline for fluoride is 1.5 mg/L.” All that aside, the IQ decrease found across studies was 1.14 to 1.63 points, so how much does it matter, really?

Over 27,000 acres of Los Angeles are burning as three separate fires go uncontained, in part because of a lack of water in local fire hydrants. Walking haircut Governor Gavin Newsom, responding to Anderson Cooper on CNN, blamed the fact that there was no water on “local folks” who need to “figure it out.” Charming. To any readers in Los Angeles, we hope you’re all safe, and that your homes and loved ones emerge unscathed.

As news circulates (mostly via smartphone) that the average smartphone user spent 4 hours and 37 minutes a day on their phone in 2024, the digital detox company Unplugged has built a depressing widget on its website that calculates how many years of your life you will spend on your phone, based on average life expectancy. It’s worse than you think. Enjoy!
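We don’t know exactly how Unplugged’s widget works, but the basic arithmetic is simple enough to sketch. This version assumes a constant daily screen time for the rest of your life and a life expectancy you supply yourself; the function name and the example age and lifespan are ours, purely for illustration.

```python
# Back-of-the-envelope "years on your phone" calculator.
# Assumptions (ours, not Unplugged's): screen time stays constant every day,
# and life expectancy is whatever hypothetical figure you pass in.

MINUTES_PER_DAY = 4 * 60 + 37  # 4 hours 37 minutes, the 2024 daily average


def years_on_phone(current_age: float, life_expectancy: float,
                   minutes_per_day: float = MINUTES_PER_DAY) -> float:
    """Years of remaining life spent on a phone at a constant daily rate."""
    remaining_years = max(life_expectancy - current_age, 0.0)
    fraction_of_day = minutes_per_day / (24 * 60)
    return remaining_years * fraction_of_day


if __name__ == "__main__":
    # Hypothetical reader: aged 30, with an assumed life expectancy of 80.
    print(f"{years_on_phone(30, 80):.1f} years")
```

For that hypothetical 30-year-old, it comes out at roughly 9.6 years, and that counts all 24 hours of the day. Count only waking hours and the share of your conscious life gets even grimmer.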

Let’s get into it…

Charlie Hebdo, 10 Years On

7 - 9 January 2025 marks the tenth anniversary of the massacre at Charlie Hebdo, the French satirical magazine that published a cartoon of the Prophet Muhammad, and the associated violence over the following days.

Media reporting on the attack at the time didn’t tend to share the actual image that is claimed to have sparked the violence, even while they held forth, as Vox did eloquently on the day of the attack, on the importance of ridicule in the face of extremism.

The magazine was not just criticized by Islamist extremists. At different points, even France’s devoutly secular politicians have questioned whether the magazine went too far. French Foreign Minister Laurent Fabius once asked of its cartoons, “Is it really sensible or intelligent to pour oil on the fire?”

It is, actually. Part of Charlie Hebdo’s point was that respecting these taboos strengthens their censorial power. Worse, allowing extremists to set the limits of conversation validates and entrenches the extremists’ premises: that free speech and religion are inherently at odds (they are not), and that there is some civilizational conflict between Islam and the West (there isn’t).

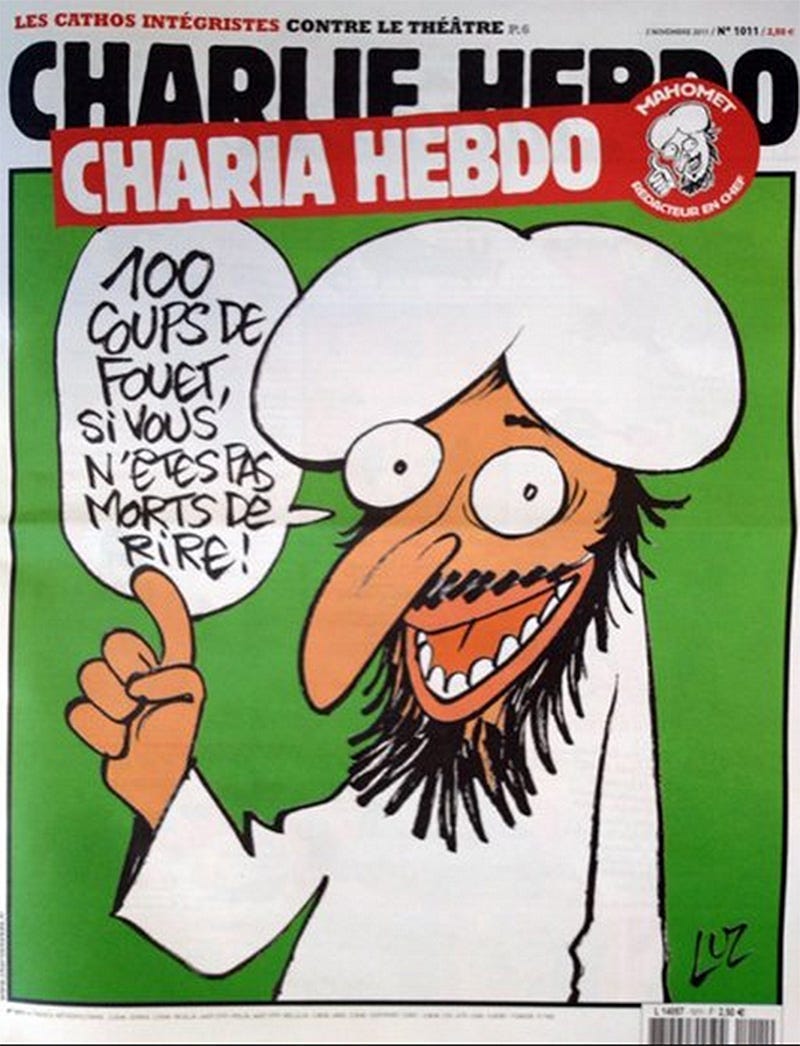

The alleged bone of contention was this ‘controversial’ magazine cover from November 2011 (see below), in which their ‘guest editor’ Muhammad, under the heading ‘Charia Hebdo’, promises the reader “100 lashes if you do not die laughing!”

The context was explained by the magazine: “To fittingly celebrate the victory of the Islamist Ennahda party in Tunisia ... Charlie Hebdo has asked Muhammad to be the special editor-in-chief of its next issue.”

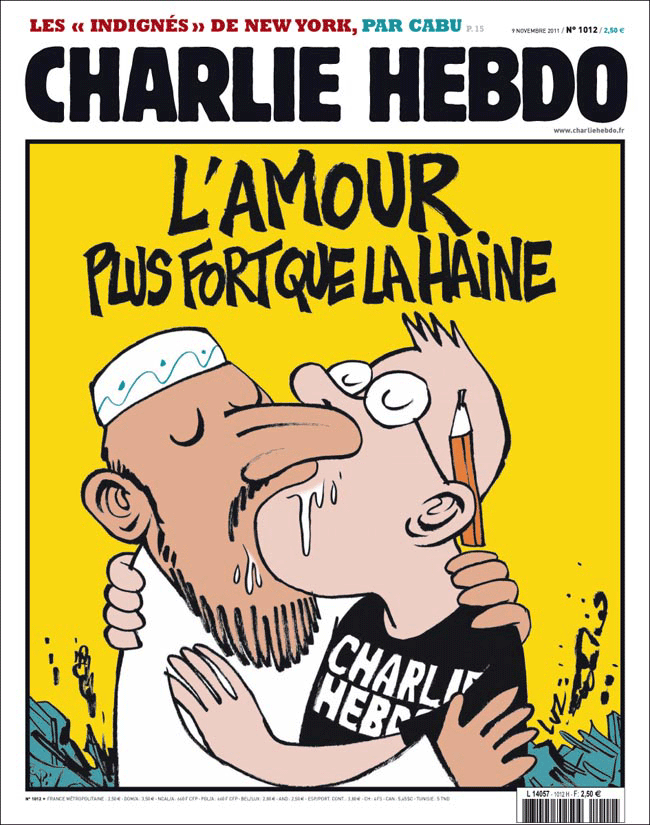

After the backlash to that cover, which included their website being hacked by “the Turkish hacker group Akıncılar” and their office being burnt down, the magazine offered up a conciliatory message: “Love is stronger than hate.”

From Vox again:

Yes, the slobbery kiss between two men is surely meant to get under the skin of any conservative Muslims who are also homophobic, but so too is it an attack on the idea that Muslims or Islam are the enemy, rather than extremism and intolerance.

Laurent Léger, a Charlie Hebdo staffer who survived the attack, told CNN in 2012, "The aim is to laugh. ... We want to laugh at the extremists — every extremist. They can be Muslim, Jewish, Catholic. Everyone can be religious, but extremist thoughts and acts we cannot accept."

As a reminder of why this isn’t just old news, you can watch this video from a month ago, in which British MP Tahir Ali asks the Prime Minister to reintroduce blasphemy laws in the UK, or you can listen to Episode 123 with Hatun Tash, in which she describes her experiences of being spat on, punched, and stabbed by religious extremists.

Telegram Bends Over

The 2024 arrest and detention of Telegram CEO Derek Zoolander (pictured above) seems to have worked a treat for the authorities.

404 Media reports that “[b]etween January 1 and September 30, 2024, Telegram fulfilled 14 requests ‘for IP addresses and/or phone numbers’ from the United States, which affected a total of 108 users,” but “for the entire year of 2024, it fulfilled 900 requests from the U.S. affecting a total of 2,253 users, meaning that the number of fulfilled requests skyrocketed between October and December.”

What does that mean in English? After long being considered the bad boy of social media apps when it came to collaborating with Der Staat, Telegram suddenly started handing over user data at a rate of knots some time in late 2024.
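If you want the numbers rather than the nautical metaphor, subtract the nine-month figures from the full-year totals. This sketch assumes both 404 Media figures are cumulative counts from the same reporting, so the difference is the October to December tally; the helper names are ours.

```python
# Telegram's fulfilled US data requests in 2024, as quoted by 404 Media.
# Assumption: both figures are cumulative, so full year minus Jan-Sep = Oct-Dec.

JAN_TO_SEP = {"requests": 14, "users": 108}
FULL_YEAR_2024 = {"requests": 900, "users": 2253}


def q4_figures(nine_months: dict, full_year: dict) -> dict:
    """Derive October-December figures from the two cumulative counts."""
    return {key: full_year[key] - nine_months[key] for key in full_year}


def monthly_rate(count: int, months: int) -> float:
    """Average fulfilled requests per month over a period."""
    return count / months


if __name__ == "__main__":
    q4 = q4_figures(JAN_TO_SEP, FULL_YEAR_2024)
    print(q4)  # {'requests': 886, 'users': 2145}
    before = monthly_rate(JAN_TO_SEP["requests"], 9)
    after = monthly_rate(q4["requests"], 3)
    print(f"{before:.1f} requests/month before vs {after:.1f} after")
```

That’s roughly 1.6 fulfilled requests a month for the first nine months, and about 295 a month for the last three.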

What else happened in late 2024?

A month after Durov’s arrest in August, Telegram updated its privacy policy to say that the company will provide user data, including IP addresses and phone numbers, to law enforcement agencies in response to valid legal orders. Up until then, the privacy policy only mentioned it would do so when concerning terror cases, and said that such a disclosure had never happened anyway.

Since “the vast majority of fulfilled data requests were in the last quarter of 2024, showing a huge increase in the number of law enforcement requests that Telegram completed,” it seems reasonable to infer that Telegram CEO Pavel Durov (pictured below) had a road to Damascus moment in the slammer. Funny, that.

My favourite part of the article is this nugget:

In the wake of that arrest, multiple cybercriminals indicated they would leave the platform…

Aww, isn’t that cute? Cybercriminals are the same as celebrities and uptight hacks: If the platform they’re on changes in a way they dislike, they take their ball and go home, or to Bluesky, or the dark web, or wherever.

Adorable.

Meta-Of-Fact

I know, I really had to reach for the pun. No, I’m not sorry. Yes, it will happen again.

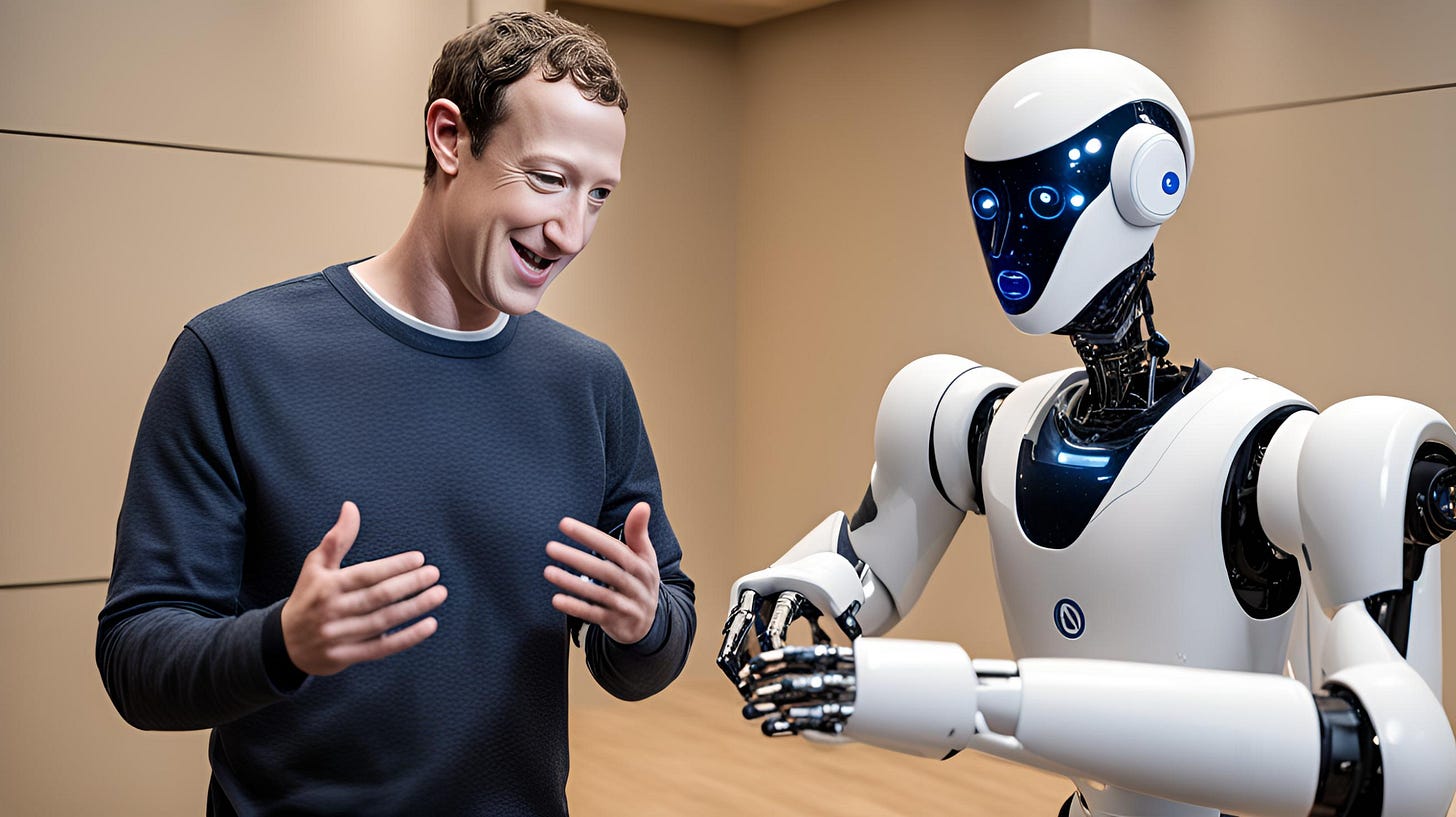

Meta CEO Mark Zuckerberg (pictured above trying to remember the theme songs to Superman and Police Academy one after the other[1]) posted a video on Facebook announcing that the social media site would be “restoring free expression on our platforms.” How, you might ask?

Here’s the Zuck:

More specifically, we're going to get rid of fact-checkers and replace them with Community Notes similar to X, starting in the US.

Did Zuckerberg party with Diddy and end up on a tape that got sold to Trump and Elon?

The Zuckbot continued:

After 2016, the legacy media wrote non-stop about how misinformation was a threat to democracy. We tried in good faith to address those concerns without becoming the arbiters of truth, but the fact-checkers have just been too politically-biased…

A Meta press release posted after the video went out included more on how they plan “to reduce the kind of mistakes that account for the vast majority of the censorship on our platforms.”

Experts, like everyone else, have their own biases and perspectives. This showed up in the choices some made about what to fact check and how. Over time we ended up with too much content being fact checked that people would understand to be legitimate political speech and debate. Our system then attached real consequences in the form of intrusive labels and reduced distribution. A program intended to inform too often became a tool to censor.

Further into the corporate bumf is a fun little statistic. By their own reckoning, 10-20% of the content being removed “may not have” violated their policies:

For example, in December 2024, we removed millions of pieces of content every day. While these actions account for less than 1% of content produced every day, we think one to two out of every 10 of these actions may have been mistakes (i.e., the content may not have actually violated our policies).
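Meta only says “millions” of removals a day, so the scale of “one to two out of every 10” depends on which millions you mean. Here’s a quick sketch using hypothetical daily totals of 2 and 5 million; those inputs are ours, not Meta’s.

```python
# How many wrongful removals could "one to two out of every 10" mean?
# The daily totals below are hypothetical: Meta only says "millions".


def mistaken_removals(removals_per_day: int, error_rate: float) -> int:
    """Removals per day that may have been mistakes at a given error rate."""
    return round(removals_per_day * error_rate)


for daily in (2_000_000, 5_000_000):  # hypothetical inputs, not Meta figures
    low = mistaken_removals(daily, 0.10)
    high = mistaken_removals(daily, 0.20)
    print(f"{daily:,} removals/day -> {low:,} to {high:,} possible mistakes")
```

Even at the low end, that’s hundreds of thousands of posts a day that, by Meta’s own admission, may not have broken any rules.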

Meta also admit they’ve been preventing discussions that have a rightful place in a digital town square (even if it is owned by a private company):

We want to undo the mission creep that has made our rules too restrictive and too prone to over-enforcement. We’re getting rid of a number of restrictions on topics like immigration, gender identity and gender that are the subject of frequent political discourse and debate. It’s not right that things can be said on TV or the floor of Congress, but not on our platforms.

Of course, achieving such a shift in policy requires drastic measures, and oh boy do they have those in mind.

[W]e will be moving the trust and safety teams that write our content policies and review content out of California to Texas and other US locations.

They have to move the operation out of California to find people who don’t dig censorship?

In response to Meta’s announcement that censorship is so last season, the International Fact-Checking Network, which is a thing I now know exists, called an “emergency meeting”, according to Business Insider:

The meeting is expected to draw between 80 and 100 attendees from the IFCN's network of fact-checkers, which spans 170 organizations worldwide. Not all the expected attendees are Meta fact-checking partners, though many of them have a stake in the program's future and its global implications.

The IFCN has long played a crucial role in Meta's fact-checking ecosystem by accrediting organizations for Meta's third-party program, which began in 2016 after the US presidential election that year.

The “crucial role” of accrediting “fact-checkers [who] have just been too politically-biased” was created right after the 2016 election?

As an aside, in 2012 the Guardian and others reported breezily and positively on Barack Obama’s campaign using “the power of Facebook to target individual voters to a degree never achieved before.”

It’s worth quoting at length:

At the core is a single beating heart – a unified computer database that gathers and refines information on millions of committed and potential Obama voters. The database will allow staff and volunteers at all levels of the campaign – from the top strategists answering directly to Obama's campaign manager Jim Messina to the lowliest canvasser on the doorsteps of Ohio – to unlock knowledge about individual voters and use it to target personalised messages that they hope will mobilise voters where it counts most.

Every time an individual volunteers to help out – for instance by offering to host a fundraising party for the president – he or she will be asked to log onto the re-election website with their Facebook credentials. That in turn will engage Facebook Connect, the digital interface that shares a user's personal information with a third party.

Consciously or otherwise, the individual volunteer will be injecting all the information they store publicly on their Facebook page – home location, date of birth, interests and, crucially, network of friends – directly into the central Obama database.

"If you log in with Facebook, now the campaign has connected you with all your relationships," a digital campaign organiser who has worked on behalf of Obama says.

Now compare that reporting with the Guardian’s 2018 coverage of the Cambridge Analytica story.

Spot the difference:

The data analytics firm that worked with Donald Trump’s election team and the winning Brexit campaign harvested millions of Facebook profiles of US voters, in one of the tech giant’s biggest ever data breaches, and used them to build a powerful software program to predict and influence choices at the ballot box.

A whistleblower has revealed to the Observer how Cambridge Analytica – a company owned by the hedge fund billionaire Robert Mercer, and headed at the time by Trump’s key adviser Steve Bannon – used personal information taken without authorisation in early 2014 to build a system that could profile individual US voters, in order to target them with personalised political advertisements.

It took them six years to get from awe at how the Obama campaign could use Facebook to “unlock knowledge about individual voters and use it to target personalised messages” to outrage at how dastardly it was of Trump’s campaign to “build a system that could profile individual US voters, in order to target them with personalised political advertisements.”

Are the two situations different? Yes, of course. To me, they’re both gross, but in different ways. The reporting, however, definitely makes Obama’s data hoovering sound awesome and fun and great, while Trump’s data hoovering sounds slimy and horrible and criminal. But it’s all data hoovering, even if some of it was done within the lines. Also, the Federal Trade Commission didn’t fine Facebook $5 billion and restructure the company when they helped Obama get elected. Just sayin’.

Aside over, back to Meta firing its third-party fact-checkers…

The punchline of the whole affair is this I Can’t Believe It’s Not Sarcasm Award winner from the New York Times:

“We didn’t actually nuke the content. We just told the company that did nuke the content that the content should be nuked, but we didn’t nuke it ourselves, so it getting nuked has nothing to do with us. Therefore, saying we were involved in the nuking is fake news.”

Fantastic.

As Tom Lehrer sang about Wernher von Braun, the former Nazi rocket engineer who went on to run NASA’s Marshall Space Flight Center:

“Once the rockets are up, who cares where they come down?
That’s not my department,” says Wernher von Braun.

OpenAI’s Operator

OpenAI, CEO Sam Altman’s attempt to help us all live in the Terminator movies, is very excited about its new product, an “AI agent” code-named Operator.

AI agents are built to take actions on a user’s behalf rather than just responding passively to queries. A neural network controlling a computer, or an email account, or a bank account, or an electricity grid - what could go wrong?

In a staff meeting on Wednesday, OpenAI’s leadership announced plans to release the tool in January as a research preview and through the company’s application programming interface for developers, said one of the people, who spoke on the condition of anonymity to discuss internal matters.

Sam Altman wrote on his blog about the advent of agents, and you know it’s super important because he used words like “paradigm” and “complex”.

First, here’s his not-totally-reassuring statement on building a company at the bleeding edge of technology:

There is no way to train people for this except by doing it, and when the technology category is completely new, there is no one at all who can tell you exactly how it should be done.

Or if it should be done, apparently.

Then he gets to the agents, with a bit about AGI (Artificial General Intelligence) to boot:

We are now confident we know how to build AGI as we have traditionally understood it. We believe that, in 2025, we may see the first AI agents “join the workforce” and materially change the output of companies.

But that’s patate piccole[2] for Altman and his merry band.

We are beginning to turn our aim beyond that, to superintelligence in the true sense of the word. We love our current products, but we are here for the glorious future. With superintelligence, we can do anything else. Superintelligent tools could massively accelerate scientific discovery and innovation well beyond what we are capable of doing on our own, and in turn massively increase abundance and prosperity.

This sounds like science fiction right now, and somewhat crazy to even talk about it. That’s alright—we’ve been there before and we’re OK with being there again.

So it’s full steam ahead towards unleashing computer superintelligence on us all, programmed by people who cannot be trained “except by doing it,” overseen by individuals for whom the phrase “moral hazard” was invented.

Oh, and did I mention that OpenAI is going broke and definitely has a financial reason to rush things to market in the hope that they can staunch the bleeding before they go under?

TechCrunch with more:

OpenAI isn’t profitable, despite having raised around $20 billion since its founding. The company reportedly expected losses of about $5 billion on revenue of $3.7 billion last year.

Expenditures like staffing, office rent, and AI training infrastructure are to blame. ChatGPT was at one point costing OpenAI an estimated $700,000 per day.

ChatGPT Pro, for which subscribers pay $2,400 a year, is losing money because of how heavily it is being used. Where did the price tag come from?

“I personally chose the price,” Altman wrote in a series of posts on X, “and thought we would make some money.”
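Put the TechCrunch figures side by side and the picture gets clearer. This sketch makes one big simplifying assumption of ours: the reported loss is simply costs minus revenue, with no other line items.

```python
# Rough arithmetic on the OpenAI figures TechCrunch reports.
# Simplifying assumption (ours): loss = costs - revenue, nothing else.

REVENUE_BN = 3.7                # reported revenue, $ billions
EXPECTED_LOSS_BN = 5.0          # reported expected loss, $ billions
CHATGPT_COST_PER_DAY = 700_000  # estimated daily cost "at one point", $
PRO_PRICE_PER_YEAR = 2_400      # ChatGPT Pro subscription, $

implied_costs_bn = REVENUE_BN + EXPECTED_LOSS_BN
spend_per_revenue_dollar = implied_costs_bn / REVENUE_BN
chatgpt_cost_per_year = CHATGPT_COST_PER_DAY * 365
pro_price_per_month = PRO_PRICE_PER_YEAR / 12

print(f"Implied costs: ${implied_costs_bn:.1f}bn")
print(f"Spent per $1 of revenue: ${spend_per_revenue_dollar:.2f}")
print(f"ChatGPT at $700k/day: ${chatgpt_cost_per_year / 1e6:.1f}m a year")
print(f"ChatGPT Pro: ${pro_price_per_month:.0f} a month")
```

On those assumptions, OpenAI spent around $8.7 billion, or about $2.35 for every dollar it brought in, and the $2,400 Pro plan works out to $200 a month.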

This is the smartest guy in the room? The tech entrepreneur who wants to rush towards ‘superintelligence’ and take us all with him? We’re trusting this guy not to open the Hellgate?

Sam Altman, with his billions, is “OK with being there.”

Are you?

Check out Episode 126 with Nikhil Suresh to hear about the lies, damned lies, and fraud of AI.

China’s New Humanoid Robot

Chinese robotics firm EngineAI just announced its new humanoid robot, the PM01, hot on the heels of its last humanoid robot, the SE01, which was announced only a few months ago.

Here’s a lovely matryoshka doll of weirdness for you: A video of an AI-generated presenter describing and reviewing the features of the new AI robot, which can be yours for “only” $12,000. Bargain!

That’s it for this week’s Weird, everyone. As always, I hope you enjoyed it.

Outro music is “Time Is Running Out” by Muse, dedicated to all the smartphone users (and abusers) out there, waving away the few precious years left on this planet with a swipe of their finger.

Stay sane, friends!

[1] Editor’s Note: Try it. It’s impossible. The human mind can only recall one tune or the other, but not both one after the other.

[2] Small potatoes, but in Italian. We’re a cultured bunch here at the Weird.