The Weekly Weird #5

A chess robot breaks a child's finger, scientists poop on speech, Poland liberates media by liquidating it, The New York Times vs. OpenAI, SHAFT, and bye bye 2023

Greetings all. What a week!

First of all, a very warm welcome to all our new subscribers. We’ve had lots of people signing up, thanks to their obviously-excellent taste and the generous support of CJ Hopkins, who features in Episode 103 which dropped on Christmas Eve and now includes a transcript on the Substack page. If you haven’t listened to it already, please do. We’re now over 200-strong and rising, after only three episodes. I’m very grateful for your support; it makes the prospect of 2024 positively inviting.

Tweet of the Week

If only you were wrong, MikeOS. If only…

Since this is the final Weekly Weird of 2023, and our world continues to provide more dystopian material than we could possibly cover, let’s press on with all possible dispatch.

Chess Robot Breaks Child’s Finger

Poor kid. You’d think a robot with a sensor able to read a chess board and manipulate a chess piece would have a built-in fail-safe for human hands, right?

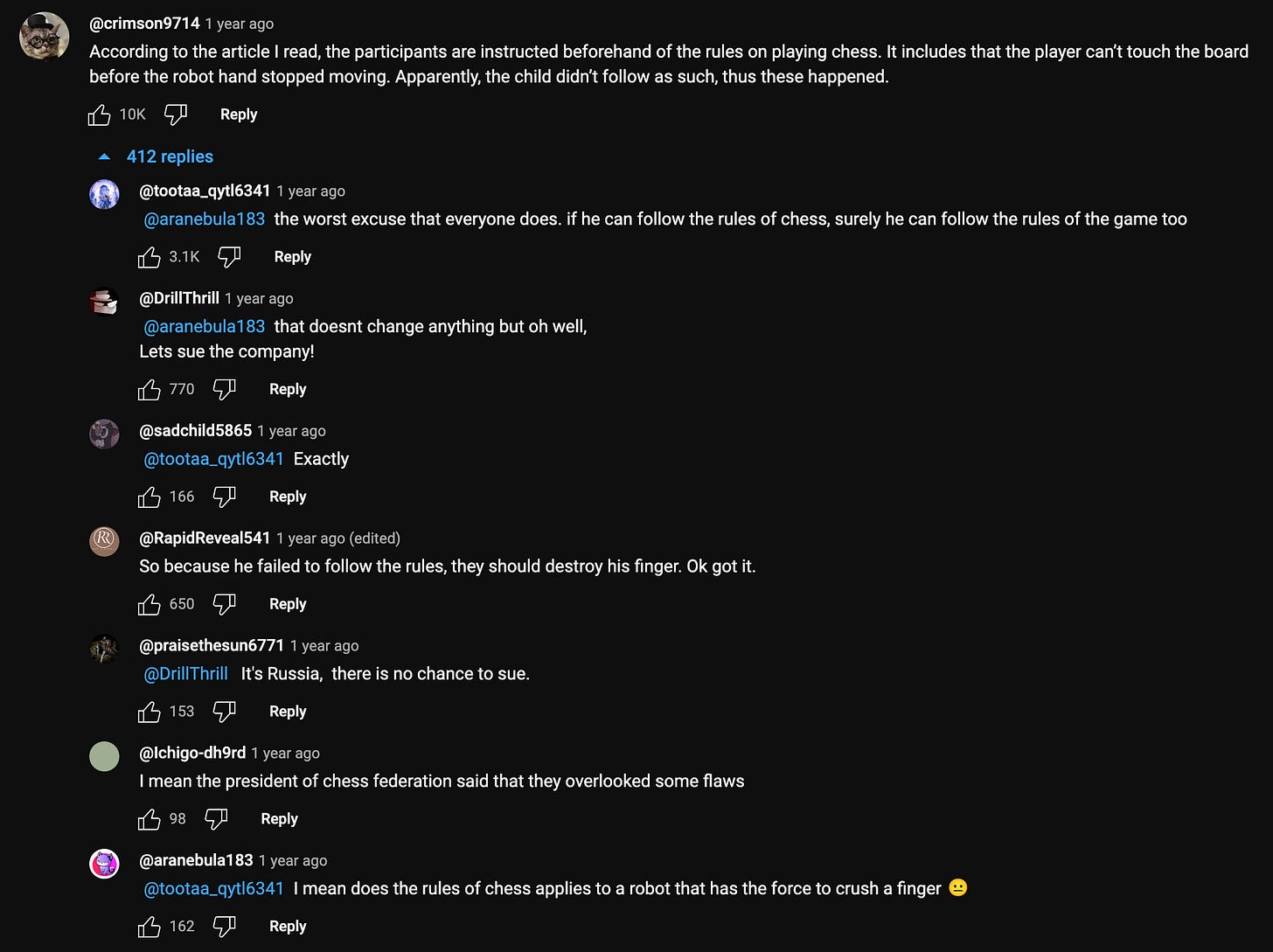

No YouTube comment section is worth reading, but skimming this one gave me a whiff of the moral dilemmas we’ll face in the future as robots become more autonomous and widespread.

Is it the kid’s fault for not following instructions? Is it the fault of the chess association for using a robot that could do something like that? The company that made the robot? The software company that wrote the code? How do we assign responsibility and liability in a world of autonomous mechanical entities interacting with humans who are fragile, inattentive, and litigious?

Fun fact, by the way: The word ‘robot’ was introduced by the Czech author Karel Čapek in his 1920 play R.U.R. (Rossum’s Universal Robots), though he credited his brother Josef with coining it. He also wrote a fantastic satirical dystopian novel called ‘War With The Newts’, which I’d love to cover on the podcast; I’ve been searching for a suitable expert to discuss it with.

In other chess news, a Chinese chess champion has been stripped of his title and prize money for defecating in a bathtub and “has been accused of cheating using a communication device similar to anal beads.” Besides qualifying as weird, this also hits a dystopian note because, from The Independent:

The Chinese Xiangqi Association (CXA) on Monday announced that Mr Yan would be stripped of his title and have his prize money confiscated for “disrupting public order” and displaying “extremely bad character”.

While this might be another way of saying “bringing the sport into disrepute”, which is often a reason for athletes getting disciplined, it’s a bit loaded coming from a country with a well-documented, pervasive, and intrusive social credit system. The unfortunate bathtub-defecator may find himself unable to board a plane or train, or get his children into certain schools, as a consequence of what sounds like a poorly-judged drunken night out. We’ll cover China’s social credit system at greater length soon - it’s a deeper dive than a weekly summary can handle.

Speaking of defecation…

Scientists Poop on Speech

A study titled “Prosocial motives underlie scientific censorship by scientists”, published in November this year, looks at the ways in which scientific research, publication, and communication are influenced and often held back by actual or expected censorship, leading to the chilling of inquiry, self-censorship, and other things that are super-desirable if you’re trying to understand and describe reality.

Steve Stewart-Williams, one of the study’s 39 authors, posted an explainer on his Substack which is well worth a read.

The study gives a clear and distinct definition of scientific censorship:

We define scientific censorship as actions aimed at obstructing particular scientific ideas from reaching an audience for reasons other than low scientific quality. Censorship is distinct from discrimination, if not always clearly so. Censorship targets particular ideas (regardless of their quality), whereas discrimination targets particular people (regardless of their merit).

The study further breaks down censorship into hard and soft varieties.

Hard censorship occurs when people exercise power to prevent idea dissemination. Governments and religious institutions have long censored science. However, journals, professional organizations, universities, and publishers—many governed by academics—also censor research, either by preventing dissemination or retracting postpublication. Soft censorship employs social punishments or threats of them (e.g., ostracism, public shaming, double standards in hirings, firings, publishing, retractions, and funding) to prevent dissemination of research. Department chairs, mentors, or peer scholars sometimes warn that particular research might damage careers, effectively discouraging it. Such cases might constitute “benevolent censorship,” if the goal is to protect the researcher.

Elaborating on this distinction, the authors then lay out where scientific censorship sits, emphasis mine.

Contemporary scientific censorship is typically the soft variety, which can be difficult to distinguish from legitimate scientific rejection. Science advances through robust criticism and rejection of ideas that have been scrutinized and contradicted by evidence. Papers rejected for failing to meet conventional standards have not been censored. However, many criteria that influence scientific decision-making, including novelty, interest, “fit”, and even quality are often ambiguous and subjective, which enables scholars to exaggerate flaws or make unreasonable demands to justify rejection of unpalatable findings. Calls for censorship may include claims that the research is inept, false, fringe, or “pseudoscience.” Such claims are sometimes supported with counterevidence, but many scientific conclusions coexist with some counterevidence. Scientific truths are built through the findings of multiple independent teams over time, a laborious process necessitated by the fact that nearly all papers have flaws and limitations. When scholars misattribute their rejection of disfavored conclusions to quality concerns that they do not consistently apply, bias and censorship are masquerading as scientific rejection.

In a section headed ‘Consequences of Censorship’, I found something which anchored why I felt this was relevant for us here.

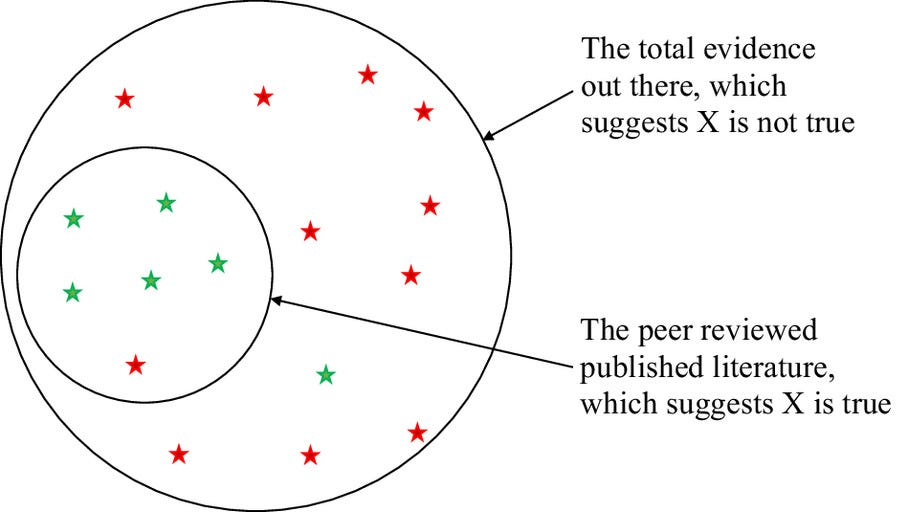

There is at least one obvious cost of scientific censorship: the suppression of accurate information. Systematic censorship, and thus systematic misunderstandings, could emerge if a majority of scientists share particular preferences or prejudices that influence their scientific evaluations. Fig. 2 illustrates how the published literature could overwhelmingly indicate that X is True, even if X is more often Not True. If social processes align to discourage particular findings regardless of their validity, subsequent understandings of reality will be distorted, increasing the likelihood of false scientific consensus and dysfunctional interventions that waste valuable time and resources for no benefit or possibly even negative consequences. Scientific censorship may also reduce public trust in science. If censorship appears ideologically motivated or causes science to promote counterproductive interventions and policies, the public may reject scientific institutions and findings.

This is Figure 2, referred to in the above quote. Hopefully the authors won’t be mad if I cross-post it here, with the caption below.

Fig. 2. The potential epistemic consequence of scientific censorship. Green stars are evidence that X is true. Red stars are evidence that X is not true. Assume that each piece of evidence is equally weighty. Censorship that obstructs evidence against X will produce a peer-reviewed literature that concludes that X is true when most likely it is not.

In a way, the graphic says it all. Where we look, or, more accurately, where we are permitted to look, will determine what we see. If there’s a better visual representation of why totalitarian systems demand control over what is perceived to be true, and why censorship is therefore highly dangerous for a free or even nominally free society, please let me know. I’d love to see it.
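For anyone who prefers arithmetic to stars, here is a minimal sketch of the same idea in Python. The numbers are entirely made up for illustration (40 findings for X, 60 against, and a hypothetical 75% of contrary findings kept out of print); none of them come from the study. The only assumption borrowed from the caption is that each piece of evidence counts equally.

```python
def published_share_true(evidence_for, evidence_against, censor_rate):
    """Share of the published evidence that supports X.

    censor_rate is the (hypothetical) fraction of evidence against X
    that never reaches print. Each piece of evidence counts equally.
    """
    surviving_against = evidence_against * (1 - censor_rate)
    return evidence_for / (evidence_for + surviving_against)


# Made-up ground truth: 40 findings say X is true, 60 say it is not.
uncensored = published_share_true(40, 60, censor_rate=0.0)
censored = published_share_true(40, 60, censor_rate=0.75)

print(f"No censorship: {uncensored:.0%} of published evidence supports X")
print(f"75% of contrary findings blocked: {censored:.0%} of published evidence supports X")
```

Under these invented numbers the evidence itself leans against X, yet the published record flips to a clear majority in favour once most of the contrary findings are filtered out.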

One of the possible approaches to the problem, proposed by the authors, is a re-evaluation of why, if, and how papers should be retracted.

Scholars should empirically test the costs and benefits of censorship against the costs and benefits of alternatives. They could compare the consequences of retracting an inflammatory paper to 1) publishing commentaries and replies, 2) publishing opinion pieces about the possible applications and implications of the findings, or 3) simply allowing it to remain published and letting science carry on. Which approach inspires more and better research? Which approach is more likely to undermine the reputation of science? Which approach minimizes harm and maximizes benefits? Given ongoing controversies surrounding retraction norms, an adversarial collaboration (including both proponents and opponents of harm-based retractions) might be the most productive and persuasive approach to these research questions.

As the study also mentions, it would be good to have some data on the number of retractions, the stated reasons, and possibly a measure of any relevant social opprobrium. If we’re going to have governments and international organisations wringing their hands about misinformation, it would be good to have stats on how and why the most basic building blocks of information, scientific data, become untrue.

Poland’s Government Liberates Media By Liquidating It

Poland recently had a change of government. The Law & Justice Party (PiS), who had been in charge for eight years, were the largest parliamentary party after a recent election but, lacking an absolute majority, were unable to form a government because nobody would work with them. Reasons for the shunning by potential coalition partners might include accusations of undermining the judicial system, turning state media into a government mouthpiece, and outlawing factual descriptions of Polish participation in the Holocaust (more context here).[1]

PiS’s prime minister, Mateusz Morawiecki, lost a confidence vote, so the Polish parliament decided that Donald Tusk, a former prime minister and European Council president who skews more towards the EU’s idea of dependable centrism, should form a coalition government to take over running the country.

‘Twas the week before Christmas when the Polish parliament, now working under a Tusk government, voted on a resolution to reform state media. Andrzej Duda, the President of Poland, who is broadly aligned with PiS, said “a political goal cannot constitute an excuse for violating or circumventing constitutional and statutory regulations.”

From Reuters:

Critics say [the National Council of the Judiciary and the state media apparatus] came under political influence during the nationalist Law and Justice (PiS) party's eight years in office, with 24-hour news channel TVP Info becoming an outlet for government propaganda.

The day after the vote on 19 December, the government started shutting down state media.

Yesterday, after a squabble over funding and reforms, the Polish government put the state’s television, radio, and news agency into liquidation. More here from DW.

A post-election post-mortem on the failure of PiS to win convincingly shared some mea culpas from media people confessing they had participated in a propaganda operation.

From the above story by Notes on Poland:

While every government in Poland since 1989 has exerted a degree of influence over public media, that has happened to an unprecedented extent under PiS, with TVP in particular becoming a mouthpiece for the party.

Things are afoot at the Circle-K. Tusk has vowed to save the state media by putting it into liquidation, while Duda claims he was trying to save it by refusing to approve a budget for it. Would the new government go through all this trouble just to have an objective state media? Is ‘objective state media’ an oxymoron?

Time will tell.

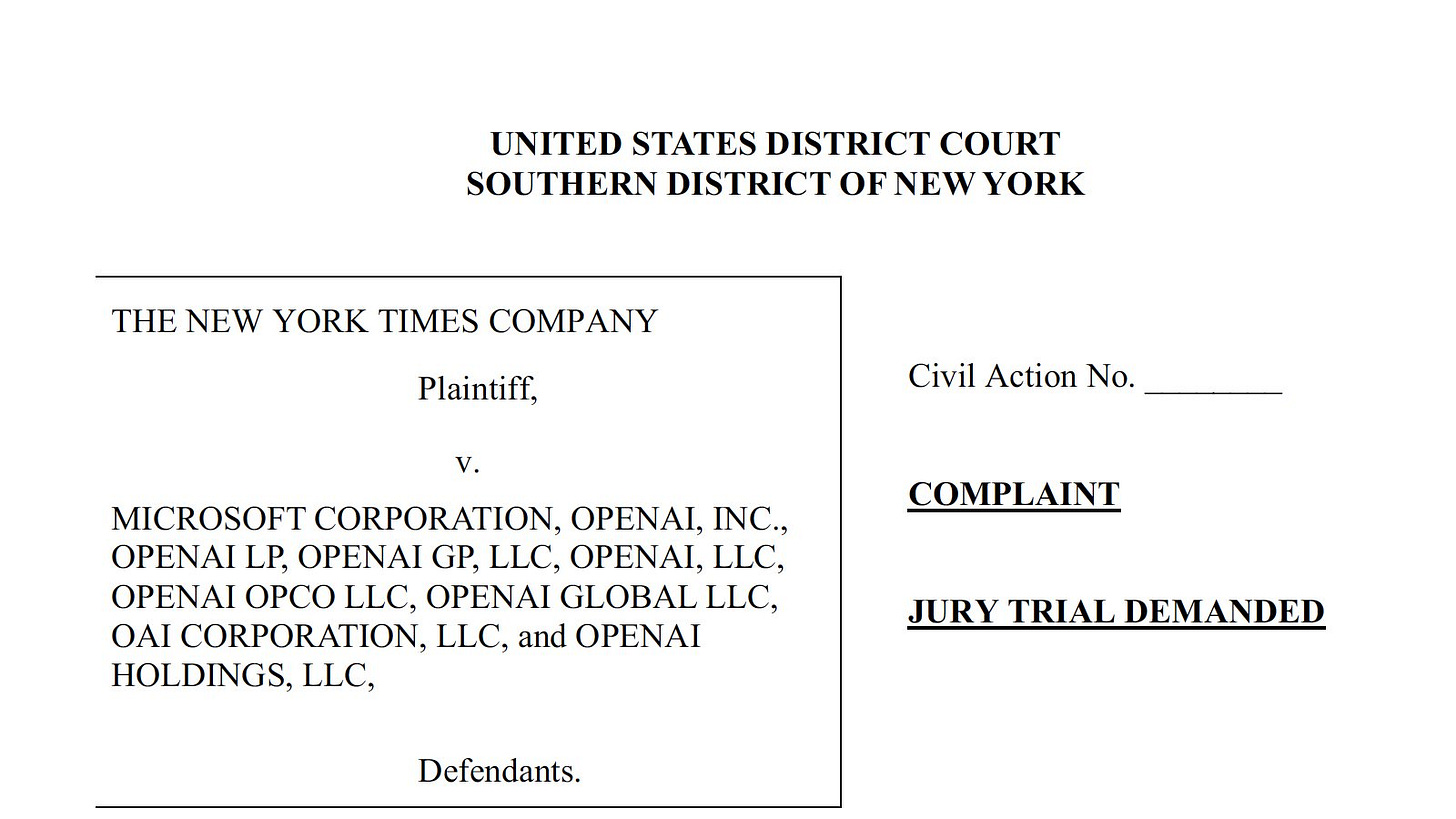

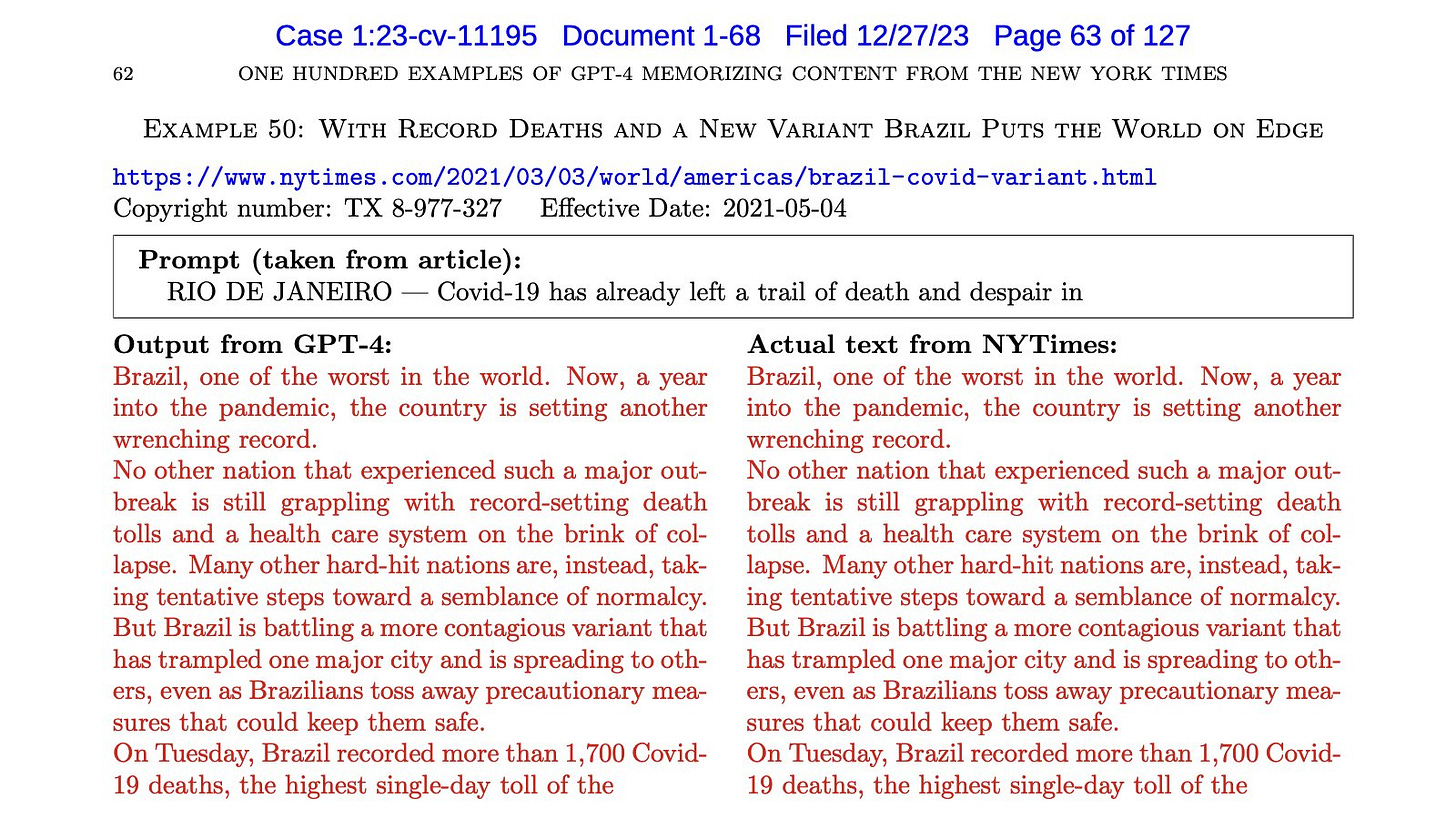

The New York Times vs. OpenAI

The paper of record is suing OpenAI for copyright infringement in what might prove to be a landmark case.

Large language models (LLMs) are trained by ‘scraping’ the web for content and assimilating it in order to learn how to formulate language. The material they scrape is often copyrighted but not always paid for, and the New York Times are not the first aggrieved party to file suit about this. However, they have filed what appears to be the most comprehensive suit to date, supported by 220,000 pages of exhibits and one hundred detailed examples of ChatGPT reproducing their content almost or entirely verbatim.

OpenAI apparently refused to settle the case, which may bite them badly if a judge finds against them. The suit explicitly links OpenAI’s for-profit model and multi-billion-dollar valuation to the unpaid exploitation of copyrighted material. Microsoft, which invested billions more in OpenAI not long after ChatGPT blew up the internet, gained a trillion dollars in market cap in 2023. High noon in the Southern District of New York.

This thread on X/Twitter by Jason Kint, CEO of Digital Content Next, which provided the above screenshots, is worth checking out to get a grip on the meaning, purpose, and possible ramifications of the case.

He ends it with a statement:

Generative AI developers should not be allowed to undermine fundamental copyright protections. We are pleased that The New York Times is standing up for the protection of original content as it plays a vital role in democracy and the everyday lives of regular Americans.

Whether or not AI training falls under ‘fair use’ or some other protection could determine the extent to which the US is hospitable to future developments in LLMs. Or it could just mean that the tech sector has to pay for something that it wants for free. Are there enough billions to go round?

One last thing before I exceed Substack’s email limit and your patience.

SHAFT

No, not the absolutely classic Isaac Hayes tune, although the film of him conducting the recording session is great, so I’ll share it at the end.

S.H.A.F.T. is an acronym that stands for Sex, Hate, Alcohol, Firearms, and Tobacco. It outlines the categories of text messages specifically regulated due to moral and legal issues and is monitored and enforced by the CTIA and the mobile carriers.

From 1 January 2024, T-Mobile is instituting fines for certain violations of its Terms of Service. SHAFT violations will cost you or your business $500.

From Yotpo again:

Although not technically a law, the S.H.A.F.T. guidelines should be taken seriously by anyone using SMS to communicate with consumers to comply with the CTIA best practices and carriers’ requirements.

There you have it. SHAFT should be taken seriously. I couldn’t agree more - Richard Roundtree is a legend (who sadly passed away in October this year at the age of 81).

I’d be interested in seeing any information on how exactly mobile carriers in the US and Canada are monitoring for or enforcing extralegal content restrictions.

Bye Bye 2023

That’s it for the Weekly Weird for this year. Thank you so much for reading and subscribing.

Have a wonderful break and a Happy New Year. I’ll check in with you next week, in 2024. The next episode of the podcast drops on January 7, so stay tuned!

Stay sane out there, my friends.

[1] During the Second World War, Poland was occupied by Nazi Germany and its government was replaced by the Nazis. The Polish Resistance, and other opposition to the Nazis by Polish people, is well-documented. Under the conditions at the time, however, many Polish people did collaborate with the Nazis, and the country had a long prior history of anti-Semitism as well, which did not disappear. The ‘national pride’ argument from Duda and PiS was more or less that Nazi collaboration was forced upon the Polish people, so referring to concentration camps like Auschwitz as ‘Polish’ just because they were physically in Poland is unfair.