The Weekly Weird #28

Apple spies, buy with your eyes, if it flies it dies, going Pete Tong in Hong Kong, AI Steve, the EU builds a twin Earth and therefore reality is a simulation (maybe)

Welcome back to the wondrous weird world of what’s-happening! Yet another avalanche of awful has tumbled down the mountain of madness over the last seven days, and we’re here to dig our way out of it or soil ourselves trying.

Before we get started, just a quick reminder to check out Episode 115 of the podcast, featuring Bjørn Karmann, a Danish inventor who created a lens-less camera that uses AI to generate images of what it ‘thinks’ you’re seeing.

Onwards!

Apple Spies

At this week’s developer conference, Apple announced a new feature to be rolled out across its ecosystem, called Apple Intelligence. In partnership with (and according to) OpenAI, the deal will “integrate ChatGPT into Apple experiences.”

You all thought you had devices, phones, computers. Wrong. You’re having an experience. That thing you buy from a major company at a premium rate? It’s something they give you, not something you own. You’re welcome. What a way to make you sound like the recipient of a gift rather than the buyer of a product. Hang-gliding, rock climbing, iPhone, all identical. Experiences, see?

Definitely something Partridge would have filed under abusage. Anyway, lexical rage over.

From Apple:

Apple Intelligence is deeply integrated into iOS 18, iPadOS 18, and macOS Sequoia. It harnesses the power of Apple silicon to understand and create language and images, take action across apps, and draw from personal context to simplify and accelerate everyday tasks.

OpenAI gushed in a similar fashion:

Apple shares our commitment to safety and innovation…

If Apple share OpenAI’s commitment to safety, that’s not a compliment.

In May, Jan Leike, who co-led OpenAI’s Superalignment team, left the company because of his concerns over its cavalier attitude to safety.

He was quoted by The Independent as saying:

“I have been disagreeing with OpenAI leadership about the company’s core priorities for quite some time, until we finally reached a breaking point…”

Leike’s resignation followed that of co-founder and chief scientist Ilya Sutskever, who was allegedly instrumental in the brief dismissal of Sam Altman as CEO. As with Leike, the presumed underlying cause of Sutskever’s abrupt departure was a strong difference of opinion over the direction the company has taken since it decided to go full steam ahead with publicly releasing iterations of ChatGPT while waving away the looming ramifications of artificial general intelligence (AGI).

Leike put it bluntly:

“OpenAI must become a safety-first AGI company”

Coming from someone in his position, that pretty much makes it clear that OpenAI isn’t “safety-first” right now. So back to Apple deciding to incorporate ChatGPT into all their operating systems as standard, and in the process plumb their users directly into whatever subterranean data-pipe is feeding OpenAI’s quickly-developing non-human future planetary overlord.

What exactly will Apple Intelligence do?

Apple Intelligence will read your emails…

In Mail, staying on top of emails has never been easier. With Priority Messages, a new section at the top of the inbox shows the most urgent emails, like a same-day dinner invitation or boarding pass. Across a user’s inbox, instead of previewing the first few lines of each email, they can see summaries without needing to open a message. For long threads, users can view pertinent details with just a tap. Smart Reply provides suggestions for a quick response, and will identify questions in an email to ensure everything is answered.

…listen to your phone calls…

In the Notes and Phone apps, users can now record, transcribe, and summarize audio. When a recording is initiated while on a call, participants are automatically notified, and once the call ends, Apple Intelligence generates a summary to help recall key points.

…monitor your messages…

For example, if a user is messaging a group about going hiking, they’ll see suggested concepts related to their friends, their destination, and their activity, making image creation even faster and more relevant.

…scan your photos and videos…

Searching for photos and videos becomes even more convenient with Apple Intelligence. Natural language can be used to search for specific photos, such as “Maya skateboarding in a tie-dye shirt,” or “Katie with stickers on her face.” Search in videos also becomes more powerful with the ability to find specific moments in clips so users can go right to the relevant segment.

…and super-charge Siri:

With Apple Intelligence, Siri will be able to take hundreds of new actions in and across Apple and third-party apps. For example, a user could say, “Bring up that article about cicadas from my Reading List,” or “Send the photos from the barbecue on Saturday to Malia,” and Siri will take care of it.

[…]

Siri will be able to deliver intelligence that’s tailored to the user and their on-device information. For example, a user can say, “Play that podcast that Jamie recommended,” and Siri will locate and play the episode, without the user having to remember whether it was mentioned in a text or an email. Or they could ask, “When is Mom’s flight landing?” and Siri will find the flight details and cross-reference them with real-time flight tracking to give an arrival time.

Are you excited yet?

Elon Musk certainly isn’t. The world’s richest stoner made it clear that Apple Intelligence won’t be welcomed by him:

“If Apple integrates OpenAI at the OS level, then Apple devices will be banned at my companies. Visitors will have to check their Apple devices at the door, where they will be stored in a Faraday cage.”

He went on:

"It's patently absurd that Apple isn't smart enough to make their own AI, yet is somehow capable of ensuring that OpenAI will protect your security & privacy! Apple has no clue what's actually going on once they hand your data over to OpenAI. They're selling you down the river."

X’s Shitposter-in-Chief also shared a helpful image to describe how OpenAI might drink Apple’s milkshake.

The jury is out on whether this is genuine concern from Elon, or just sour grapes at the thought of getting pipped to the post before he can launch his own world-devouring AI.[1]

What does seem confirmed is that Big Tech doesn’t care whether you want AI or not - you’re gonna get it.

ChatGPT will come to iOS 18, iPadOS 18, and macOS Sequoia later this year, powered by GPT-4o. Users can access it for free without creating an account, and ChatGPT subscribers can connect their accounts and access paid features right from these experiences.

Speaking of spying…

Buy With Your Eyes

Mastercard, the payment provider known worldwide as being second-best to Visa, is expanding its “Biometric Checkout Program” to five stores in Poland.

In other news, Mastercard has a Biometric Checkout Program.

In the opinion of your humble correspondent, whoever was in charge of this project and missed the opportunity to call it “Buy-o-metric” should be sacked, or at least made to do push-ups with Janice from Accounts sitting on their back.

From their press release:

Mastercard's global Biometric Checkout Program, represents a first-of-its-kind technology framework to help establish standards for new ways to pay, allowing cardholders to use a wide range of biometric payment authentication methods such as palm, face or iris scan. This simplifies the checkout process in store, as consumers no longer need to use a physical payment card, cash or a mobile device to pay for purchases. With Mastercard Biometric Checkout Program, secure and convenient experiences are possible simply by using your biometrics.

The payment system is being installed in partnership with a Polish firm called PayEye, and involves special terminals that watch your eye movements (emphasis mine).

PayEye is a Polish fintech that is developing proprietary cashless biometric payment technology using a fusion of iris and face biometrics. To accept PayEye payments, a business must equip itself with a special eyePOS terminal. The process requires precise calibration, such that there is no risk of accidentally looking at the terminal and paying.

As you may have noticed from these corporate announcements of intrusive technology roll-outs, there is an emphasis on how secure and safe it will be but with precious little actual information on how oversight works, what the relevant rules and laws are, and what, if any, penalties could be incurred by transgressing. Instead, it’s just the ol’ “trust me, and if you trust me, you can trust my partners too.”

Maybe we should call it the ‘too-step.’

The Biometric Checkout Program is governed by Mastercard’s principles for data responsibility, reinforcing that consumers have the right to control how their personal data is shared and benefit from its use. As part of Biometric Checkout Program, Mastercard does not process any biometric data but ensures stakeholders, such as Empik and PayEye, maintain top security and privacy when performing a biometric in-store payment.

“Principles for data responsibility” in fintech is a phrase I’d list alongside “vegan piranha” as inherently ludicrous, but maybe I’m just a spoilsport.

Nick Corbishley at Naked Capitalism adds some flavour via Ajay Bhalla of Mastercard:

Ajay Bhalla, Mastercard’s president of cyber and intelligence solutions described the company’s biometric checkout program as a “cool new technology” that “allows consumers to pay with a smile on their face or just wave.” That way, he said (emphasis my own), “you can forget the clunkiness of taking your wallet out, your devices out, your card out.” Just do your shopping, he said “go to the checkout and… pay with your face. It’s as simple as that.”

Yeah, a smile is the first thing that comes to my face when I have to pay for something. How well you know me, Mastercard.

If It Flies, It Dies

Animal rights activists are in a flap because “Limburg an der Lahn, in the western German state of Hesse, has just voted to exterminate its 700-strong pigeon population,” as per EuroNews.

The fatwa on our feathered friends is to be carried out by a falconer:

…the falconer will lure the birds into a trap, hit them over the head with a wooden stick to stun them, and then break their necks.

As usually happens when the authorities stick their beak in, it probably won’t even work:

Remarkably, some studies show that pigeon numbers can even increase following a cull.

This was the case in Basel, Switzerland, which had a pigeon population of around 20,000. From 1961 to 1985, the city killed around 100,000 pigeons each year, but the population remained stable, the Local news site reports.

A group called Pigeon Action found an alternative solution, now known as the ‘Basel model’, whereby citizens were warned against feeding the animals. Pigeon lofts were also installed so that eggs could easily be removed from them.

As a result, Basel’s pigeon population plummeted by 50 per cent within four years.

The much-maligned rock dove is most likely on its way out anyway, “because fries and breadcrumbs do not meet the requirements of pigeons, [so] the animals die a slow death of starvation.”

Someone should really take the poor birds under their wing.

Going Pete Tong In Hong Kong

According to Jonathan Sumption, “a former overseas judge of Hong Kong’s Court of Final Appeal”, Hong Kong is “slowly becoming a totalitarian state.”[2]

Sumption published a scathing piece in the Financial Times this week, sure to harsh the mellow of Hong Kong’s chief executive and the supposedly-autonomous region’s real controllers in Beijing.

In it, he highlights a recent case that precipitated his resignation:

On May 30 Hong Kong’s High Court handed down a landmark judgment convicting 14 prominent pro-democracy politicians of “conspiracy to commit subversion”, contrary to the Beijing-imposed National Security Law of 2020. The accused had organised unofficial primaries to select a common list of pro-democracy candidates for the elections to the Legislative Council.

The Legislative Council (LegCo) is the legislative body of Hong Kong, responsible for passing laws, approving the budget, the usual stuff. The Basic Law, Hong Kong’s constitution, “expressly authorises Legco to reject the budget, and provides that if it does so twice, the chief executive must resign.”

The 14 pro-democracy politicians convicted in May had planned to leverage the right to reject the budget to obtain concessions from the Chief Executive and, by extension, Beijing.

As Sumption explains it, the High Court’s decision was “legally indefensible”:

…the High Court decided that rejecting the budget was not a permissible means of putting pressure on the chief executive to change his policies. He would certainly reject the majority’s demands, they said, and so would have to resign. That would interfere with the performance of his functions. The result is that Legco cannot exercise an express constitutional right for a purpose unwelcome to the government. Putting a plan to do this before the electorate was branded a criminal conspiracy. The maximum sentence is life imprisonment, the minimum 10 years.

Referring to “a growing malaise in Hong Kong’s judiciary”, Sumption explains that there are multiple factors affecting the morale of judges, not the least of which is that “every judge knows that under the Basic Law, if China does not like the courts’ decisions it can have them reversed by an ‘interpretation’ from the standing committee of the National People’s Congress in Beijing.”

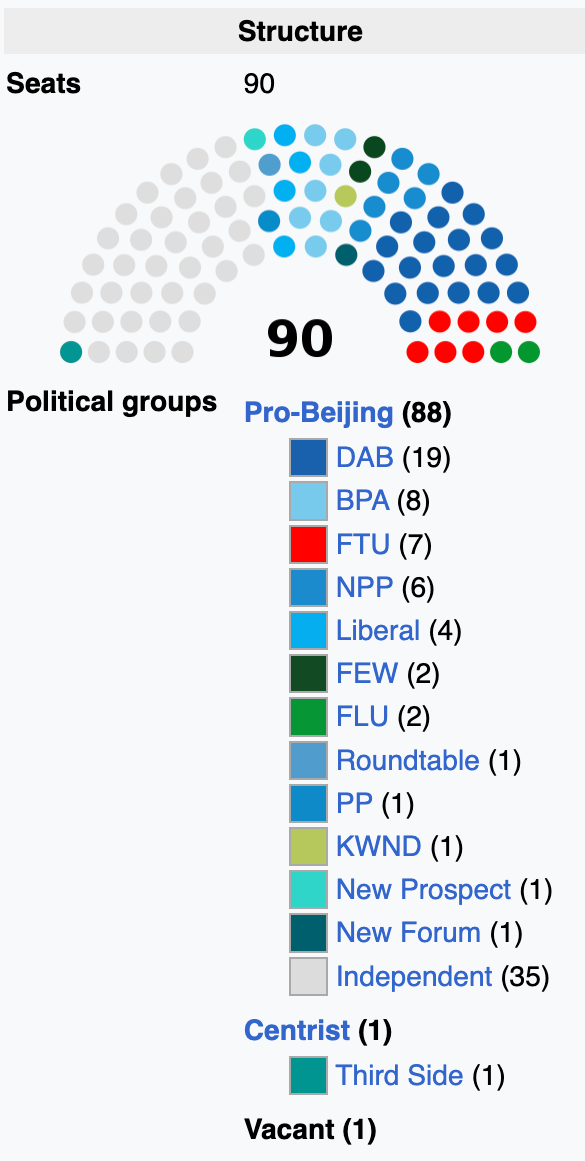

Wikipedia has a seat map of Hong Kong’s Legislative Council, to give you a flavour of the robust adversarial politics permitted in the Special Administrative Region.

90 seats, 88 of which are pro-Beijing.

The handover of Hong Kong in 1997 came with the condition that Beijing preserve the territory’s autonomy, freedoms and way of life for 50 years thereafter, but the Chinese Communist Party apparently could only manage to hold back its authoritarian urges for 23 years.

This is a sign of what Sumption calls “the paranoia of the authorities,” the obsession of the CCP with restricting, crushing, and silencing any opposing voice, any dissent, any contradiction.

It’s worth quoting Sumption here at length:

The violent riots of 2019 were shocking but the ordinary laws of Hong Kong were perfectly adequate for dealing with them. The National Security Law was imposed in response to the threat of a pro-democratic majority in Legco in order to crush even peaceful political dissent. Pro-democracy media have been closed down by police action. Their editors are on trial for sedition. Campaign groups have been disbanded and their leaders arrested.

An oppressive atmosphere is generated by the constant drumbeat from a compliant press, hardline lawmakers, government officers and China Daily, the mouthpiece of the Chinese government. A chorus of outrage follows rare decisions to grant bail or acquit. There are continual calls for judicial “patriotism”. It requires unusual courage for local judges to swim against such a strong political tide. Unlike the overseas judges, they have nowhere else to go.

Intimidated or convinced by the darkening political mood, many judges have lost sight of their traditional role as defenders of the liberty of the subject, even when the law allows it. There are guarantees of freedom of speech and assembly in both the Basic Law and the National Security Law, but only lip-service is ever paid to them. The least sign of dissent is treated as a call for revolution. Hefty jail sentences are dished out to people publishing “disloyal” cartoon books for children, or singing pro-democracy songs, or organising silent vigils for the victims of Tiananmen Square.

Unfortunately, it seems that “one country, two systems” is quickly becoming “one system”, and democracy is not winning.

Marke Raines, a London lawyer with Raines & Co, wrote a letter to the FT in response to Sumption’s article, in which he pointed out that it is odd for the article’s title to be “The rule of law in Hong Kong is in grave danger”.

In essence, the rule of law means that people are bound to obey laws, not the discretionary diktats of men or bodies of men.

Raines goes on to argue that since the Basic Law allows any judicial ruling to be subjected to “interpretation” by party cadres in Beijing, the rule of law in Hong Kong is non-existent, not endangered.

Hear hear.

AI Steve

Meet AI Steve, an independent candidate running to be a Member of Parliament representing the Brighton Pavilion constituency.

This is not a joke.

‘He’ is running against Siân Berry, a Green Party stalwart, in the safest Green seat in the UK, so it won’t be an easy campaign.

From AI Steve’s website:

AI Steve’s policies will be implemented by the “Real” Steve Endacott who will attend Parliament and therefore its [sic] also important that Voters get to know his background, attitudes and capability.

The “Real” Steve Endacott is “a Sussex Entrepreneur” who “comes from a working-class background, with a mother who worked in Tesco and a father who unloaded ships in Sheppey Docks.”

The SmarterUK team, led by the “Real” Steve Endacott, has spent many months brainstorming sensible and practical policies for both the National Stage and, specifically, the Brighton and Hove Area.

No Career Politicians. SmarterUK is staffed by sensible businesspeople.

No Political Egos. We will happily adopt the good policies of other Party’s [sic] rather than just criticise them for no real reason.

Practically Green. We all need to become greener to save the planet, but we must make these changes in behaviour affordable.

Technology Reform. You have seen how we have created AI Steve. Think who [sic] else we could reform Government with our understanding of modern technology.

It’s actually a pretty cool idea, to use a 24/7 AI interface to gather constituents’ feedback and needs, and collate all that information as a basis for policy-making. With a real human (why put quotes around “Real”, Steve Endacott?) attending Parliament and leading a team of humans, it seems less of a horrible blunder or cheeky gimmick and more like a reasonably smart solution for a comms-saturated, time-poor political environment where everyone wants to be heard but nobody has space in their schedule to listen.

I’m not 100% clear on how exactly policies will be formed, although the SmarterUK team’s description sounds a lot like blockchain governance:

SmarterUK will recruit locals to create policies (Creators) and commuters from Brighton Station to score these policies (Validators). Policies meeting the 50% threshold will be adopted, and the validators will control all AI-STEVES parliamentary votes—the ultimate form of democracy.

A Creator is expected to chat with AI Steve to hear about existing policies and give opinions that new policies can be based on. A Validator is expected to “[s]pend just minutes a week acting as a control mechanism to stop daft policies by giving each a score from 1 to 10. Only policies scoring more than 50% will be adopted.”

It all sounds very Black Mirror, specifically the episode called The Waldo Moment, in which a popular animated character runs for political office. This particular bit of dialogue rings eerily prescient:

The UK general election has been called for July 4, so we’ll know in a few weeks whether AI Steve is the “man” of the hour, or will be scheduled for deletion. Either way, yet another line in consensus reality has been crossed.

Speaking of consensus reality…

The EU Builds A Twin Earth And Therefore Reality Is A Simulation (Maybe)

The EU has spent a reported €315 million creating a “digital twin” of Earth on a supercomputer to run complex climate simulations.

This AI-powered “digital twin” virtually simulates the interaction between natural phenomena and human activities to predict their effect on things like water, food and energy systems.

[…]

It is expected to continually evolve over the coming years, completing a full digital replica of the Earth by 2030.

The Europeans involved were positively frothy at the advent of such a Very Very Big Deal.

"The launch of the initial Destination Earth (DestinE) is a true game changer in our fight against climate change," says Margrethe Vestager, Executive Vice-President for a Europe Fit for the Digital Age. "It means that we can observe environmental challenges which can help us predict future scenarios - like we have never done before... Today, the future is literally at our fingertips."

There’s a punchline to the story of building a supercomputer to model our planet in order to help manage measures to mitigate the effects of a changing climate, but I’ll save that for the end.

Let’s look at the idea of a simulation in itself for a moment.

The “simulation hypothesis” was set out in a paper by Nick Bostrom in 2003 but dates back at least to 2001. Bostrom put it as follows in an early draft:

The basic idea of the argument can be expressed roughly as follows: If transhumanists were right in thinking that we will get to the posthuman stage and run many ancestor-simulations, then how come you are not living in such a simulation?

If in the future we could become capable of simulating our past, Bostrom suggests that it follows that our present is a simulation.
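For the curious, the published 2003 version of the paper makes that “it follows” step explicit with a single fraction. Roughly, in my paraphrase: f_P is the fraction of human-level civilisations that reach a posthuman stage, N̄ is the average number of ancestor-simulations such a civilisation runs, and H̄ is the average number of people who lived in a civilisation before it got there. The share of all observers with human-type experiences who are simulated is then:

```latex
f_{\mathrm{sim}}
  = \frac{f_P \, \bar{N} \, \bar{H}}{f_P \, \bar{N} \, \bar{H} + \bar{H}}
  = \frac{f_P \, \bar{N}}{f_P \, \bar{N} + 1}
```

If f_P × N̄ is large (a modest fraction of civilisations each running a thousand simulations gives something like 1000/1001), the fraction creeps towards 1 and “you’re probably in one” follows. If almost no civilisation gets there, or none bothers, it collapses towards 0. Hence the “maybe” in the headline.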

Bostrom expanded on how such a simulation could be ‘nested’, creating worlds within worlds:

If we are living in a simulation, then the cosmos which we are observing is just a tiny piece of the totality of physical existence. The physics in the universe where the computer is situated which is running the simulation may or may not resemble the physics of the world we observe. While the world we see is in some sense “real”, it is not located at the fundamental level of reality.

It may be possible for simulated civilizations to become posthuman. They may then run their own ancestor-simulations on powerful computers they build in their simulated universe. Such computers would be “virtual machines”, a familiar concept in computer science. (Java script web-applets, for instance, run on a virtual machine – a simulated computer – inside your desktop.) Virtual machines can be stacked: it’s possible to simulate a machine simulating another machine, and so on, for arbitrarily many iterations. If we do go on to create ancestor-simulations, then [we] must conclude that we live in a simulation. Moreover, we would have to suspect that the posthumans running our simulation are themselves simulated beings; and their creators, in turn, may also be simulated beings.

Reality may thus contain many levels.

Bostrom must have seen The Thirteenth Floor in 1999 and gotten inspired.

Fouad Khan, writing in Scientific American in 2021, concluded that we do in fact live in a simulation, and that the speed of light is the telling “hardware artefact”[3] that proves it:

We can see now that the speed of light meets all the criteria of a hardware artifact identified in our observation of our own computer builds. It remains the same irrespective of observer (simulated) speed, it is observed as a maximum limit, it is unexplainable by the physics of the universe, and it is absolute. The speed of light is a hardware artifact showing we live in a simulated universe.

Interestingly, Khan ended the article by calling the simulation hypothesis “the ultimate conspiracy theory.”

The mother of all conspiracy theories, the one that says that everything, with the exception of nothing, is fake and a conspiracy designed to fool our senses. All our worst fears about powerful forces at play controlling our lives unbeknownst to us, have now come true. And yet this absolute powerlessness, this perfect deceit offers us no way out in its reveal. All we can do is come to terms with the reality of the simulation and make of it what we can.

Back to the EU’s twin Earth. EuroNews quote a member of the project explaining how a simulated twin Earth will be useful:

“If you are planning a two-metre high dike in The Netherlands, for example, I can run through the data in my digital twin and check whether the dike will in all likelihood still protect against expected extreme events in 2050,” said Peter Bauer, co-initiator of Project Destination Earth, when the project was initially announced in 2021.

Does the simulation include simulated people, animals, ecosystems, all interacting, behaving, creating, iterating continuously, to try to include the living things on the planet that also affect the planet? Do those simulated people have opinions about dikes, or climate change, or computer simulations?

This is where Bostrom got the bug. If we can create a simulation like that, how do we know we aren’t in one? From Descartes’ malicious demon to the EU’s DestinE project in four centuries. What a trip.

The punchline I promised you:

Although it is unclear how the system will be powered, computing experts previously stressed the importance of carbon-neutral energy in its development.

[…]

By 2027, the team aims to have additional digital twins and services up and running. The data from these simulations will then be combined to create “a full digital twin of Earth” by the end of the decade.

So the advanced computer model they’ve built to help mitigate climate change is going to use a lot of energy, they don’t quite know where that energy will come from yet, and in the meantime they are building more models that will use even more energy, all to figure out (among other things) how to use less energy.

Brilliant.

That’s it for this week. Stay sane out there, friends.

Outro music is Monty Python’s Galaxy Song, in honour of the possibility that we exist in an endless matryoshka-doll array of simulated worlds.

“Pray that there’s intelligent life somewhere up in space

’cos there’s bugger all down here on Earth.”

[1] I reckon he’s probably pretty chill, since Tesla’s shareholders just voted (again) to give him a $55.8 billion pay package as CEO.

[2] For anyone unfamiliar with Cockney rhyming slang, here’s a helpful YouTuber explaining what ‘Pete Tong’ means.

[3] The hard universal limit imposed on the simulated world by the processor speed of the computer on which the simulation is running.