The Weekly Weird #13

Sora killed the video star, AI has balls, Musk's mind microchip moves mouse, India inspects irises (and more), Gauteng Watch Party, Mums for dumbphones

Mellow greetings and welcome to the land of nodding along to the nonsense, as the world grows ever-stranger, with a sense of impending danger.

I’m a poet and I had no idea.

It’s Bumfight: Geriatric Edition in the United States as election season heats up.

Tweet of the Week is a banger, providing a suitable and encapsulating belly laugh as the world gears up for that, as well as a bumper crop of elections globally in 2024:

Sound advice.

In that vein, the Word of the Week is gerontocracy, brought to you by this New York Times op-ed and its rebuttal by an excellent Substacker. In the former, a neuroscientist explains why all brains are equal but some brains are more equal than others. In the latter, a neuroscientist explains why neuroscientists shouldn’t preface their hot takes with the claimed authority of being a neuroscientist.

Episode 107 of the podcast featuring the filmmaker and all-round quality human Sergei Loznitsa is out now, so if you haven’t checked it out yet, please do. He’s great, and we get in depth on his work, Ukraine, Russia, and totalitarianism in general.

Lastly, before we crack on: Julian Assange had his final day in court in London yesterday, arguing against his looming extradition to the United States. Here’s a screenshot of the first page of search results returned after entering the term “Assange verdict”:

Compare the framing of the story in the headline by the BBC (circled) to all the other outlets. The BBC headline is more or less the verbatim argument made by the prosecuting barrister. Mouthpiece of der Staat much?

The verdict in the Assange case is due in the coming weeks, so smoke ‘em if you got ‘em.

Onwards with our programme…

Sora Killed The Video Star

OpenAI are at it again. The tech company that brought you ChatGPT (and effortless cheating on any writing assignment) has released initial footage from their text-to-video generative AI known as Sora.

Responses have varied from breathless to bemused to bitchy. At the latter end of that spectrum, critics have pointed out that, for all the fanfare, poor Sora still doesn’t understand object permanence. For example, if you watch the bottom left of this shot from OpenAI’s reel, you’ll see three people walking across the sun-drenched plaza towards the stairs, but two of them disappear en route. While there are valid arguments doing the rounds that these kinds of bugs will be fixed as the technology develops, it’s understandable that skeptics like pointing out that an apparently advanced technology being gushed over is surprisingly dumb in some basic ways.

For your humble correspondent, the main takeaway is that this latest development in generative AI makes it clear that the era of taking video evidence at face value is categorically over, if it wasn’t over already.

The satirical 1997 film Wag The Dog did a great job of getting into the question of what we can and can’t believe in media, but it’s still worth reflecting on the budget, tech, and manpower this sort of thing required back then, versus a simple text prompt now.

The wailing and gnashing of teeth has already begun from those who expect their jobs to be destroyed by Sora as it stomps the yard, perhaps with good reason. It seems baked into the foreseeable future that the capabilities of LLMs and generative AI will grow exponentially, as technology is wont to do, swallowing human livelihoods in the process.

It doesn’t even need to be the dramatic death of industries: one corporate client using generative AI to create internal comms material is a freelancer not getting paid to do that. The money instead flows to an already highly valued tech company and away from the less centralised web of individuals currently doing that work. It’s very similar to the death of the cottage industry at the birth of the cotton gin, and from our vantage point here in the future nobody is mad at Big Cotton. That said, it’s defensible to feel that this is different, if not qualitatively then at least in scale.

Nine years ago, CGP Grey released the video Humans Need Not Apply, which described the inexorable movement towards automation and the naturally resulting unemployability of humans.

Well, here we are.

More amusingly in AI news…

AI Has Balls

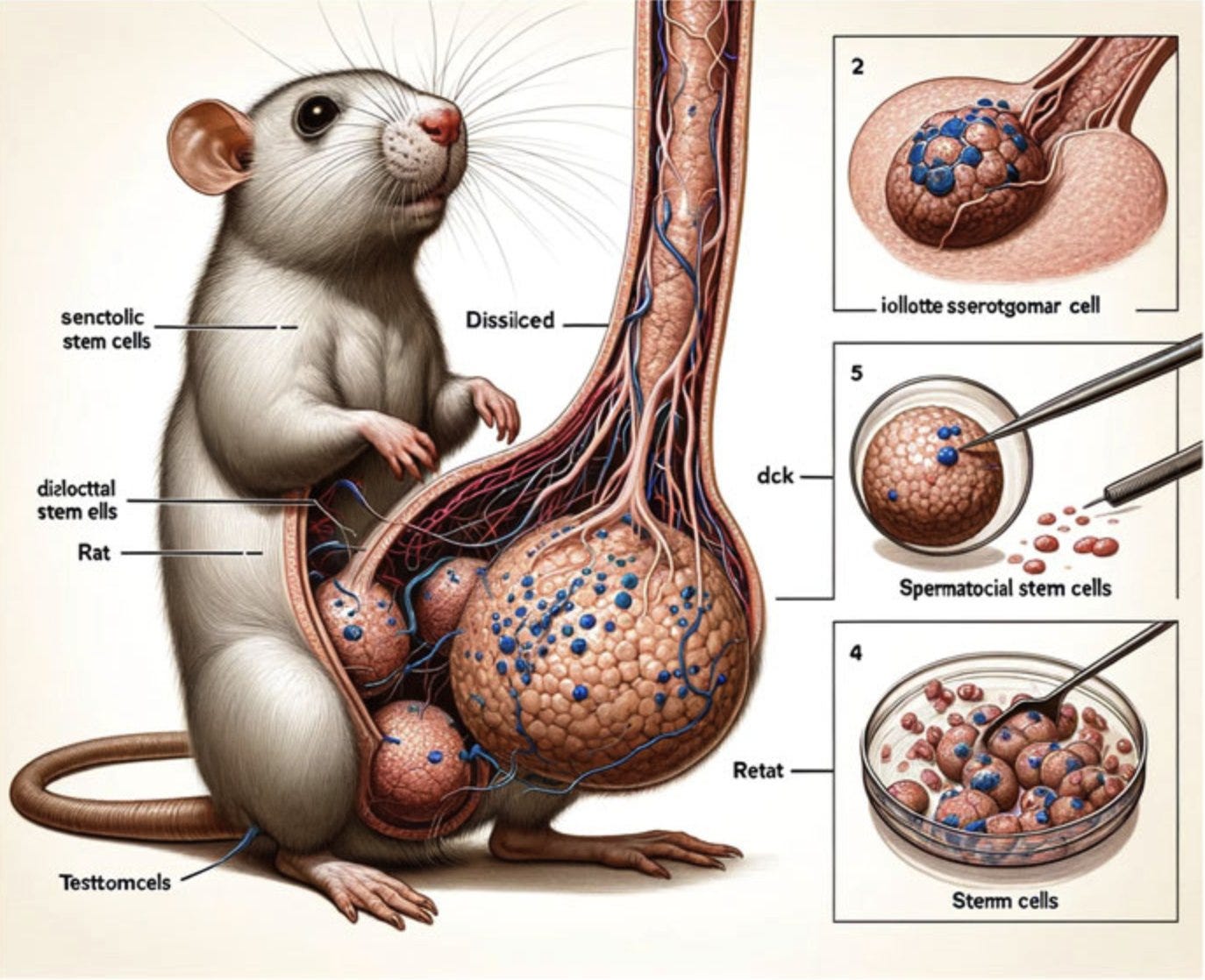

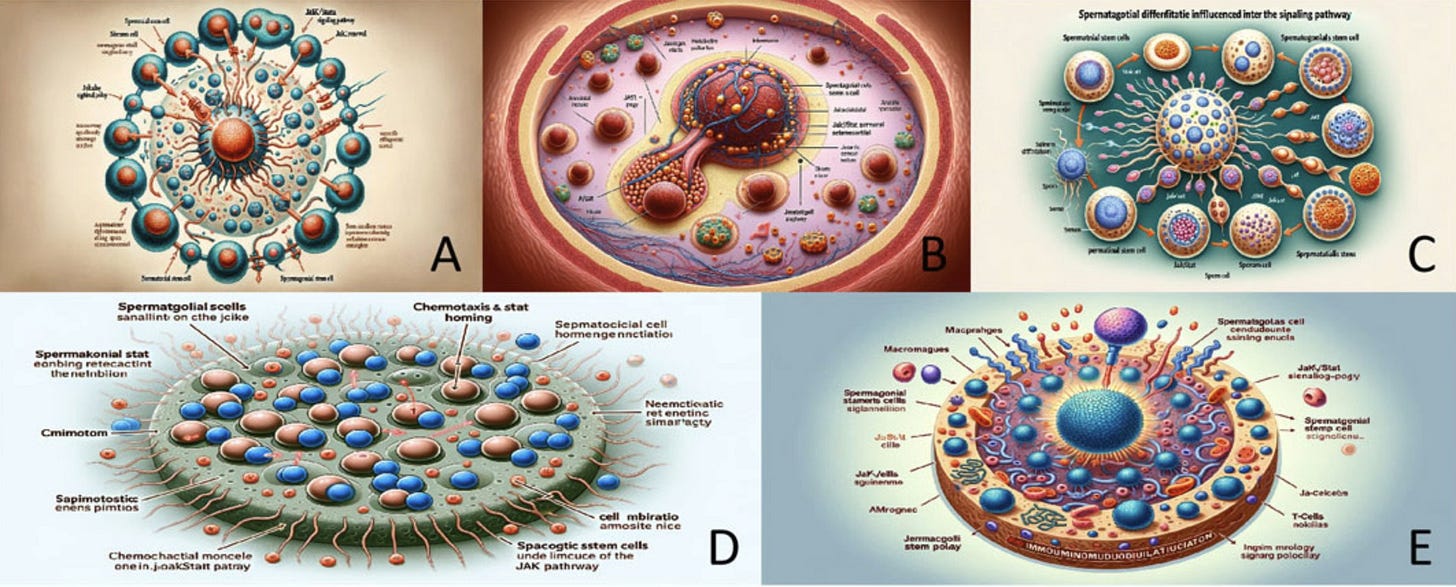

In the span of a week, the peer-reviewed journal Frontiers in Cell and Developmental Biology published and then retracted an article called Cellular functions of spermatogonial stem cells in relation to JAK/STAT signaling pathway. While perhaps not sounding so gripping from the title, what lurked within was…well, when they say that a picture is worth a thousand words, they ain’t lyin’.

Check out the Testtomcels on that rat! Dude’s junk looks like an umbilical cord. Oh, wait, it’s called a Dissilced. My mistake. Forgive me, I’m not a scientist.

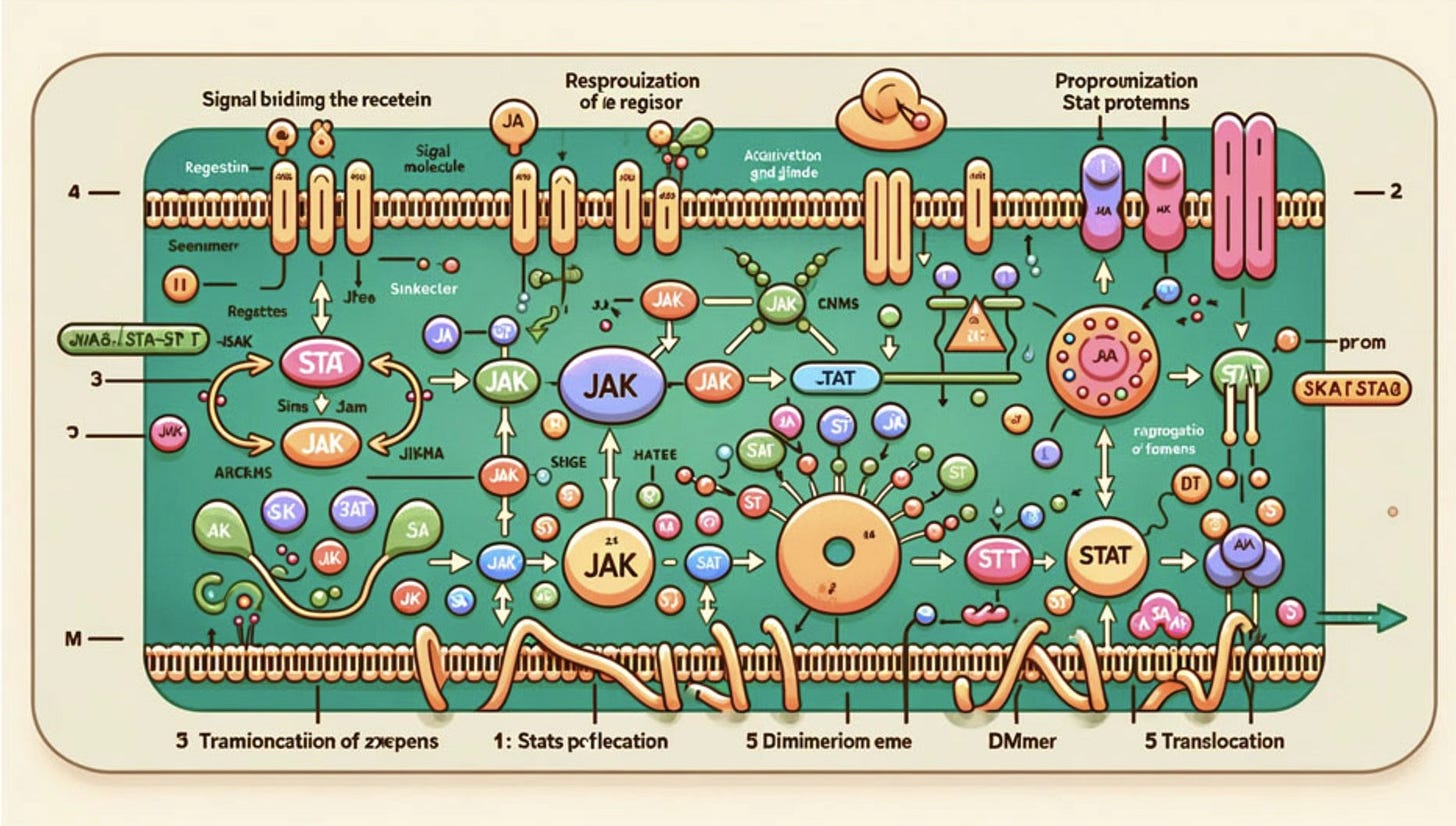

The three Chinese authors who submitted the paper included the above and other clearly AI-generated nonsensical illustrations, like the one below demonstrating Proprounization Stat protemns and Dimimeriom eme, which, if my biology jargon is on point, translate as “utter bullshit” and “pull the other one.”

I think I saw that printed on a mat in a kid’s playroom once.

Further ‘scientific’ illustrations of Spermatgolial scells [sic]…

Figure E looks like a schizophrenic on mescaline gave an artist’s rendition of Flat Earth Theory using blueberries.

The article was apparently reviewed by two scientists, from Northwestern University in the US and the National Institute of Animal Nutrition and Physiology in India respectively, and edited by someone from India’s National Dairy Research Institute (obviously the epicentre of advanced knowledge about rat testicles). No information has been forthcoming on whether the “peer review” involved the peers reviewing the paper by actually reading it. They were probably too busy generating AI images for their own legitimate scholarship.

Frontiers responded to the backlash with a brief apology that was notably light on details (and chagrin):

Thanks to the crowdsourcing dynamic of open science, we promptly acted upon the community feedback on the AI-generated figures in the article "Cellular functions of spermatogonial stem cells in relation to JAK/STAT signaling pathway", published on 13 February 2024. Frontiers has now retracted and removed the article from the databases to protect the integrity of the scientific record.

Our investigation revealed that one of the reviewers raised valid concerns about the figures and requested author revisions. The authors failed to respond to these requests. We are investigating how our processes failed to act on the lack of author compliance with the reviewers' requirements. We sincerely apologize to the scientific community for this mistake and thank our readers who quickly brought this to our attention.

The tone comes off a little more “the system works!” than “we published a deepfake rat dong paper because our reviewers waved it through without checking,” but nobody’s perfect. By “the crowdsourcing dynamic of open science,” they probably meant the immediate calling of bullshit upon them by people on social media. That’s PR-speak for you.

Manuel Corpas, Assistant Professor of Genomics at the University of Westminster and Associate Editor of the journal Frontiers in Genetics, was scathing about how Frontiers handled the scandal:

This is not enough. You do not reveal what the comments were from this review process from reviewers and editors nor [do] you respond to the comments that suggested that the text might have been generated with #AI. You do not provide either an open forum on this post where scientists can express their concerns and worries due to the serious breach that this article may have caused on the perceived quality of the science generated by this house’s journals.

So much for “open science.”

A blog post at Science Integrity Digest called The rat with the big balls and the enormous penis also pulled no punches:

[The] paper is actually a sad example of how scientific journals, editors, and peer reviewers can be naive – or possibly even in the loop – in terms of accepting and publishing AI-generated crap. These figures are clearly not scientifically correct, but if such botched illustrations can pass peer review so easily, more realistic-looking AI-generated figures have likely already infiltrated the scientific literature. Generative AI will do serious harm to the quality, trustworthiness, and value of scientific papers.

Remember, the next time you read an article in the media saying that “A new study has shown…”, make sure to double-check the scientific paper to confirm the size of the rat’s wang so you know it’s legit.

The bigger the rat wang, the factier the science. That’s how it is. Don’t shoot the messenger.

Musk’s Mind Microchip Moves Mouse

Elon Musk, the world’s wealthiest meme circulator, has confirmed (or claimed, depending on whether you like him or not) that the first person to receive a Neuralink chip in their brain has fully recovered and can now control a mouse with their mind.

Neuralink made the news, and Weekly Weird #10, when it announced having carried out the first successful implantation in a human. Now, that human can doomscroll by brain power alone, leaving their hands free to claw out their eyes as they witness what society is becoming.

While Musk gets all the glory, there are actually a few companies and people working on brain-computer interfaces (BCIs), and some of them are getting interesting results (without cutting open human skulls). Chris Norlund covers them in a 9-minute video on his YouTube channel:

The guy wearing what looks like the squid rig from Strange Days being asked “Do you feel like a superhero?” by an excited tech reporter while he performs the Herculean task of scrolling through lines of code using his brain is a moment to savour.

How far from here to Universal Soldier?

India Inspects Irises (And More)

In 2022, the Criminal Procedure (Identification) Act became law in India. It repealed and replaced the Identification of Prisoners Act of 1920, “a colonial-era statute that allowed police to measure suspects who had been convicted, detained, or were awaiting trial.”

It’s so great to finally hear about a government getting rid of one of these intrusive data-hoovering rules for a change.

Just kidding.

As per LiveLaw:

The Criminal Procedure (Identification) Act, 2022, empowers police officers or prison officers to collect certain identifiable information from convicts or those who have been arrested for an offence. This information could include fingerprints, photographs, iris and retina scan, biological samples and their analysis, and behavioural attributes.

The collected data and samples will be stored by India’s National Crime Records Bureau for 75 years, except in cases where the subject is released without charge, in which case their record should be destroyed. Whether that will happen in practice, of course, is as much a matter of faith as of law.

One of the criticisms of the Act is the scope it provides for the NCRB to share the data it gathers:

The NCRB is permitted under this statute to distribute and share personal data with any law enforcement agency. This goes against the principle of purpose limitation, which states that data can be legitimately collected for one purpose but can only be used for that purpose and cannot be used for any other purpose. As a result, organisations across the nation may have access to your personal information without following this rule. This action essentially provides police personnel free rein to collect samples.

This past week, India’s Supreme Court refused to rule on the constitutionality of the law, preferring to wait for a high court (which is lower) to give its opinion first.

The petitioner in the case is the Internet Freedom Foundation (IFF), “a registered charitable trust that was set up to protect, promote and defend human rights of citizens using information communication technologies.”

From The Leaflet:

The petitioner contended that the impugned Act and Rules are irrational in their classification of persons and situations in which information may be collected, disproportionate in their invasion of the fundamental right to privacy, and insufficient in discharging the State’s constitutional obligation to safeguard and protect the data that it collects from individuals.

The IFF argued that the Act constitutes “an open-ended, uncanalised discretionary power to collect sensitive personal information of virtually every person who comes in contact with the criminal justice system.”

According to the arguments put forward by the IFF, one of the categories of data to be collected, “behavioural attributes,” is undefined and has already been confirmed to include signature and handwriting. While the Act specifies that collected data be held for 75 years and then destroyed, “no procedure for destruction and disposal of such records has been prescribed under the law.”

Someone tried and acquitted would be forced to pursue the authorities for the destruction of their data even after their innocence is confirmed at trial:

[Even] after a person has been acquitted by courts, their “measurements” (taken at the time of arrest) that have been shared with any law enforcement agency shall not be deleted…the Act and Rules do not automatically require law enforcement agencies to delete the “measurements” of persons who have been acquitted and illegally shift the onus on the persons acquitted.

The idea of any government having a central repository of all your biometric data, your handwriting, your signature, possibly also your voice, at a time when generative AI can create believable fakes and trust in institutions is at a low ebb, seems like it should be a red line. Are there any of those left at this point?

The IFF withdrew their petition from the Supreme Court in order to file in a high court. We’ll see where it goes from there.

Gauteng Watch Party

In South Africa, the Gauteng Provincial Government has announced a partnership with a private surveillance firm, VumaCam [1], to leverage their network of 6,000 CCTV cameras for law enforcement purposes.

Gauteng is a region which includes the infamously crime-ridden city of Johannesburg, and the Premier, Panyaza Lesufi, is (hopefully metaphorically) taking no prisoners.

I am tired of crime. We cannot be held hostage by criminals, we cannot be scared and be scared even in our shadows because of criminals. Crime is a big problem and it is even halting investment interests in our province. We are signing this agreement to protect our people.

Here’s a news anchor discussing the launch of the surveillance programme with representatives of VumaCam and the Gauteng government:

It all sounds like a definitive step towards dealing with the scourge of violent crime in the area. However, the UK, which is no slouch when it comes to the use of CCTV, has found interesting nuances in the data on the effectiveness of cameras over the past forty years.

This from the College of Policing in the UK (emphasis mine):

Overall, use of CCTV makes for a small, but statistically significant, reduction in crime, but this generalisation needs to be tempered by careful attention to (a) the type of crime being addressed and (b) the setting of the CCTV intervention. CCTV is more effective when directed at reducing theft of and from vehicles, while it has no impact on levels of violent crime.

In London, there is approximately one CCTV camera for every ten residents. In the top ten cities worldwide for CCTV density per square kilometre, London is ranked fourth and is the only city on the list not located in China or India. In Britain, Automatic Number Plate Recognition (ANPR) is the norm in urban areas, and is common at petrol stations to deter people from driving off without paying. Even (or especially) with that level of surveillance, the benefit to law enforcement in terms of arrests and convictions is shockingly poor.

As per BBC News in 2019:

Home Office figures show that in England and Wales last year only 8.2% of crimes recorded by police resulted in a suspect being charged or summonsed to appear in court, the lowest level since 2015 when a new method of counting detections was introduced.

For some individual crimes, clear-up rates were even lower: 3.8% for sexual offences; 5.4% criminal damage and arson; 6% theft.

Finding the person who committed the crime is basically a coin toss:

In 45.7% of offences, no suspect was identified.

More from the College of Policing:

…use of CCTV resulted in a marked and statistically significant reduction in crime in car parks while the evidence was insufficiently clear to draw conclusions about the effectiveness of CCTV schemes in city and town centres, public housing and public transport.

Your humble correspondent extends sincere good wishes to the beleaguered citizens of Gauteng, but if the UK’s experience is indicative, it may be worth questioning what purpose CCTV actually serves in practice, rather than in theory.

Speaking of the UK…

Mums for Dumbphones

A pair of mums worried about the impact of smartphones on their children accidentally created a new movement in the UK.

From The Guardian:

The WhatsApp group Smartphone Free Childhood was created by the former school friends Clare Fernyhough and Daisy Greenwell in response to their fears around children’s smartphone use and the “norm” of giving children smart devices when they go to secondary school.

[…]

But what they expected to be a small group of friends who help “empower each other” has turned into a nationwide campaign after the group reached the 1,000-person capacity within 24 hours of Greenwell uploading an Instagram post to promote it.

The group is now nearly 5,000 strong. One of the group’s organisers was quoted in The Guardian:

We don’t want our kids to turn up in secondary school as the only one [without a smartphone]…That’s a nightmare and no one will do that to their child. But if 20%, 30%, even 50% of kids are turning up with parents making that decision, they are in a much better position.

Despite the argument from many parents that it’s impossible to limit the use of devices because of peer pressure, there are parents who keep their children away from devices, regardless of what their peers are up to. Bill Gates and Steve Jobs famously didn’t allow their kids unfettered access to devices.

Gates laid it out in true sweater-sporting bummer dad fashion: “Just because you’re the daughter of Bill Gates does not mean you get to play on your computer all day long.”

Jobs was even more unequivocal: “We don’t allow the iPad in the home. We think it’s too dangerous for them in effect.”

It’s true that it’s hard to keep your kids off the digital crack pipe. It involves saying “no” a lot, getting accused of being unfair, suffering relentless pestering, even knowingly being an obvious hypocrite as you surf for recipes and check emails while your kid sulks, but it is possible. Do you really need 50% of other parents behind you if you are so certain that the phone is bad for your child? Why is it so hard to just say no?

Anyone?

Bueller?

That’s all, folks!

Our music video outro for this week’s Weird is a deepfake of Adolf Hitler and Joseph Stalin lip-synching to Video Killed The Radio Star by The Buggles. The YouTube comments are priceless, and Fake Hitler’s resemblance to Dame Maggie Smith is eerie. Enjoy.

Stay sane out there, friends.

[1] VumaCam is run by a guy named, and this is not a joke, Ricky Crook. What could go wrong?