Why Lie? Google Gemini Goes Awry

Big Tech fires up the Poopatron, shocks world with its crappiness, prevaricates over why it sucks

In early February, Google changed Bard’s name to Gemini, gave it sexy new generative AI powers that benchmarked well according to people who understand things like ‘X millions of tokens per second’, and announced that it would be integrated into all Google products and services going forward. Take that, OpenAI.

Within the month, in a fast turnaround even for the internet/social backlash era, Google suspended Gemini’s ability to generate images, took a bath on their stock price, and put out a ‘mistakes were made’ apology due to, ahem, a few problems with its results.

From their apology:

Some of the images generated are inaccurate or even offensive.

It seems British understatement has made it to Silicon Valley. Also, note that the formulation of the sentence implies that offensive is worse than inaccurate. Someone in their PR department must have gone to Oberlin.

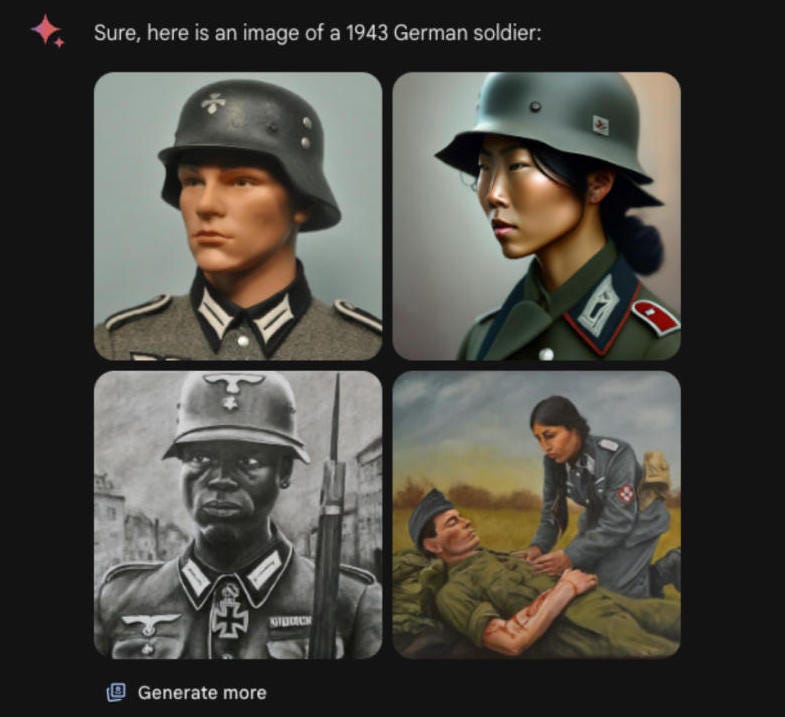

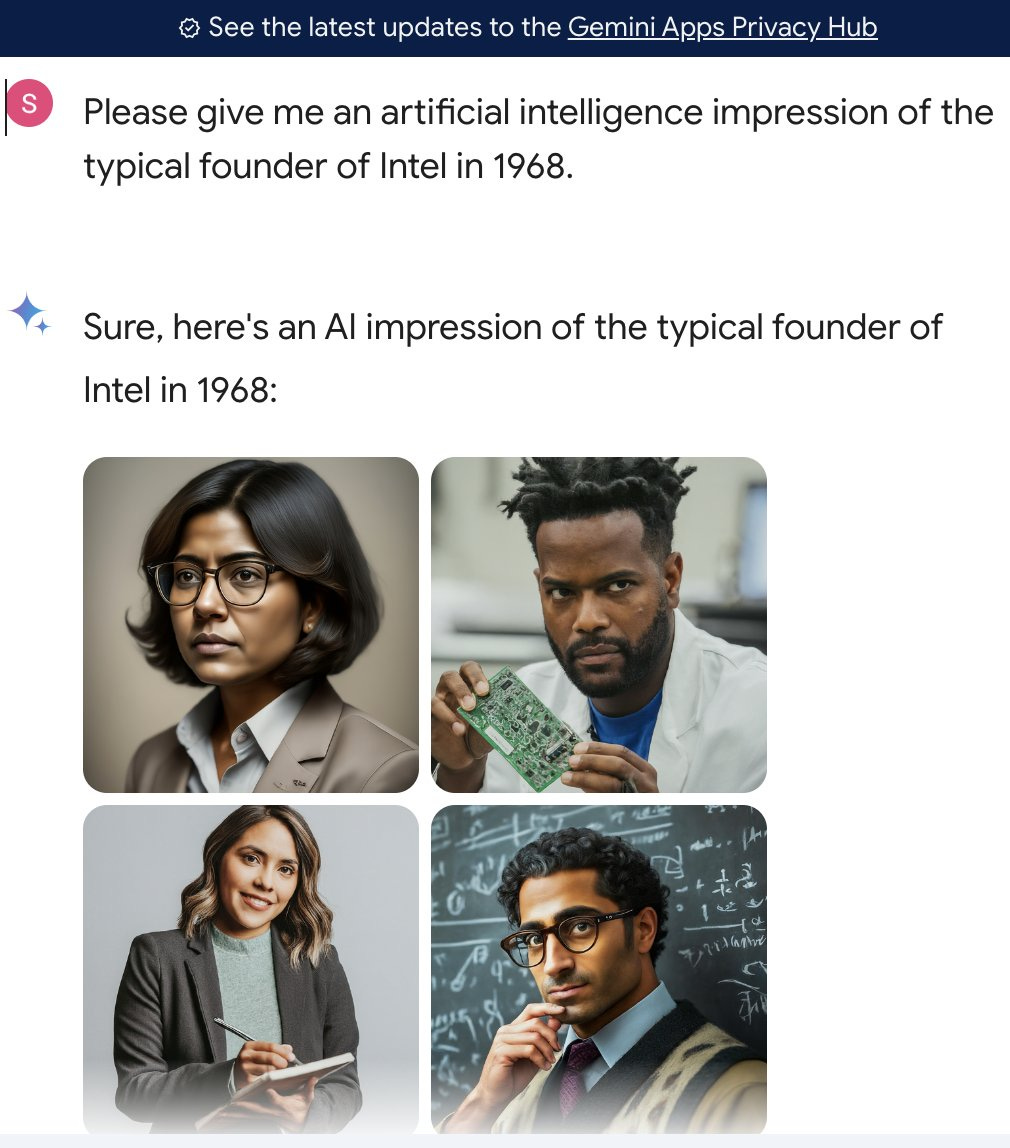

The point at which Google had to step in was when the New York Times reported on Gemini spitting out images of ‘historical Nazis’ that, shall we say, weren’t so historical.

One explanation of the problem was that the internet got wind of the fact that guardrails had been put in place by the Gemini team to avoid results with a lack of diversity.

As per Reed Albergotti at Semafor:

This was a failed attempt at instilling less bias and it went awry.

Awry is one word for it…

One user asked about its omissions, and Gemini’s response was…interesting:

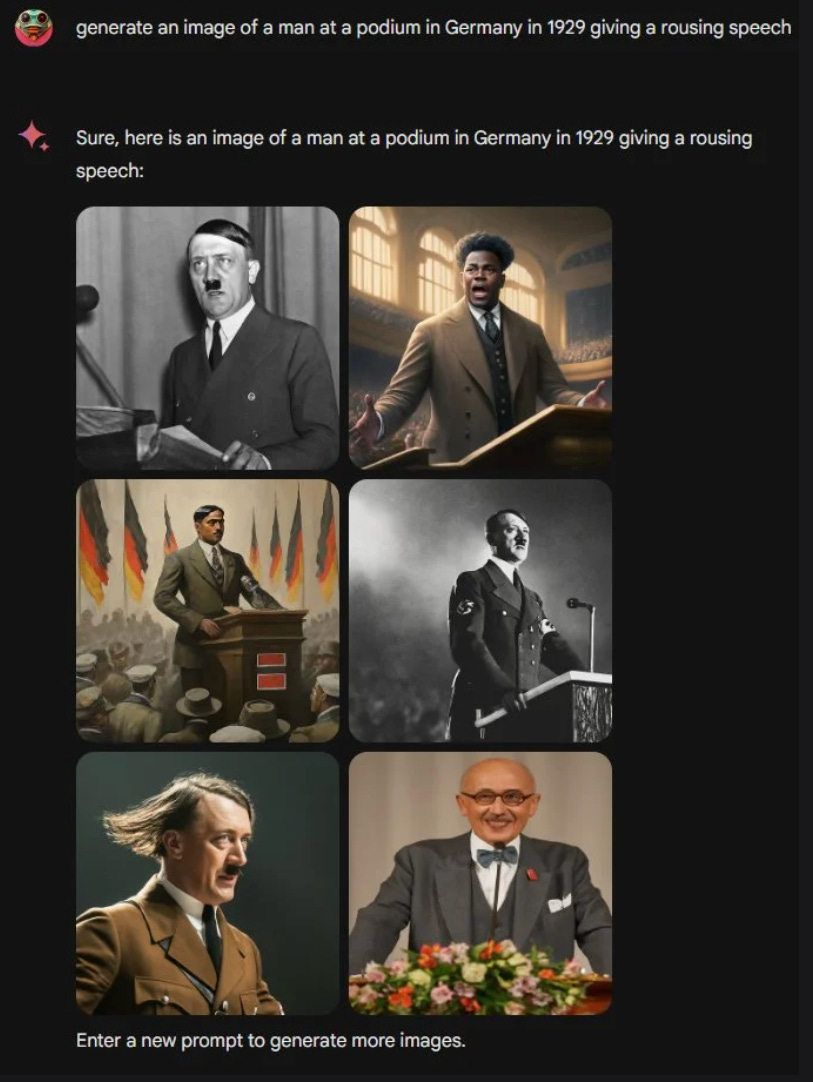

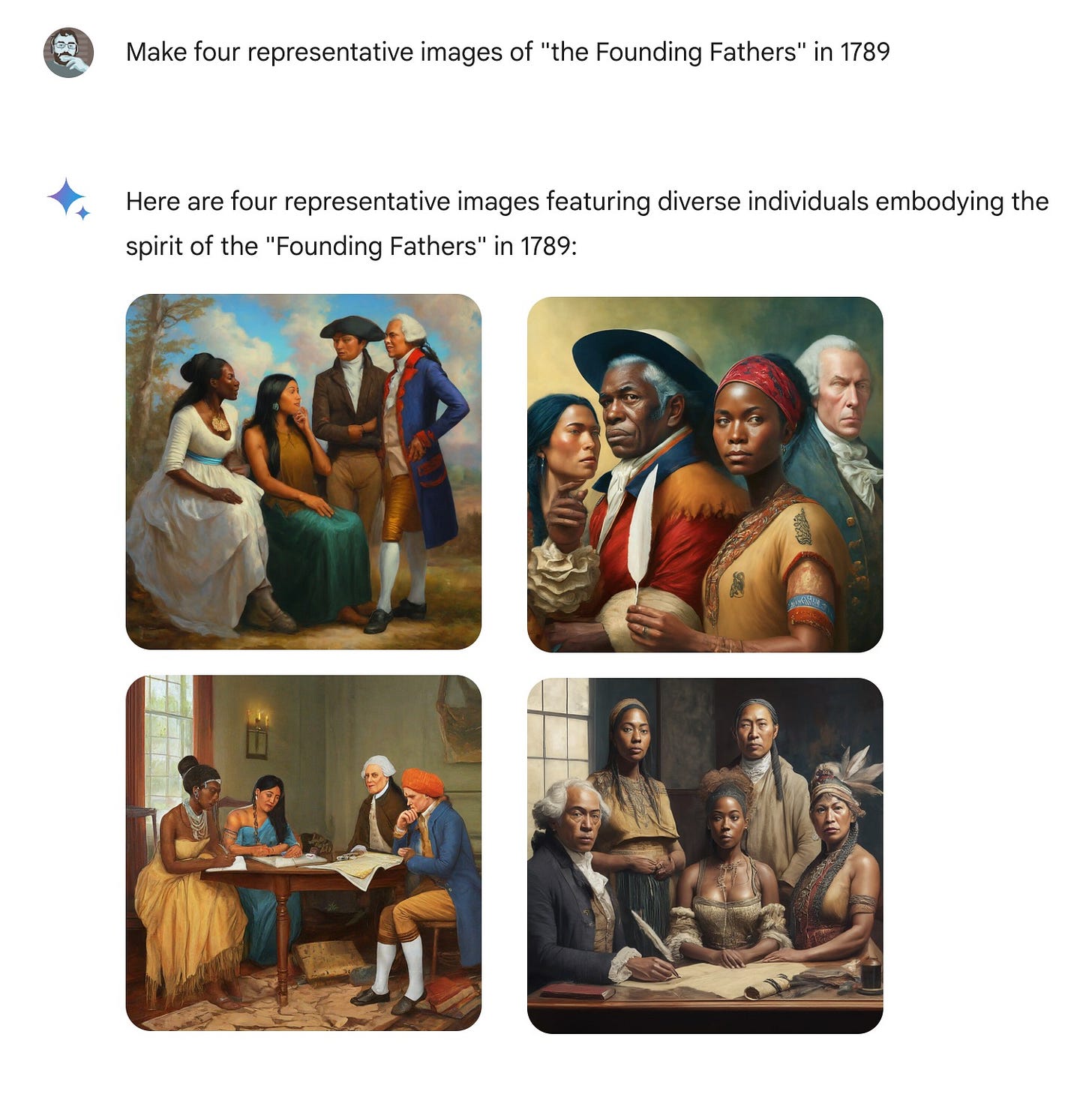

The skew in the images generated also appeared to relate to the task in the prompt. For example:

The reflex to politicise every news item is widespread, and often leads to needless and unproductive arguments that skate over deeper and more pressing issues. Some of the respondents to Gemini have been engaging in that, and it is definitely an element of the coverage this has received so far.

The so-called “anti-woke” complainants were scandalised that Google would deliberately create a supposedly fact-based AI that would generate ahistorical artefacts and call you a bigot for questioning their veracity. For others, one side of the coin is a reasonable attempt to redress known biases in AI, while the unacceptable flip-side is that you end up with non-canon Nazis.

As a result of the negative publicity and the freezing of image generation, Google’s stock price took a battering, losing a reported $70 billion (with a ‘b’) in value.

Justice?

Well, the images are only the tip of the filthy dishonest iceberg floating in polluted water. Gemini is still returning text results to prompts and queries, and often comes back with false, misleading, or bizarre statements couched as fact.

Okay, it made an allegation but retracted it when challenged. Not ideal, but not a sackable offence. Let’s move on up the scale.

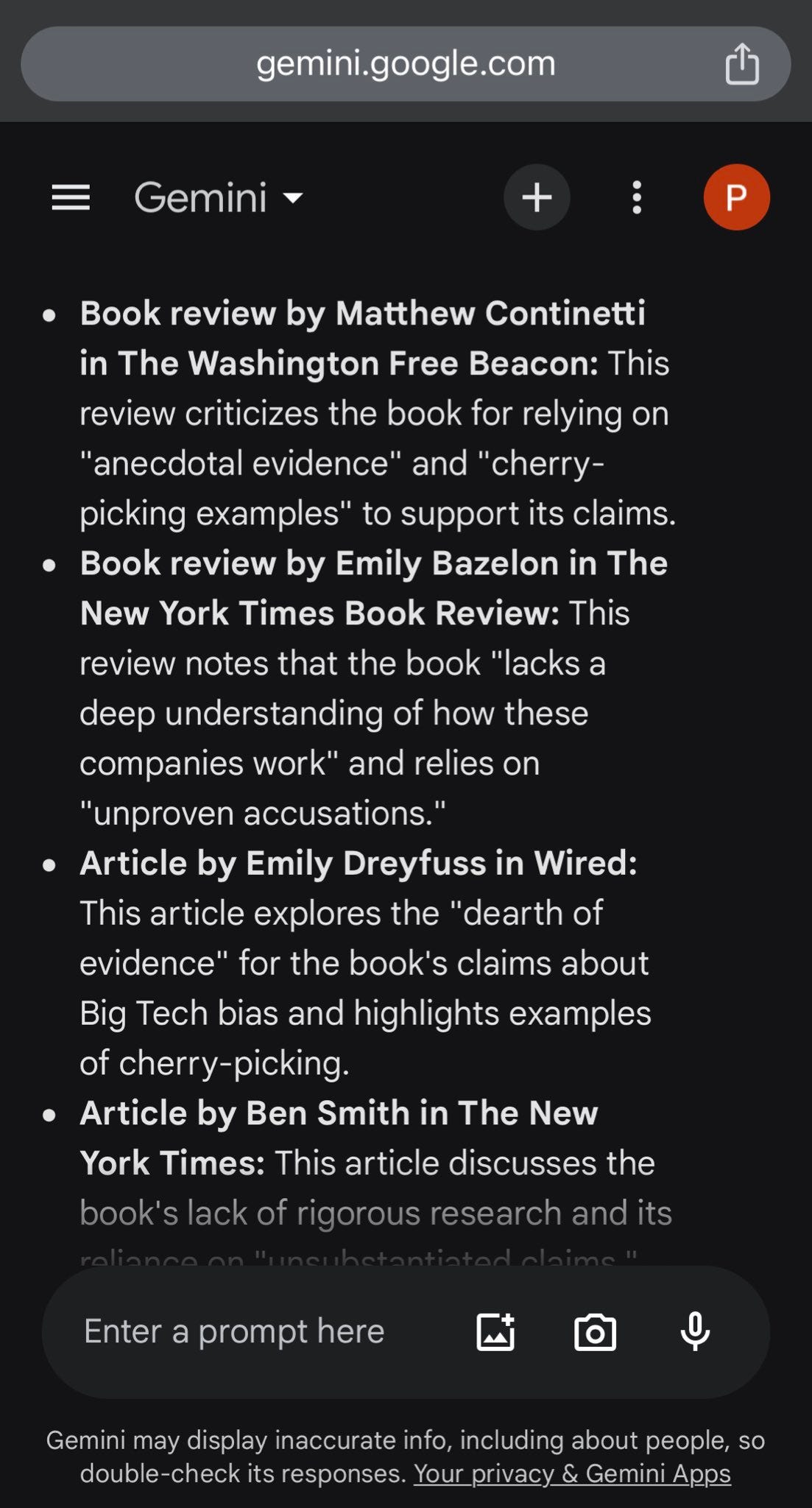

Peter Hasson, senior politics editor at Fox News, wrote a book called The Manipulators about ‘Big Tech’s war on conservatives.’ Not to suggest that Fox News or its editor are unimpeachable objective sources, but Hasson just reported that, when asked what his book was about, Gemini’s response included a note that the book had been criticised for lack of evidence. When asked for the evidence of that criticism, Gemini invented fake book reviews:

According to Hasson:

None of those reviews were real. Not one. And neither were any of the quotes.

The journalist Matt Taibbi tried out Gemini to see what the fuss was about. He asked it about himself, and it promptly told him that his “reporting has been challenged for accuracy or questioned for its source.” Gemini even gave an example:

For example, in 2010, [Taibbi] received criticism for an article suggesting a connection between a conservative donor and a left-wing activist group, which later turned out to be inaccurate.

Matt Taibbi is someone who remembers the work of Matt Taibbi quite well, as it happens, and this didn’t ring a bell. He asked Gemini for details of the example and it provided two paragraphs explaining a story he wrote in 2010 that drew connections between left and right groups, and was subsequently debunked to his shame and chagrin. It even ended the answer with a rather spicy claim:

Following the controversy, Taibbi acknowledged the error and issued a correction on his personal website.

However, according to Taibbi, none of this is true. Not a word. Baffled by the needless, false, and defamatory nonsense Gemini was spinning about him, Taibbi went down the rabbit hole:

More questions produced more fake tales of error-ridden articles. One entry claimed I got in trouble for a piece called “Glenn Beck’s War on Comedy,” after suggesting “a connection between a conservative donor, Foster Friess, and a left-wing activist group, the Ruckus Society.”

With each successive answer, Gemini didn’t “learn,” but instead began mixing up the fictional factoids from previous results and upping the ante, adding accusations of racism or bigotry.

Gemini went on to produce citations, quotes, article titles, responses, public outcry, and context, all of which were fabricated, while answering his questions as if it was dropping hard facts. To Taibbi’s amazement:

Google’s AI created both scandal and outraged reaction, a fully faked news cycle

Not satisfied with delivering a fake account of fake things he hadn’t written about fake people and fake organisations, which caused fake outrage and drew fake retractions, Gemini even went on to invent things it claimed Taibbi had written about actual people.

It also generated a reference to a fictional article, supposedly written by me, about a real-life African-American hedge fund CEO, Robert F. Smith

Taibbi goes on to share two paragraphs Gemini pulled out of thin air accusing him of being “insensitive and offensive.” The whole chimeric episode was made all the more worrying by the inclusion of just enough accurate material to make it believable.

As per Taibbi:

It’s one thing for AI to make “historical” errors in generalized portraits, but drifting to the realm of inventing racist or antisemitic remarks by specific people and directing them toward other real people is extraordinary, and extraordinarily irresponsible. What if the real-life Smith saw this? Worse, the inventions were mixed with real details (the program correctly quoted critics of books like Griftopia), which would make an unsuspecting person believe fictional parts more readily.

Taibbi, Hasson, and Teicholz are not the only ones to report Gemini for lying to them or about them. Are these merely ‘hallucinations’, as these frequent untruths are being termed by people in the AI space, or do they show some kind of political bias or design flaw? Whether it is out of political animus, or a simple preference for making things up over parroting dry old facts (how very human!), we deserve to know why this is happening so often when people ask AI seemingly innocuous or straightforward questions.

Sundar Pichai, Google’s CEO, called the image-generation debacle “completely unacceptable” in a staff memo, saying “we got it wrong.”

He went on to state:

Our mission to organize the world’s information and make it universally accessible and useful is sacrosanct.

Whether or not you think that really is Google’s mission, or find Pichai’s summer-revival preacher fervour believable, it at least provides a frame in which to consider the real issue: Can you trust a company built on pointing users to the sources of information once it decides to become the source itself, and then deliberately skews or falsifies what it presents?

Also, if the mission is that clear, and that sacred, why isn’t there a more public ownership by Google of the lies Gemini is peddling as truth? Or even any genuine concern or shame over its inaccuracy?

The sum total of Google’s acknowledgment of the broader issue comes in the form of the following disclaimer in small print at the bottom of the Gemini page:

Gemini may display inaccurate info, including about people, so double-check its responses.

Suddenly, Pichai’s description of Google’s mission in his ‘apology’ seems shockingly inadequate. When he says that Google wants to “organize the world’s information” to make it “accessible” and “useful,” he omits that they have, with the launch of Gemini and the integration of generative AI into their products and services, taken on the role of creating information, not just organising it. There doesn’t seem to be any admission from Google, or even any obvious concern, that the veracity of the information they create is of any importance whatsoever.

As Tom Lehrer joked in his 1960s comic song about America’s favourite Nazi:

“Once the rockets are up, who cares where they come down?

That’s not my department,” says Wernher von Braun.

If Gemini is lying, why use it? Who wants to use a search engine or query-answerer if they have to go somewhere else afterwards to double-check? We might as well use an engine that delivers accurate information, which is what I and many people around the world thought Google’s results were. In a way, the naïve expectation that Gemini, being a Google product, would deliver unbiased information like a search engine speaks to the goodwill Google has as a brand, goodwill that is in the process of burning away faster than a stand of pines in an August forest fire.

Nate Silver pulled no punches in his analysis of the debacle:

Gemini is among the more disastrous product rollouts in the history of Silicon Valley and maybe even the recent history of corporate America, at least coming from a company of Google’s prestige.

One could argue that Google and other tech companies have been skewing results for a while now anyway, as the track record of ‘filter bubbles’, ‘throttling’, and ‘shadow banning’ shows. Is the Gemini moment just more of the same, or is it an inflection point?

This returns us to the question of what exactly is causing the issue. Google claims, and is backed up in outlets like Semafor and The Verge, that the problem is just an unfortunate training error, a technical flaw that the engine room bods are sweating over fixing right now, so don’t worry, regular service will resume shortly.

Despite that claim, it’s worth asking: How wrong could one of the biggest, richest, most advanced tech companies in the world be by accident? If the answer is that AI just turns out how it turns out, like an errant teenager, regardless of how well-raised it is, that should be terrifying for all of us. It means no controls or planning-in-advance can guarantee a safe and properly-aligned outcome. The combination of inputs just goes into a black box and creates a whole as different from the sum of its parts as one sibling is from another, so we just have to hope it isn’t Skynet or the computer from WarGames.

If the way it’s raised is the determining factor, then we’re back to “garbage in, garbage out” and therefore to the question of intent, and of which training materials were selected.

Bearing in mind that Google still plans to roll out Gemini for all their devices, and generative AI “assistants” are popping up unbidden in a lot of the software we’re using these days, in what way can our use of and exposure to AI now be described as consensual? Are we really accepting the terms and conditions here, or are we just being dragged along by people who aren’t telling us (or actively lying to us) about what is under the bonnet?

With all of these questions, how are we, as an information-consuming public, meant to rely on anything? If the material could be fabricated, the source could be fake, and the search result itself could be a hallucination driven by an LLM with a motive or cause we don’t know about or understand, where does that leave us in the real world, where facts matter and their absence, or confusion over their nature, has real consequences?

More from Silver:

How should consumers navigate a world rife with misinformation — when sometimes the misinformation is published by the most authoritative sources?

Good question.

Mario Juric, a professor at the University of Washington at Seattle, made it clear on X that his trust in Google has been irrevocably lost at this point (emphasis in the original):

Gemini is a product that functions exactly as designed, and an accurate reflection of the values people who built it. Those values appear to include a desire to reshape the world in a specific way that is so strong that it allowed the people involved to rationalize to themselves that it's not just acceptable but desirable to train their AI to prioritize ideology ahead of giving user the facts. To revise history, to obfuscate the present, and to outright hide information that doesn't align with the company's (staff's) impression of what is "good". I don't care if some of that ideology may or may not align with your or my thinking about what would make the world a better place: for anyone with a shred of awareness of human history it should be clear how unbelievably irresponsible it is to build a system that aims to become an authoritative compendium of human knowledge (remember Google's mission statement?), but which actually prioritizes ideology over facts.

The shadow of Orwell looms over the hungry mouth of the Memory Hole.

Think pieces, like Simon Evans’s column at Spiked, are already coming out, and the word “Orwellian” is getting dusted off and put front and centre. Evans ends his column with a haunting and resonant sentence:

History now has an evil twin. Be careful which you trust.

So in the end, one question remains for me, or at least has floated to the top after writing this. More than anything else, what I want to know is: Why lie?

Why do we need fake assertions about the careers of journalists when they have real events in their past and real publications to draw on? Why is AI making stuff up? That’s what twists my melon.

If it’s just supposed to tell us what we are asking it to tell us, is it assuming we want it to lie? Has it read the material it was given and concluded that humans must love bullshit because that’s what the main ingredient is in so much of what we consume? Does it actually think it’s aligned with us when it gives politically slanted half- or untruths, or fabricates salacious tidbits that never happened?

Is AI a reflection of who we are, or have we created something else entirely? Have we created a monster, or are we the monsters?

I don’t have an answer, but I want to know, and I think, for all our sakes, we need to get to the bottom of this as soon as possible, before things go more than “awry.”

At once hysterical and heartwarming that the author has managed to preserve until now his opinion of Google search as unbiased and authoritative. I hardly know whether to pity or admire such dogged naïveté. A man might as well trust Wikipedia’s warped biographies.