The Weekly Weird #53

TrumpCoin, Britain bans banter, China hacks the US Treasury, Tony Blair cares a lot (about digital ID), US police FaRT on rights, Suk in South Korea Pt. 3, the All-Seeing Allstate

Well hello!

Once again, we gather aboard the SS Absurdity for another voyage into the turbulent tides of today’s news, where reason takes a holiday and the peculiar runs rampant. We’ve had some epic waves over the past seven days, so grab your life jackets and a stiff drink—it’s time for your Weekly Weird!

Up-fronts:

Hail to the Shitcoiner-In-Chief: Donald J. Trump has claimed a hitherto-unimaginable first. He launched a memecoin (or ‘shitcoin’ in crypto parlance) two days before his inauguration and it immediately soared to a $24 billion market cap. Print this out and frame it, it’s a piece of history now:

The disclaimer from the GetTrumpMemes website is priceless (emphasis mine):

“Trump Memes are intended to function as an expression of support for, and engagement with, the ideals and beliefs embodied by the symbol "$TRUMP" and the associated artwork, and are not intended to be, or to be the subject of, an investment opportunity, investment contract, or security of any type. GetTrumpMemes.com is not political and has nothing to do with any political campaign or any political office or governmental agency.”

This guy. Just in case you thought shilling autographed bibles or gold watches was too shyster-y for a President-elect. Okay, one more screenshot, because really, it’s just too good (remember, it’s “not political”):

In the latest episode of Cyber Shenanigans: Treasury Edition, US Treasury Secretary Janet Yellen’s computer was hacked, with Chinese state-sponsored actors reportedly gaining access to fewer than 50 unclassified files. While it’s comforting to know her cat memes are safe, the hackers also infiltrated the computers of her deputies and over 400 other devices, rummaging through usernames, passwords, and thousands of files. The breach, attributed to Chinese operatives dubbed “Silk Typhoon” (because they work from home in pyjamas?), zeroed in on Treasury’s international affairs, sanctions, and sensitive investigations. The interlopers apparently only got access to the unclassified material, like “law enforcement sensitive” data and notes on foreign financing investigations. China’s Foreign Ministry predictably dismissed the allegations, calling them “unwarranted and groundless,” because of course they did.

South Korea’s president, Yoon Suk Yeol, made history (but not the good kind) when he became the country’s first sitting president to be detained, in his case on rebellion charges. He was arrested in a dramatic raid on the presidential compound after weeks of resistance, following his brief (and bizarre) imposition of martial law last month. If convicted, “the leader of a rebellion can face the death penalty or life imprisonment,” so the saga continues. The embattled (and tone-deaf) president released a video prior to his detention complaining that the “rule of law has completely collapsed in this country.” Jeez, it’s like you can’t even declare martial law to seize control of a country any more.

As if LA residents haven’t suffered enough, insurance companies are taking a hard pass on covering houses in high-risk areas. State Farm pulled a vanishing act last year, dropping 70% of its policyholders in the area, leaving homeowners to rely on California’s insurer of last resort, the FAIR Plan, which comes with higher premiums and less coverage. The FAIR Plan is also facing a financial abyss, with $200 million in cash and $450 billion in potential exposure. When a similar state insurance scheme in Florida, Citizens, ran dry after Hurricane Ian in 2022, residents got hit with a ‘Hurricane Tax’ to cover the shortfall. “I believe we’re marching toward an uninsurable future,” Dave Jones, the former insurance commissioner of California, told the New York Times.

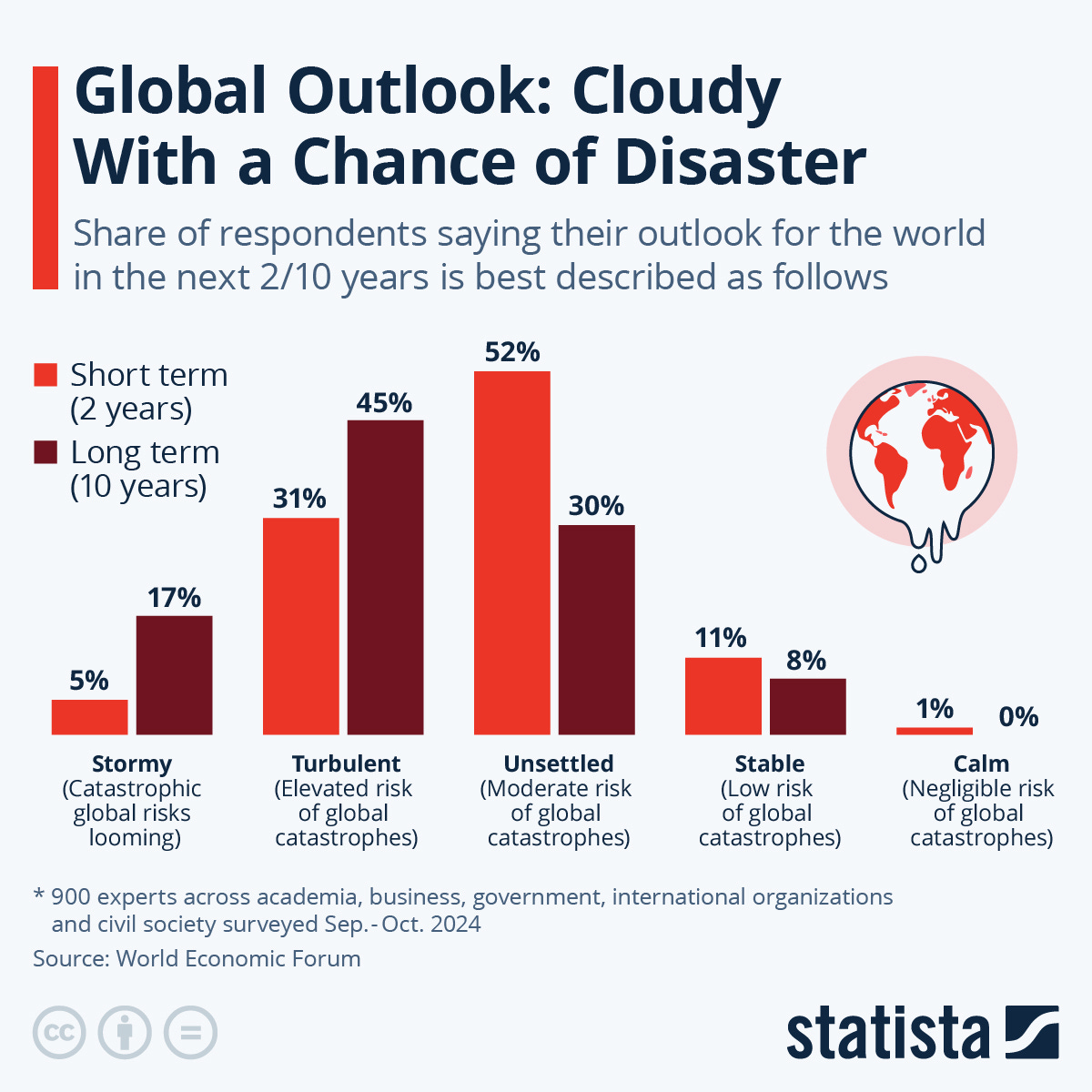

Finally, everyone’s favourite intellectually-inbred cabal of technocratic douchebags, the World Economic Forum, conducted a poll that asked “900 experts across academia, business, government, international organisations and civil society” what their outlook was for global turmoil over the next two years and also for the decade ahead. Presented without comment:

And Global Risk Numero Uno over the next two years, according to the WEF’s shiny new 2025 Global Risks Report?

The ACLED Conflict Index reported in December 2024 that worldwide there were an estimated 233,000 deaths from “state-based armed conflict” in the preceding year. The International Disaster Database estimates at least 11,500 deaths in 2024 from “extreme weather.” The WEF and its poll respondents, however, appear to believe that “misinformation and disinformation” is more pressing and deadly than either of those over the next two years, and also that the 11,500 deaths from extreme weather are more worrying than wars that killed 233,000 people. Whatever metric they’re using to measure risk, it might need adjusting…

Onwards!

Britain Bans Banter

Hot on the heels of their bungled handling of a shocking, widespread, and too-horrible-and-serious-to-summarise-in-the-Weird mass rape scandal, Britain’s Labour government is pushing through an Employment Rights Bill that includes a provision aimed at addressing harassment of workers.

From the Free Speech Union:

Introduced as part of Labour’s broader workplace reforms, the proposed Employment Rights Bill includes Clause 16, which aims to address harassment by third parties, such as customers or clients. The clause amends Section 40 of the Equality Act 2010 (EqA), reintroducing an employer’s duty to prevent harassment from third parties. This duty, previously repealed in 2013, would hold employers liable for harassment by individuals outside the workplace unless they could demonstrate reasonable steps were taken to prevent it.

Harassment is defined in Section 26 of the EqA as “unwanted conduct related to a relevant protected characteristic and which violates a person’s dignity or has the purpose or effect of creating an intimidating, hostile, degrading, humiliating or offensive environment”. While the government insists this represents a high threshold, critics argue the vague language leaves room for over-interpretation, especially when it comes to speech-related incidents.

If Britain hadn’t developed a worrying track record of punishing people for what they say or post online (while police, local authorities, and the government continue to deny, cover up, lie about, or refuse to redress decades of deliberate neglect and, in some cases, complicity in widespread sexual abuse and torture of minors across the country), one could be forgiven for leaving it to the authorities to determine what’s reasonable in good faith. Given the parlous state of speech in the UK, however, trust in the state to determine what can and can’t be said is at an understandable low.

The UK’s Equality and Human Rights Commission (EHRC) warned against Clause 16, noting the danger it posed to free expression due to the steps employers might be obligated to take to avoid litigation.

From the Daily Telegraph:

The EHRC said in evidence to MPs that it fully supported measures to combat sexual harassment in the workplace in the bill.

However, the organisation warned that planned protections around third-party harassment “raise complex questions about the appropriate balance between third parties’ rights to freedom of expression and employees’ protection from harassment and their right to private and family life”.

The watchdog said the issue was even more complex in the case of “philosophical beliefs”, which are protected under the Equality Act as long as they are cogent, serious and apply to an important aspect of human life or behaviour, such as religion, politics and sexuality.

“The legal definition of what amounts to philosophical belief is complex and not well understood by employers,” the EHRC said. “It is arguable that these difficulties may lead to disproportionate restriction of the right to freedom of expression.”

The Steve Bannon lookalike and owner of the JD Wetherspoon pub chain, Sir Tim Martin, told the Daily Telegraph that it “[s]ounds like Big Brother thought control which would be a bureaucratic nightmare to enforce.”

Even “nicotine-stained man-frog” Nigel Farage is weighing in:

“Every pub is a parliament. It is where we discuss the world. If that is restricted they might as well all close.”

If every pub were a parliament, Britain might be run better. Or at least we really would have a polar research ship called Boaty McBoatface instead of the cop-out option, Sir David Attenborough.

Joining what Salman Rushdie dubbed “the ‘but’ brigade,” a spokesperson for the Office for Equality and Opportunity (which is a thing that exists) defended the legislation:

Free speech is a cornerstone of British values, but of course it is right that the Employment Rights Bill protects employees from workplace harassment, which is a serious issue.

As with all cases of harassment under the Equality Act 2010, courts and tribunals will continue to be required to balance rights on the facts of a particular case, including the rights of freedom of expression.

‘Upsetting’ remarks do not fall within the definition of harassment. Employers should not be treated as having failed to take reasonable steps solely because they did not seek to prevent certain conversations. That is not the intent of the bill.

Contrast the spokesperson’s certainty that “the intent of the bill” will affect the outcome of the bill with this New York Times coverage of the arrests and convictions for online speech in the wake of the 2024 summer protests in the UK, and the Crown Prosecution Service’s triumphal summary of recent hate crime convictions, headed with the following cheery warning:

Whether this legislation will bring clarity or chaos to the hospitality sector remains to be seen. So far, the Labour government has shown that its reflex is mud-slinging, dismissing criticism as stemming from the “far right” whenever it doesn’t like what’s being said. Many media outlets have been playing the same game for years, painting concern over free speech as a disingenuous obsession of far-right extremists or a masked defence of racism and supremacism, rather than a long-standing pillar of liberal policy to preserve and protect individual civil liberties and the principle of the public square.

Who’s up for a pint?

Tony Blair Cares A Lot (About Digital ID)

Former Prime Minister and British Smile™ model Tony Blair (pictured above with his wife Cherie) is back on the scene, and this time he’s here to sell you the future, whether you like it or not.

He kicks off his latest manifesto for technocratic utopia, Digital ID Is the Disruption the UK Desperately Needs, with the following:

I make you this confident prediction: in the not too distant future, British people will all have their own unique digital identifier, and will make most transactions through their phone, as citizens with government and as customers with firms.

And we will wonder what all the fuss was about.

Cloaked in the language of “efficiency” and “disruption,” Blair’s pitch deftly sidesteps pesky details like privacy concerns or government overreach. Instead, he serves up a buffet of shiny promises: digital IDs will make governance smoother, cut immigration fraud, and even let us track down those who dare to “overstay” their welcome. What better way to instil trust in government systems than by turning public services into one giant surveillance app?

It’s not all moonbeams and unicorn farts from the man who brought you the sexed-up dossier on Iraq. He offers up this guarded admission that all is not roses:

Is this world also scary in many respects? Absolutely. Most technology, including artificial intelligence (AI), is general-purpose technology. It can be used for good or ill. But as I say when discussing the issue with the governments around the globe with whom my Institute works, there is no point in debating whether it’s a good thing or a bad thing. It is a thing. Possibly the thing. And history teaches us that what is invented by human ingenuity is rarely disinvented by human anxiety.

Naturally, there’s no need to unpack the false dichotomy of invented/disinvented, since things that are invented by human ingenuity are never ever regulated or moderated by laws, policy decisions, or social norms. That’s why you can buy antibiotics without a prescription and carry around a fully automatic firearm with an extended magazine everywhere you go. It’s also why there are no rules governing what can go in food, or skincare products, or the fuel tank of your car. You can’t get in the way of “human ingenuity” just because of “human anxiety.” That would just be…eww, right, Tony?

He assures us that, “properly done,” a digital ID will give individuals “more control over their data,” a statement so rich it might as well be wearing a mink coat and stepping out of a Koenigsegg in Monte Carlo. Never mind the track record of governments mishandling sensitive data or the growing unease over tech companies’ algorithms affecting and interfering in every corner of our lives. According to Blair, “the risks should not blind us to the opportunities.” Translation: Let’s ignore the massive potential for abuse because progress.

Blair also waxes poetic about how digital IDs are the key to solving everything from NHS inefficiencies to crime rates. Facial recognition, data, DNA, it’s a veritable dystopia smoothie he’s knocking up in his Blair Blender of Bad Ideas.

He cites “[c]ountries from Singapore to India to the UAE” as examples of the successful deployment of digital ID and other surveillance technology, forgetting that none of them are particularly compelling poster children for liberal democracy (a term that does not appear in his article even once, by the way). Singapore has been a borderline dictatorship for decades, India’s Aadhaar system has pulled almost every citizen into a biometric dragnet, and between Makani numbers and robot police, Dubai is at the forefront of intrusive tracking and law enforcement technology.

Here’s another paragraph that isn’t selling what it thinks it’s selling:

Around the world, governments are moving in this direction. Of the 45 governments we work with, I would estimate that three-quarters of them are embracing some form of digital ID. The president of the World Bank, Ajay Banga, has said it is a top priority for the bank’s work with leaders.

His closing is possibly the most telling line:

Our present system isn’t working. This is a time for shaking up. For once-in-a-generation disruption. Digital ID is a good place to start.

If there’s one thing Tony Blair loves, it seems, it’s a massive shake-up where the costs and risks are someone else’s problem. After all, his track record of bold interventions has always worked out so well…just ask Iraq.

US Police FaRT On Rights

Ah, the marvels of modern technology! In a dazzling display of humanity’s capacity to combine innovation with utter recklessness, American law enforcement has found a way to turn artificial intelligence into a tool for all-too-real injustice.

The Washington Post just published an eight-month investigation into the use of facial recognition technology (FaRT) by law enforcement, and it ain’t pretty. WaPo “found that law enforcement agencies across the nation are using the artificial intelligence tools in a way they were never intended to be used: as a shortcut to finding and arresting suspects without other evidence.”

One case study is an assault case in Pagedale, Missouri, in which poor-quality security camera footage was used to wrongly accuse a man with no connection to the crime.

Though the city’s facial recognition policy warns officers that the results of the technology are “nonscientific” and “should not be used as the sole basis for any decision,” [county transit police detective Matthew] Shute proceeded to build a case against one of the AI-generated results: Christopher Gatlin, a 29-year-old father of four who had no apparent ties to the crime scene nor a history of violent offenses, as Shute would later acknowledge.

Nothing says “justice” like letting a machine with “unspecified accuracy” decide someone’s fate (emphasis mine).

Most police departments are not required to report that they use facial recognition, and few keep records of their use of the technology. The Post reviewed documents from 23 police departments where detailed records about facial recognition use are available and found that 15 departments spanning 12 states arrested suspects identified through AI matches without any independent evidence connecting them to the crime — in most cases contradicting their own internal policies requiring officers to corroborate all leads found through AI.

Some law enforcement officers using the technology appeared to abandon traditional policing standards and treat software suggestions as facts, The Post found. One police report referred to an uncorroborated AI result as a “100% match.” Another said police used the software to “immediately and unquestionably” identify a suspected thief.

Despite a general tumescence for “transparency” and “accountability” from authorities where the public is concerned, the story is decidedly different for the cops.

The total number of false arrests fueled by AI matches is impossible to know, because police and prosecutors rarely tell the public when they have used these tools and, in all but seven states, no laws explicitly require it to be disclosed.

Hundreds of police departments in Michigan and Florida have the ability to run images through statewide facial recognition programs, but the number that do so is unknown. One leading maker of facial recognition software, Clearview AI, has said in a pitch to potential investors that 3,100 police departments use its tools — more than one-sixth of all U.S. law enforcement agencies. The company does not publicly identify most of its customers.

Back to the case of Christopher Gatlin.

When detectives presented the victim, Michael Feldman, with a lineup that included Gatlin’s photo, Feldman expressed uncertainty. In fact, he initially pointed to someone else. But the detectives, channeling their inner game show hosts, encouraged him to “think harder” and “picture these guys wearing stocking caps.” Feldman eventually circled Gatlin’s photo.

This half-hearted identification became the cornerstone of the case. Months later, when Detective Shute was asked in court whether he considered this process “a reliable way to get a legitimate identification,” he admitted, “I do not.” Brave of him to realise that after derailing someone’s life. Gatlin ended up spending 16 months in jail before the judge in his case tossed out the improper identification process relied upon by the prosecution.

Gatlin’s public defender, Brooke Lima, described how the police tried to find a suspect.

“It seemed so outrageously dystopian to me,” Lima said in an interview. “You’ve got a search engine that is determined to give you a result, and it’s only going to search for people who have been arrested for something, and it’s going to try to find somebody among that limited population that comes closest to this snippet of a half-face that you found.”

Facial recognition technology works well in controlled lab conditions, but throw in some low-res security footage, i.e. anything from the real world, and the software is basically playing Guess Who with humans. On top of that, there’s a well-documented tendency among users to accept search results or determinations more or less blindly. Researchers have even coined a term for it: “automation bias.” It’s the same cognitive weakness that makes someone follow Google Maps directions into a lake, except instead of a soggy Prius, automation bias in policing means innocent people go to jail for crimes they didn’t commit.

The way that the algorithms are developed also leads to a tremendous racial bias.

Federal testing in 2019 showed that Asian and Black people were up to 100 times as likely to be misidentified by some software as White men, potentially because the photos used to train some of the algorithms were initially skewed toward White men.

In interviews with The Post, all eight people known to have been wrongly arrested said the experience had left permanent scars: lost jobs, damaged relationships, missed payments on car and home loans. Some said they had to send their children to counseling to work through the trauma of watching their mother or father get arrested on the front lawn.

Most said they also developed a fear of police.

Put more bluntly by Alonzo Sawyer, a wrongly arrested man who “only got out of jail because his wife drove 90 miles to personally confront a probation officer whom police had pressured into falsely confirming her husband as the attacker”:

“I knew I was innocent, so how do I beat a machine?”

How indeed.

There’s one thing we know about FaRT: It stinks.

The All-Seeing Allstate

Texas Attorney General Ken Paxton has filed a lawsuit against American insurance giant Allstate for secretly tracking customers and using the information gathered to raise their premiums.

From his office’s press release:

Texas Attorney General Ken Paxton sued Allstate and its subsidiary, Arity (“Allstate”), for unlawfully collecting, using, and selling data about the location and movement of Texans’ cell phones through secretly embedded software in mobile apps, such as Life360. Allstate and other insurers then used the covertly obtained data to justify raising Texans’ insurance rates.

The complaint, filed in the District Court of Montgomery County, Texas, details how Allstate “conspired to secretly collect and sell ‘trillions of miles’ of consumers’ ‘driving behavior’ data from mobile devices, in-car devices, and vehicles…[and then] used the illicitly obtained data to build the ‘world’s largest driving behavior database,’ housing the driving behavior of over 45 million Americans.”

According to the complaint, not only was Allstate raising premiums based on illegally obtained customer data, it was also aiming to “profit from selling the driving behavior data to third parties, including other car insurance carriers.”

The court documents outline a practice so creepy that you’d have been called paranoid for suggesting it:

Defendants covertly collected much of their “trillions of miles” of data by maintaining active connections with consumers’ mobile devices and harvesting the data directly from their phone. Defendants developed and integrated software into third-party apps so that when a consumer downloaded the third-party app onto their phone, they also unwittingly downloaded Defendants’ software. Once Defendants’ software was downloaded onto a consumer’s device, Defendants could monitor the consumer’s location and movement in real-time.

Through the software integrated into the third-party apps, Defendants directly pulled a litany of valuable data directly from consumers’ mobile phones. The data included a phone’s geolocation data, accelerometer data, magnetometer data, and gyroscopic data, which monitors details such as the phone’s altitude, longitude, latitude, bearing, GPS time, speed, and accuracy.

To encourage developers to adopt Defendants’ software, Defendants paid app developers millions of dollars to integrate Defendants’ software into their apps. Defendants further incentivized developer participation by creating generous bonus incentives for increasing the size of their dataset. According to Defendants, the apps integrated with their software currently allow them to “capture[] [data] every 15 seconds or less” from “40 [million] active mobile connections.”

The outcome of the data harvesting was predictable, even though I’ve been scoffed at every time I’ve suggested that this is where this kind of data consolidation is headed.

If a consumer requested a car insurance quote or had to renew their coverage, Insurers would access that consumer’s driving behavior in Defendants’ database. Insurers then used that consumer’s data to justify increasing their car insurance premiums, denying them coverage, or dropping them from coverage.

According to Paxton’s press release, “[t]his lawsuit follows Attorney General Paxton’s lawsuit against General Motors and his ongoing investigations into several car manufacturers for secretly collecting and selling drivers’ highly detailed driving data.”

Well, at least it isn’t a systemic problem.

That’s it for this week’s Weird, everyone! I hope you enjoyed it.

Outro music is I Don’t Know How But They Found Me with Do It All The Time, a cheerful pop song to soften the inexorable extension of surveillance technology into our lives.

Stay sane, friends.

We’re taking over the world

A little victimless crime

and when I’m taking your innocence

I’ll be corrupting your mind

Love your stack but it's clickbait to say "Britain bans banter". It's the British parliament considering a bill to ban banter. Not saying it won't happen, but might as well refrain from exaggeration.

AI, the tracking devices in new(er) cars and even tires, toll roads/toll tags, big cities and the multitude of cameras that cover nearly every corner, cameras on major highways, airports, our cell phones, laptops, and so many different electronic devices that people have in their homes…

I shudder to think about the gigantic databases that house all this data because you know someone has aggregated all available data. It’s the exact reason I drive a 25 year old SUV, avoid any devices/appliances that can connect to my WiFi save for this blasted iPhone (soon to be history!), I’ve stopped flying anywhere, and I live totally off grid. 🤣 It’s a bit of comfort but if the poop hits the fan, they have a lot of unwoke data on me to easily label me internment camp ready!